NVIDIA GeForce GTS 450 GF106 Video Card

Written by Olin Coles

Monday, 13 September 2010

GeForce GTS 450 Video Card Review

NVIDIA recently earned its reputation back with the GF104 Fermi-based GeForce GTX 460, a video card that dominated its price point even before it dropped to $179 USD and completely ruled the middle market. Priced to launch at $129, the NVIDIA GeForce GTS 450 packs 192 CUDA cores into its 40nm GF106 Fermi GPU and adds 1GB of GDDR5 memory. Benchmark Reviews overclocked our GTS 450 to nearly 1GHz, and even paired two of them together in SLI. NVIDIA expects the new GTS 450 to compete against the Radeon HD 5750 at 1680x1050, but we learned from the GTX 460 that there's usually more performance reach than they suggest. Since the price to performance ratio is critical to this entry-level segment, Benchmark Reviews also tests the NVIDIA GeForce GTS 450 1GB against the more-expensive Radeon HD 5770 using several of the most demanding DirectX-11 PC video games available.

The majority of PC gamers use either 1280x1024 or 1680x1050 monitor resolutions, which are considerably less demanding than the 1920x1200 resolution we use to test upper-echelon graphics solutions. Set to these less-intensive screen resolutions, middle-market video cards are capable of reproducing the same high quality graphics that top-end products do at the higher resolutions. Sure, the game engine matters, but it's the display resolution and post-processing effects that impact performance most. This is what makes a product like NVIDIA's GeForce GTS 450 so relevant to gamers. Of course, the massive overclock it accepts certainly helps to push this product into higher price segments.

NVIDIA's GeForce GTX 460 uses a pared-down version of the GF104 GPU, which is actually capable of packing eight Streaming Multiprocessors (SMs) for a total of 384 possible CUDA cores and 64 Texture Units. GF106 is best conceived as one half of the GF104, allowing it to deliver four SMs for 192 CUDA cores and 32 Texture Units. The NVIDIA GeForce GTS 450 comes clocked at 783MHz, with shaders operating at 1566MHz. Its 1GB of 128-bit GDDR5 video frame buffer is clocked at 902MHz, and works especially well with large-environment games like Starcraft II or BattleForge.
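As a rough illustration of what the 128-bit bus and 902MHz memory clock mean in practice, the sketch below estimates peak theoretical memory bandwidth. It assumes the usual GDDR5 behaviour of four data transfers per memory clock; the function name and the decimal GB convention are choices made for this example, not something taken from NVIDIA's specifications.

```python
def gddr5_bandwidth_gb_s(memory_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak theoretical bandwidth in GB/s (1 GB = 10**9 bytes)."""
    transfers_per_clock = 4  # assumption: GDDR5 moves four data words per memory clock
    bytes_per_transfer = bus_width_bits / 8  # bus width expressed in bytes
    return memory_clock_mhz * 1e6 * transfers_per_clock * bytes_per_transfer / 1e9


# Figures quoted in the review: 902MHz GDDR5 on a 128-bit bus.
gts450_bandwidth = gddr5_bandwidth_gb_s(902, 128)
print(f"GeForce GTS 450: {gts450_bandwidth:.1f} GB/s")
```

This is a ceiling rather than a measured figure; real-world throughput depends on access patterns and the memory controller.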

NVIDIA GeForce GTS 450 Video Card

PC-based video games are still the best way to experience realistic effects and immerse yourself in the battle. Consoles do their part, but only high-precision video cards offer the sharp clarity and definition needed to enjoy detailed graphics. Thanks to the new GF106 GPU, the GeForce GTS 450 video card offers enough headroom for budget-minded overclockers to drive out additional FPS performance while keeping temperatures cool. In this article, Benchmark Reviews tests the GeForce GTS 450 against some of the best video cards within the price segment by using several of the most demanding PC video game titles and benchmark software available: Aliens vs Predator, Battlefield: Bad Company 2, BattleForge, Crysis Warhead, Far Cry 2, Resident Evil 5, and Metro 2033.

It used to be that PC video games such as Crysis and Far Cry 2 were as demanding as you could get, but that was before DirectX-11 brought tessellation to the forefront of graphics. DX11 now adds heavy particle and turbulence effects to video games, and titles such as Metro 2033 demand the most powerful graphics processing available. NVIDIA's GF100 GPU was their first graphics processor to support DirectX-11 features such as tessellation and DirectCompute, and the GeForce GTX 400-series offers an excellent combination of performance and value for games like Battlefield: Bad Company 2 or BattleForge.

At the center of every new technology is purpose, and NVIDIA has designed their Fermi GF106 GPU with an end-goal of redefining the video game experience through significant graphics processor innovations. Disruptive technology often changes the way users interact with computers, and GeForce GTS 450 video cards are complex tools built to arrive at one simple destination: immersive entertainment at an entry-level price, especially when paired with NVIDIA GeForce 3D Vision. The experience is further improved with NVIDIA System Tools software, which includes NVIDIA Performance Group for GPU overclocking and NVIDIA System Monitor, which displays real-time temperatures. These tools help gamers and overclockers get the most out of their investment.

Manufacturer: NVIDIA Corporation

Full Disclosure: The product sample used in this article has been provided by NVIDIA.

NVIDIA Fermi Features

In today's complex graphics, tessellation offers the means to store massive amounts of coarse geometry, with expand-on-demand functionality. In the NVIDIA GF104 GPU (GF100 series), tessellation also enables more complex animations. In terms of model scalability, dynamic Level of Detail (LOD) allows quality and performance trade-offs, delivering better picture quality wherever it can do so without a performance penalty. Composed of three layers (original geometry, tessellation geometry, and displacement map), the final product is far more detailed in shade and data-expansion than if it were constructed with bump-map technology. In plain terms, tessellation gives the peaks and valleys with shadow detail in-between, while previous-generation technology (bump-mapping) would only give the illusion of detail.
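The three layers described above (original geometry, tessellated geometry, displacement map) can be illustrated with a deliberately simplified sketch. It works on a 2D polyline rather than a 3D mesh, uses plain linear subdivision instead of the smooth surface patches real hardware tessellation evaluates, and the `bumps` displacement function is a hypothetical stand-in for a displacement map texture.

```python
import math


def tessellate(coarse, factor):
    """Split every segment of a coarse polyline into `factor` equal pieces."""
    fine = []
    for (x0, y0), (x1, y1) in zip(coarse, coarse[1:]):
        for i in range(factor):
            t = i / factor  # position along the current segment, 0 <= t < 1
            fine.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    fine.append(coarse[-1])  # keep the final original vertex
    return fine


def displace(points, height_map):
    """Offset each vertex vertically by the value sampled from the height map."""
    return [(x, y + height_map(x)) for x, y in points]


def bumps(x):
    """Hypothetical displacement map: small ripples along the surface."""
    return 0.1 * math.sin(8 * x)


coarse = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.5)]  # layer 1: original geometry
refined = tessellate(coarse, factor=4)          # layer 2: tessellated geometry
detailed = displace(refined, bumps)             # layer 3: displacement applied
print(len(coarse), "coarse vertices ->", len(detailed), "detailed vertices")
```

Raising `factor` for nearby objects and lowering it for distant ones is the essence of the dynamic LOD trade-off mentioned above: only the compact coarse geometry needs to be stored, and detail is expanded on demand.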

Stages of Tessellation with NVIDIA Fermi Graphics

Using GPU-based tessellation, a game developer can send a compact geometric representation of an object or character and the tessellation unit can produce the correct geometric complexity for the specific scene. Consider the "Imp" character illustrated above. On the far left we see the initial quad mesh used to model the general outline of the figure; this representation is quite compact even when compared to typical game assets. The two middle images of the character are created by finely tessellating the description at the left. The result is a very smooth appearance, free of any of the faceting that resulted from limited geometry. Unfortunately this character, while smooth, is no more detailed than the coarse mesh. The final image on the right was created by applying a displacement map to the smoothly tessellated character in the third image.

What's new in Fermi?

With any new technology, consumers want to know what's new in the product. The goal of this article is to share in-depth information surrounding the Fermi architecture, as well as the new functionality unlocked in GF100. For clarity, the 'GF' letters used in the GF100 GPU name are not an abbreviation for 'GeForce'; they actually denote that this GPU is a Graphics solution based on the Fermi architecture. The next generation of NVIDIA GeForce-series desktop video cards will use the GF100 to promote the following new features:

Tessellation in DirectX-11

Control hull shaders run DX11 pre-expansion routines, and operate explicitly in parallel across all points. Domain shaders run post-expansion operations on maps (u/v or x/y/z/w) and are also implicitly parallel. Fixed function tessellation is configured by Level of Detail (LOD) based on output from the control hull shader, and can also produce triangles and lines if requested. Tessellation is new to NVIDIA GPUs, and was not part of GT200 because of geometry bandwidth bottlenecks from sequential rendering/execution semantics.

For the GF100-series graphics processor, NVIDIA has added new PolyMorph and Raster engines to handle world-space processing (PolyMorph) and screen-space processing (Raster). There are eight PolyMorph engines and two Raster engines on the GF104, which depend on an improved L2 cache to keep buffered geometric data produced by the pipeline on-die.

Four-Offset Gather4

The texture unit on previous processor architectures operated at the core clock of the GPU. On GF104, the texture units run at a higher clock, leading to improved texturing performance for the same number of units. GF104's texture units now add support for DirectX-11's BC6H and BC7 texture compression formats, reducing the memory footprint of HDR textures and render targets. The texture units also support jittered sampling through DirectX-11's four-offset Gather4 feature, allowing four texels to be fetched from a 128×128 pixel grid with a single texture instruction. NVIDIA GF100-series GPUs implement DirectX-11 four-offset Gather4 in hardware, greatly accelerating shadow mapping, ambient occlusion, and post-processing algorithms. With jittered sampling, games can implement smoother soft shadows or custom texture filters efficiently. The previous GT200 GPU did not offer coverage samples, while the GF100-series can deliver 32x CSAA.
GF106 Compute for Gaming

As developers continue to search for novel ways to improve their graphics engines, the GPU will need to excel at a diverse and growing set of graphics algorithms. Since these algorithms are executed via general compute APIs, a robust compute architecture is fundamental to a GPU's graphical capabilities. In essence, one can think of compute as the new programmable shader. GF100's compute architecture is designed to address a wider range of algorithms and to facilitate more pervasive use of the GPU for solving parallel problems. Many algorithms, such as ray tracing, physics, and AI, cannot exploit shared memory because program memory locality is only revealed at runtime. GF106's cache architecture was designed with these problems in mind. With up to 48 KB of L1 cache per Streaming Multiprocessor (SM) and a global L2 cache, threads that access the same memory locations at runtime automatically run faster, irrespective of the choice of algorithm.

NVIDIA Codename NEXUS brings CPU and GPU code development together in Microsoft Visual Studio 2008 for a shared process timeline. NEXUS also introduces the first hardware-based shader debugger. NVIDIA GF100-series GPUs are the first to offer full support for C++, the programming language of choice among game developers. To ease the transition to GPU programming, NVIDIA developed Nexus as a Microsoft Visual Studio programming environment for the GPU. Together with new hardware features that provide better debugging support, developers will be able to enjoy CPU-class application development on the GPU. The end result is C++ and Visual Studio integration that brings HPC users onto the same development platform. NVIDIA offers several paths to deliver compute functionality on the GF106 GPU, such as CUDA C++ for video games. Image processing, simulation, and hybrid rendering are three primary functions of GPU compute for gaming.
Using NVIDIA GF100-series GPUs, interactive ray tracing becomes possible for the first time on a standard PC. Ray tracing performance on the NVIDIA GF100 is roughly 4x faster than it was on the GT200 GPU, according to NVIDIA tests. AI/path finding is a compute-intensive process well suited for GPUs. The NVIDIA GF100 can handle AI obstacles approximately 3x better than the GT200. Benefits from this improvement are faster collision avoidance and shortest path searches for higher-performance path finding.

NVIDIA GigaThread Thread Scheduler

One of the most important technologies of the Fermi architecture is its two-level, distributed thread scheduler. At the chip level, a global work distribution engine schedules thread blocks to various SMs, while at the SM level, each warp scheduler distributes warps of 32 threads to its execution units. The first generation GigaThread engine introduced in G80 managed up to 12,288 threads in real-time. The Fermi architecture improves on this foundation by providing not only greater thread throughput, but dramatically faster context switching, concurrent kernel execution, and improved thread block scheduling.

NVIDIA GF106 GPU Fermi Architecture

Based on the Fermi architecture, NVIDIA's latest GPU is codenamed GF106 and powers the GeForce GTS 450. In this article, Benchmark Reviews explains the technical architecture behind NVIDIA's GF106 graphics processor and offers an insight into upcoming Fermi-based GeForce video cards. For those who are not familiar, NVIDIA's GF100 GPU was their first graphics processor to support DirectX-11 hardware features such as tessellation and DirectCompute, while also adding heavy particle and turbulence effects. The GF100 GPU is also the successor to the GT200 graphics processor, which launched in the GeForce GTX 280 video card back in June 2008.
NVIDIA has since redefined their focus, allowing subsequent GF100, GF104, and now GF106 GPUs to prove their dedication towards next generation gaming effects such as raytracing, order-independent transparency, and fluid simulations. Processor cores have grown from 128 (G80) to 240 (GT200); they reach 512 in the GF100 and earn the title of NVIDIA CUDA (Compute Unified Device Architecture) cores. GF100 was not another incremental GPU step-up like we had going from G80 to GT200. GF100 featured 512 CUDA cores, while GF104 shipped with 336 cores enabled on the GeForce GTX 460. Effectively cutting the eight SMs on GF104 in half, NVIDIA's GF106 is good for 192 CUDA cores from four SMs. The key here is not only the name, but that the name now implies an emphasis on something more than just graphics.

Each Fermi CUDA processor core has a fully pipelined integer arithmetic logic unit (ALU) and floating point unit (FPU). GF106 implements the IEEE 754-2008 floating-point standard, providing the fused multiply-add (FMA) instruction for both single and double precision arithmetic. FMA improves over a multiply-add (MAD) instruction by doing the multiplication and addition with a single final rounding step, with no loss of precision in the addition. FMA minimizes rendering errors in closely overlapping triangles.

GF106 implements 192 CUDA cores, organized as 4 SMs of 48 cores each. Each SM is a highly parallel multiprocessor supporting up to 32 warps at any given time (four Dispatch Units per SM deliver two dispatched instructions per warp for four total instructions per clock per SM). Each CUDA core is a unified processor core that executes vertex, pixel, geometry, and compute kernels. A unified L2 cache architecture (512KB on 1GB cards) services load, store, and texture operations. GF106 is designed to offer a total of 16 ROP units for pixel blending, antialiasing, and atomic memory operations. The ROP units are organized in two groups of eight. Each group is serviced by a 64-bit memory controller. The memory controller, L2 cache, and ROP group are closely coupled, so scaling one unit automatically scales the others.
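The difference between MAD and FMA rounding can be seen with a small sketch. It runs on the CPU in double precision rather than on the GPU, and it emulates the fused operation by computing the exact result with Python's `fractions.Fraction` and rounding once; the function names and input values are illustrative choices, not NVIDIA's implementation.

```python
from fractions import Fraction


def mad(a: float, b: float, c: float) -> float:
    """Multiply-add with two roundings: the product is rounded, then the sum."""
    return a * b + c


def fma_emulated(a: float, b: float, c: float) -> float:
    """Fused multiply-add emulation: exact arithmetic, one final rounding."""
    exact = Fraction(a) * Fraction(b) + Fraction(c)
    return float(exact)  # the single rounding step


# Inputs chosen so the exact product (1 - 2**-60) cannot be stored in a double.
a = 1.0 + 2.0 ** -30
b = 1.0 - 2.0 ** -30
c = -1.0

print("MAD:", mad(a, b, c))           # the tiny difference is rounded away
print("FMA:", fma_emulated(a, b, c))  # the tiny difference survives
```

With MAD the intermediate product rounds to exactly 1.0, so the small residual is lost before the addition happens. Keeping that residual is what helps when two nearly coincident surfaces must be ordered consistently.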

Based on Fermi's third-generation Streaming Multiprocessor (SM) architecture, GF106 could be considered a divided GF104. NVIDIA GeForce GF100-series Fermi GPUs are based on a scalable array of Graphics Processing Clusters (GPCs), Streaming Multiprocessors (SMs), and memory controllers. NVIDIA's GF100 GPU implemented four GPCs, sixteen SMs, and six memory controllers. GF104 implements two GPCs, eight SMs, and four memory controllers. GF106, in turn, houses one GPC, four SMs, and two memory controllers. Where each SM contained 32 CUDA cores in the GF100, NVIDIA configured GF104 with 48 cores per SM, which has been repeated for GF106. As expected, NVIDIA Fermi-series products are launching with different configurations of GPCs, SMs, and memory controllers to address different price points.

CPU commands are read by the GPU via the Host Interface. The GigaThread Engine fetches the specified data from system memory and copies it to the frame buffer. GF106 implements two 64-bit GDDR5 memory controllers (128-bit total) to facilitate high bandwidth access to the frame buffer. The GigaThread Engine then creates and dispatches thread blocks to various SMs. Individual SMs in turn schedule warps (groups of 32 threads) to CUDA cores and other execution units. The GigaThread Engine also redistributes work to the SMs when work expansion occurs in the graphics pipeline, such as after the tessellation and rasterization stages.

GF106 Specifications
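The configurations quoted in this section can be tallied with a short sketch. The counts of GPCs, SMs, cores per SM, and memory controllers are the ones given in the text; the `FermiGPU` class, the assumption of a 64-bit interface on every memory controller, and the `warps_needed` helper are illustrative names introduced here.

```python
import math
from dataclasses import dataclass

WARP_SIZE = 32  # threads per warp, as described for the Fermi warp scheduler


@dataclass(frozen=True)
class FermiGPU:
    name: str
    gpcs: int
    sms: int
    cores_per_sm: int
    memory_controllers: int

    @property
    def cuda_cores(self) -> int:
        """Total CUDA cores on the full die."""
        return self.sms * self.cores_per_sm

    @property
    def bus_width_bits(self) -> int:
        """Memory bus width, assuming 64 bits per controller."""
        return self.memory_controllers * 64


def warps_needed(threads: int) -> int:
    """Number of 32-thread warps required to cover a thread block."""
    return math.ceil(threads / WARP_SIZE)


GPUS = [
    FermiGPU("GF100", gpcs=4, sms=16, cores_per_sm=32, memory_controllers=6),
    FermiGPU("GF104", gpcs=2, sms=8, cores_per_sm=48, memory_controllers=4),
    FermiGPU("GF106", gpcs=1, sms=4, cores_per_sm=48, memory_controllers=2),
]

for gpu in GPUS:
    print(f"{gpu.name}: {gpu.cuda_cores} CUDA cores, {gpu.bus_width_bits}-bit bus")
```

These are full-die figures; shipping cards may disable units, as the GeForce GTX 460 does with GF104.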

GeForce 400-Series Specifications

GeForce GTS 450 Partner Products

NVIDIA sells their graphics technology to a large host of add-in card (AIC) partners, and many are expected to take advantage of the GTS 450's competitively focused price point. Companies will offer the GTS 450 with 1GB of GDDR5 video memory. This section offers a preview of the design implementations used by some of the most popular finished-goods companies:

ASUS ENGTS450-DirectCU-TOP-1G Video Card

ASUS will immediately launch several versions of the GeForce GTS 450, using their ENGTS450 part number. The ASUS ENGTS450-DirectCU-TOP-1G (above) will use an internally exhausting custom cooling solution on this factory-overclocked model.

Gigabyte GV-N450OC-1GI GTS 450 Video Card

Gigabyte Technology is quickly gaining popularity as an NVIDIA AIC partner, and will have their custom-cooled GTS 450 available at launch. The Gigabyte GV-N450OC-1GI is their internally exhausting factory-overclocked 1GB model.

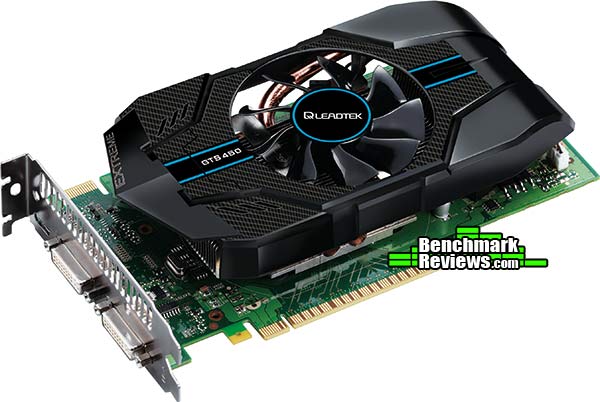

Leadtek Winfast GTS 450 Video Card

The Leadtek Winfast GTS 450 is very similar to the reference version, but shaves off some of the plastic shroud and loses its external exhaust feature on this factory-overclocked model.

MSI N450GTS-Cyclone-1GD5-OC Video Card

MSI, the name known by master overclockers world-wide, will have a 1GB GeForce GTS 450 named Cyclone (MSI N450GTS-Cyclone-1GD5-OC) that comes factory overclocked. This video card features MSI's internally-exhausting 'Cyclone' GPU cooler, which loosely resembles the reference design without the plastic shroud.

PNY GTS 450 Overclocked XLR8 Video Card

Featuring an externally-exhausting reference cooling solution, the PNY GTS 450 Overclocked XLR8 video card will come bundled with a copy of Starcraft II.

Sparkle GeForce GTS 450 Passive Video Card

There are few AIC partners offering externally-exhausting versions of the GeForce GTS 450, and even fewer with passive cooling options. The Sparkle GeForce GTS 450 Passive video card is the only silent version we've seen available at launch.

Zotac GeForce GTS 450 AMP! Edition Video Card

ZOTAC International goes beyond a custom cooling design, and also offers a unique video output selection on their version of the GeForce GTS 450. The ZOTAC GeForce GTS 450 series are the first graphics cards to ship with the new ZOTAC Boost Premium software bundle that features five applications, including vReveal 2.0, Nero Vision Xtra, Cooliris, Kylo and XBMC media center. Look for the ZOTAC GTS 450 AMP! Edition to add DisplayPort to the standard dual-DVI and HDMI output ports.

Closer Look: NVIDIA GeForce GTS 450

Game developers have had an exciting year thanks to Microsoft DirectX-11, introduced with Windows 7 and made available as an update for Windows Vista. This has allowed video games (released for the PC platform) to look better than ever. DirectX-11 is the leap in video game software development we've been waiting for. Screen Space Ambient Occlusion (SSAO) is given emphasis in DX11, allowing some of the most detailed computer textures gamers have ever seen. Realistic cracks in mud with definable depth and splintered tree bark make the game more realistic, but they also make new demands on the graphics hardware. This new level of graphical detail requires a new level of computer hardware: DX11-compliant hardware. Tessellation adds a tremendous level of strain on the GPU, making previous graphics hardware virtually obsolete with new DX11 game titles.

The NVIDIA GeForce GTS 450 video card series offers budget gamers a decent dose of graphics processing power for their money. But the GeForce GTS 450 is more than just a tool for video games; it's also a tool for professional environments that make use of GPGPU-accelerated compute-friendly software, such as Adobe Premiere Pro and Photoshop.

The reference NVIDIA GeForce GTS 450 design measures 2.67 inches tall (double-bay) by 4.376 inches (111.15mm) wide, with an 8.25-inch (209.55mm) long graphics card profile. These dimensions are identical to the recently released GeForce GTX 460 video card, but the GTS 450 will be offered with 1GB of GDDR5 memory as its only frame buffer option. NVIDIA's reference cooler design uses a center-mounted 75mm fan and heatsink, which is more than adequate for this middle-market Fermi GF106 GPU. This externally-exhausting cooling solution is ideal for overclocked computers, or those employing SLI sets. Our temperature results are discussed in detail later in this article.

As with most past GeForce products, the Fermi GPU offers two video output 'lanes', so the three output ports cannot all operate at once. NVIDIA has retained two DVI outputs on the GeForce GTS 450, so dual-monitor configurations can be utilized. By adding a second video card, users can enjoy GeForce 3D-Vision Surround functionality. Other changes occur in more subtle ways, such as replacing the S-Video connection with a more relevant (mini) HDMI 1.3a A/V output. In past GeForce products, the HDMI port was limited to video-only output and required a separate audio output. Native HDMI 1.3a support is available on the GeForce GTS 450, which allows uncompressed audio/video output to connected HDTVs or compatible monitors.

The 40nm fabrication process opens the die for more transistors; by comparison there are 1.4 billion in the GT200 GPU (GeForce GTX 285), while the lower-end GF106 packs 1.17 billion onto the GTS 450. Even with its mid-range intentions, the PCB is a busy place for the GeForce GTS 450. Four (of six possible) DRAM ICs are positioned on the exposed backside of the printed circuit board, and combine for 1GB of GDDR5 video frame buffer memory. These 'Green'-branded GDDR5 components are similar to those used on the other Fermi products, as well as several Radeon products. The Samsung K4G10325FE-HC05 is rated for 1000MHz and 0.5ns response time at 1.5V ± 0.045V VDD/VDDQ.

Despite being approximately half of a mid-range GF104 graphics processor, the GTS 450's GF106 GPU still receives a heavy-duty thermal management system for optimal temperature control. NVIDIA employs a dual-slot cooling system on the reference GTS 450 video card, and by removing four retaining screws the entire heatsink and plastic shroud come away from the circuit board. Two copper heat-pipe rods span away from the copper base into two opposite sets of aluminum fins. The entire unit is cooled with a 75mm fan, which kept our test samples extremely cool at idle and maintained very good cooling once the card received unnaturally high stress loads with FurMark (covered later in this article).

Launching at the affordable $130 retail price point, NVIDIA was wise to support dual-card SLI sets on the GTS 450. Triple-SLI capability is not supported, since the $390 cost of three video cards would be better used to purchase either two GTX 470s or one GTX 480. Benchmark Reviews has tested two GeForce GTS 450s combined into SLI and shared some of the results in this article, but our NVIDIA GeForce GTS 450 SLI Performance Scaling report goes into more detail with additional test comparisons. In the next several sections Benchmark Reviews will explain our video card test methodology, followed by a performance comparison of the NVIDIA GeForce GTS 450 against the Radeon HD 5770 and several of the most popular entry-level graphics accelerators available.

VGA Testing Methodology

The Microsoft DirectX-11 graphics API is native to the Microsoft Windows 7 Operating System, which will be the primary O/S for our test platform. DX11 is also available as a Microsoft Update for the Windows Vista O/S, so our test results apply to both versions of the Operating System. The majority of benchmark tests used in this article compare DX11 performance; however, some high-demand DX10 tests have also been included.

According to the Steam Hardware Survey published for the month ending May 2010, the most popular gaming resolution is 1280x1024 (17-19" standard LCD monitors). However, because this 1.31MP resolution is considered 'low' by most standards, our benchmark performance tests concentrate on higher-demand resolutions: 1.76MP 1680x1050 (22-24" widescreen LCD) and 2.30MP 1920x1200 (24-28" widescreen LCD monitors). These resolutions are more likely to be used by high-end graphics solutions, such as those tested in this article.
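The megapixel figures quoted for each resolution follow directly from width times height, and the same arithmetic gives a rough sense of how much more pixel work the higher resolutions demand. The sketch below is only that arithmetic; the dictionary and function names are made up for this example, and pixel count is of course not the only factor in rendering load.

```python
RESOLUTIONS = {
    "1280x1024": (1280, 1024),
    "1680x1050": (1680, 1050),
    "1920x1200": (1920, 1200),
}


def megapixels(width: int, height: int) -> float:
    """Pixels per frame, in millions."""
    return width * height / 1e6


baseline = megapixels(*RESOLUTIONS["1280x1024"])
for name, (width, height) in RESOLUTIONS.items():
    mp = megapixels(width, height)
    # Relative pixel count compared with the most popular 1280x1024 resolution.
    print(f"{name}: {mp:.2f}MP ({mp / baseline:.2f}x the pixels of 1280x1024)")
```

Moving from 1280x1024 to 1920x1200 means roughly three quarters more pixels per frame, which is why the higher resolutions separate video cards more clearly.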

A combination of synthetic and video game benchmark tests has been used in this article to illustrate relative performance among graphics solutions. Our benchmark frame rate results are not intended to represent real-world graphics performance, as this experience would change based on supporting hardware and the perception of individuals playing the video game.

DX11 Cost to Performance Ratio

For this article Benchmark Reviews has included cost per FPS for graphics performance results. An average of the five least expensive product prices is calculated, which does not factor tax, freight, promotional offers, or rebates into the cost. All prices reflect product series components, and do not represent any specific manufacturer, model, or brand. The retail prices for each product were obtained from NewEgg.com on 11-September-2010:

Intel X58-Express Test System

DirectX-10 Benchmark Applications

DirectX-11 Benchmark Applications

Video Card Test Products

DX10: 3DMark Vantage

3DMark Vantage is a PC benchmark suite designed to test DirectX-10 graphics card performance. FutureMark 3DMark Vantage is the latest addition to the 3DMark benchmark series built by FutureMark Corporation. Although 3DMark Vantage requires NVIDIA PhysX to be installed for program operation, only the CPU/Physics test relies on this technology. 3DMark Vantage offers benchmark tests focusing on GPU, CPU, and Physics performance. Benchmark Reviews uses the two GPU-specific tests for grading video card performance: Jane Nash and New Calico. These tests isolate graphical performance, and remove processor dependence from the benchmark results.

3DMark Vantage GPU Test: Jane Nash

Of the two GPU tests 3DMark Vantage offers, the Jane Nash performance benchmark is slightly less demanding. In a short video scene the special agent escapes a secret lair by water, nearly losing her shirt in the process. Benchmark Reviews tests this DirectX-10 scene at 1680x1050 and 1920x1200 resolutions, and uses Extreme quality settings with 8x anti-aliasing and 16x anisotropic filtering. The 1:2 scale is utilized, and is the highest this test allows. By maximizing the processing levels of this test, the scene creates the highest level of graphical demand possible and sorts the strong from the weak.

Jane Nash Extreme Quality Settings

Cost Analysis: Jane Nash (Extreme)

3DMark Vantage GPU Test: New Calico

New Calico is the second GPU test in the 3DMark Vantage test suite. Of the two GPU tests, New Calico is the more demanding. In a short video scene featuring a galactic battleground, there is a massive display of busy objects across the screen. Benchmark Reviews tests this DirectX-10 scene at 1680x1050 and 1920x1200 resolutions, and uses Extreme quality settings with 8x anti-aliasing and 16x anisotropic filtering. The 1:2 scale is utilized, and is the highest this test allows. Using the highest graphics processing level available allows our test products to separate themselves and stand out (if possible).

New Calico Extreme Quality Settings

Cost Analysis: New Calico (Extreme)

Test Summary: AMD's Radeon HD 5750 struggles to compete with NVIDIA's GeForce GTS 450 in the Jane Nash and New Calico tests, performing several FPS below its price point to create an expensive cost per frame value. 3DMark Vantage has the Radeon HD 5770 and the GeForce GTS 450 splitting the results. According to the Jane Nash test, NVIDIA's GeForce GTS 450 1GB model slightly trails the 5770 by 1.1 FPS. In the New Calico test we see the GTS 450 overtake the Radeon HD 5770 by 0.6 FPS. Although the price tags on each product are similar, their cost per frame of video performance is not. ATI's Radeon HD 5770 costs $9.68 per frame on average, compared to $8.43 per frame for the GTS 450. The results speak for themselves here, and while FPS rates are comparable it seems that NVIDIA boosts value beyond cost per frame with their 3D-Vision, PhysX, CUDA, and 32x CSAA feature support. SLI results tease you with the potential of two cards, but our NVIDIA GeForce GTS 450 SLI Performance Scaling article reveals the full details.
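The cost-per-frame values in these summaries follow the method described in the test methodology: average the five least expensive listings for a product series, then divide by the measured frame rate. The sketch below shows that calculation with made-up numbers; the price list and the frame rate are hypothetical placeholders, not the NewEgg prices or benchmark results used in this article.

```python
def average_of_cheapest(prices, count=5):
    """Mean of the `count` lowest prices (tax, freight, and rebates excluded)."""
    cheapest = sorted(prices)[:count]
    return sum(cheapest) / len(cheapest)


def cost_per_frame(prices, average_fps):
    """Dollars paid for each frame per second of benchmark performance."""
    return average_of_cheapest(prices) / average_fps


# Hypothetical listings for one product series, in USD.
listings = [130, 130, 135, 135, 140, 150, 160]
hypothetical_fps = 16.0  # placeholder frame rate, not a result from this review

print(f"Average of five cheapest: ${average_of_cheapest(listings):.2f}")
print(f"Cost per frame: ${cost_per_frame(listings, hypothetical_fps):.2f}")
```

A lower figure means more performance for the money, which is how two cards with similar price tags can end up with noticeably different value.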

DX10: Crysis Warhead

Crysis Warhead is an expansion pack based on the original Crysis video game. Crysis Warhead is set in the future, where an ancient alien spacecraft has been discovered beneath the Earth on an island east of the Philippines. Crysis Warhead uses a refined version of the CryENGINE2 graphics engine. Like Crysis, Warhead uses the Microsoft Direct3D 10 (DirectX-10) API for graphics rendering.

Benchmark Reviews uses the HOC Crysis Warhead benchmark tool to test and measure graphic performance using the Airfield 1 demo scene. This short test places a high amount of stress on a graphics card because of detailed terrain and textures, but also because of the test settings used. Using the DirectX-10 test with Very High Quality settings, the Airfield 1 demo scene receives 4x anti-aliasing and 16x anisotropic filtering to create maximum graphic load and separate the products according to their performance. With these settings, only the most powerful graphics cards are expected to perform well in our Crysis Warhead benchmark tests. DirectX-11 extensions are not supported in Crysis Warhead, and SSAO is not an available option.

Crysis Warhead GPU-Appropriate Quality Settings

Cost Analysis: Crysis Warhead (Normal)

Test Summary: With 'high' quality settings, Crysis Warhead is still borderline 'extreme' for lower-end video cards. This forces the Radeon HD 5750 down to 21 FPS, while the GeForce GTS 450 and Radeon HD 5770 match each other at 25 FPS. Pushed with a massive overclock, the GTS 450 earns an additional 5 FPS for a total of 30 FPS in this test. Now let's see how higher settings and SLI impact performance...

Crysis Warhead Extreme Quality Settings

Cost Analysis: Crysis Warhead (Extreme)

Test Summary: The CryENGINE2 graphics engine used in Crysis Warhead responds well to both ATI and NVIDIA products, which allows the 1GB NVIDIA GeForce GTS 450 to nearly match the performance of ATI's Radeon HD 5770 at 1680x1050 and beat it by 2 FPS once overclocked. This gives the GeForce GTS 450 a favorable price to performance ratio, although the GTX 460 still delivers maximum value. For die-hard fans of Crysis, two GeForce GTS 450s in SLI offer an excellent performance advantage over the more-expensive Radeon HD 5850.


| Graphics Card | GeForce 9800 GTX+ | Radeon HD4890 | GeForce GTX285 | Radeon HD5750 | Radeon HD5770 | GeForce GTS450 | Radeon HD5830 | GeForce GTX460 | Radeon HD5850 | GeForce GTX470 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| GPU Cores | 128 | 800 | 240 | 720 | 800 | 192 | 1120 | 336 | 1440 | 448 |
| Core Clock (MHz) | 740 | 850 | 670 | 700 | 850 | 783 | 800 | 675 | 725 | 608 |
| Shader Clock (MHz) | 1836 | N/A | 1550 | N/A | N/A | 1566 | N/A | 1350 | N/A | 1215 |
| Memory Clock (MHz) | 1100 | 975 | 1300 | 1150 | 1200 | 902 | 1000 | 900 | 1000 | 837 |
| Memory Amount | 512MB GDDR3 | 1024MB GDDR5 | 1024MB GDDR3 | 1024MB GDDR5 | 1024MB GDDR5 | 1024MB GDDR5 | 1024MB GDDR5 | 768MB GDDR5 | 1024MB GDDR5 | 1280MB GDDR5 |
| Memory Interface | 256-bit | 128-bit | 512-bit | 128-bit | 128-bit | 128-bit | 256-bit | 192-bit | 256-bit | 320-bit |

DX10: Resident Evil 5

Built upon an advanced version of Capcom's proprietary MT Framework game engine to deliver DirectX-10 graphic detail, Resident Evil 5 offers gamers non-stop action similar to Devil May Cry 4, Lost Planet, and Dead Rising. The MT Framework is an exclusive seventh generation game engine built to be used with games developed for the PlayStation 3 and Xbox 360, and PC ports. MT stands for "Multi-Thread", "Meta Tools" and "Multi-Target". Games using the MT Framework are originally developed on the PC and then ported to the other two console platforms.

On the PC version of Resident Evil 5, both DirectX 9 and DirectX-10 modes are available for Microsoft Windows XP and Vista Operating Systems. Microsoft Windows 7 will play Resident Evil with backwards compatible Direct3D APIs. Resident Evil 5 is branded with the NVIDIA The Way It's Meant to be Played (TWIMTBP) logo, and receives NVIDIA GeForce 3D Vision functionality enhancements.

NVIDIA and Capcom offer the Resident Evil 5 benchmark demo for free download from their website, and Benchmark Reviews encourages visitors to compare their own results to ours. Because the Capcom MT Framework game engine is very well optimized and produces high frame rates, Benchmark Reviews uses the DirectX-10 version of the test at 1920x1200 resolution. Super-High quality settings are configured, with 8x MSAA post processing effects for maximum demand on the GPU. Test scenes from Area #3 and Area #4 require the most graphics processing power, and the results are collected for the chart illustrated below.

- Resident Evil 5 Benchmark

- Extreme Settings: (Super-High Quality, 8x MSAA)

Resident Evil 5 Extreme Quality Settings

Resident Evil 5 has really proved how well the proprietary Capcom MT Framework game engine can look with DirectX-10 effects. The Area 3 and 4 tests are the most graphically demanding from this free downloadable demo benchmark, but the results make it appear that the Area #3 test scene performs better with NVIDIA GeForce products compared to the Area #4 scene that levels the field and gives ATI Radeon GPUs a fair representation.

Cost Analysis: Resident Evil 5 (Area 4)

Test Summary: It's unclear whether Resident Evil 5 graphics performance favors ATI or NVIDIA, especially since the two test scenes alternate favoritism. Although this benchmark tool is distributed directly from NVIDIA, and Forceware drivers likely have optimizations written for the Resident Evil 5 game, there doesn't appear to be any decisive tilt towards GeForce products over ATI Radeon counterparts from within the game itself. Test scene #3 certainly favors Fermi GPUs, which lead every other product tested. In test scene #4 the Radeon video card series appears more competitive, and the 1GB GeForce GTS 450 doesn't outperform a Radeon HD 5770 the way it did in scene #3. Nevertheless, the GTS 450 holds a slight competitive edge thanks to its better cost per frame.

DX10: Street Fighter IV

Capcom's Street Fighter IV is part of the now-famous Street Fighter series that began in 1987. The 2D Street Fighter II was one of the most popular fighting games of the 1990s, and now gets a 3D face-lift to become Street Fighter 4. The Street Fighter 4 benchmark utility was released as a novel way to test your system's ability to run the game. It uses a few dressed-up fight scenes where combatants fight against each other using various martial arts disciplines. Feet, fists and magic fill the screen with a flurry of activity. Due to the rapid pace, varied lighting and the use of music this is one of the more enjoyable benchmarks.

Street Fighter IV uses a proprietary Capcom SF4 game engine, which is enhanced over previous versions of the game. In terms of 3D graphical demand, Street Fighter IV is considered very low-end for most desktop GPUs. While modern desktop computers with discrete graphics have no problem playing Street Fighter IV at its highest graphical settings, integrated and mobile GPUs have a difficult time producing playable frame rates with the lowest settings configured.

- Street Fighter IV Benchmark

- Extreme Settings: (Highest Quality, 8x AA, 16x AF, Parallel Rendering)

Street Fighter IV Extreme Quality Settings

Cost Analysis: Street Fighter IV (Extreme)

Test Summary: Street Fighter IV is a fast-action game that doesn't require a large video frame buffer for its rather small maps, but instead prefers powerful graphics processing to keep up with commands. Configured with the highest quality settings and maximum post-processing effects, Street Fighter IV still delivers excellent frame rates on our lower-end video cards. AMD's Radeon HD 5750 produced 62.4 FPS, which was immediately overtaken by NVIDIA's GeForce GTS 450. With 90.7 FPS before any overclock, the GTS 450 surpassed the Radeon HD 5770's 80.0 FPS and then extended its lead with 99.1 FPS once overclocked. Street Fighter IV is one of those games that relies more on intense player involvement than special effects, although you wouldn't know it from watching the colorful players and backgrounds.
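To put the frame rates in this summary into relative terms, a small helper can express each gap as a percentage. This is only a sketch: the function name is our own invention, and the inputs are the four frame rates quoted in the summary above.

```python
def percent_gain(new_fps: float, base_fps: float) -> float:
    """Relative improvement of new_fps over base_fps, in percent."""
    return (new_fps - base_fps) / base_fps * 100.0


# Frame rates quoted in the Street Fighter IV test summary.
results = {
    "Radeon HD 5750": 62.4,
    "Radeon HD 5770": 80.0,
    "GeForce GTS 450": 90.7,
    "GeForce GTS 450 (overclocked)": 99.1,
}

stock = results["GeForce GTS 450"]
for card, fps in results.items():
    if card != "GeForce GTS 450":
        # Positive: the stock GTS 450 is faster; negative: it is slower.
        print(f"Stock GTS 450 vs {card}: {percent_gain(stock, fps):+.1f}%")
```

Percentages make results from different games easier to compare than raw FPS gaps, since a 10 FPS lead means far less at 90 FPS than it does at 25 FPS.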

DX11: Aliens vs Predator

Aliens vs. Predator is a science fiction first-person shooter video game, developed by Rebellion, and published by Sega for Microsoft Windows, Sony PlayStation 3, and Microsoft Xbox 360. Aliens vs. Predator utilizes Rebellion's proprietary Asura game engine, which had previously found its way into Call of Duty: World at War and Rogue Warrior. The self-contained benchmark tool is used for our DirectX-11 tests, which push the Asura game engine to its limit.

In our benchmark tests, Aliens vs. Predator was configured to use the highest quality settings with 4x AA and 16x AF. DirectX-11 features such as Screen Space Ambient Occlusion (SSAO) and tessellation have also been included, along with advanced shadows.

- Aliens vs Predator

- GPU-Appropriate Settings: (High Quality, Low Shadows, 2x AA, 16x AF, SSAO, Tessellation)

- Extreme Settings: (Very High Quality, 4x AA, 16x AF, SSAO, Tessellation, Advanced Shadows)

Aliens vs Predator GPU-Appropriate Quality Settings

Cost Analysis: Aliens vs Predator (Normal)

Test Summary: Configured with high textures, low shadows, and reduced post-processing effects, the intense action scenes in Aliens vs Predator still strained frame rates on lower-end cards. These settings position the GeForce GTS 450 (25.4 FPS) directly between the Radeon HD 5750 (22.2 FPS) and Radeon HD 5770 (27.3 FPS). Once overclocked the GTS 450 nearly caught up with the 5770 at 26.6 FPS, although stock speeds were already enough for the GTS 450 to produce the best cost per frame of the group.

Aliens vs Predator Extreme Quality Settings

Cost Analysis: Aliens vs Predator (Extreme)

Test Summary: Aliens vs Predator may use the well-known Asura game engine, but DirectX-11 extensions push the graphical demand on this game to levels eclipsed only by Mafia-II or Metro 2033 (and possibly equivalent to DX10 Crysis). With an unbiased appetite for raw DirectX-11 graphics performance, Aliens vs Predator accepts ATI and NVIDIA products as equal contenders. When high-strain SSAO is called into action, the 1GB GeForce GTS 450 demonstrates how well Fermi is suited for DX11, coming within a frame of the more expensive ATI Radeon HD 5770 at stock speeds but pulling ahead to 20.5 FPS when overclocked. Because performance is so close between the two products, NVIDIA's less expensive GTS 450 enjoys a decent cost per FPS advantage. Combined into a GTS 450 SLI set, two video cards match performance with the Radeon HD 5850 in Aliens vs Predator.

DX11: Battlefield Bad Company 2

The Battlefield franchise has been known to demand a lot from PC graphics hardware. DICE (Digital Illusions CE) has incorporated their Frostbite-1.5 game engine and its Destruction-2.0 feature set into Battlefield: Bad Company 2. The game features destructible environments using Frostbite Destruction-2.0, and adds gravitational bullet drop effects for projectiles shot from weapons at a long distance. The Frostbite-1.5 game engine used in Battlefield: Bad Company 2 consists of DirectX-10 primary graphics, with improved performance and softened dynamic shadows added for DirectX-11 users.

At the time Battlefield: Bad Company 2 was published, DICE was also working on the Frostbite-2.0 game engine. This upcoming engine will include native support for DirectX-10.1 and DirectX-11, as well as parallelized processing support for 2-8 parallel threads. This will improve performance for users with an Intel Core-i7 processor. Unfortunately, the Extreme Edition Intel Core i7-980X six-core CPU with twelve threads will not see full utilization.

In our benchmark tests of Battlefield: Bad Company 2, the first three minutes of action in the single-player raft night scene are captured with FRAPS. Relative to the online multiplayer action, these frame rate results are nearly identical to daytime maps with the same video settings. The Frostbite-1.5 game engine in Battlefield: Bad Company 2 appears to equalize our test set of video cards, and despite AMD's sponsorship of the game it still plays well using any brand of graphics card.
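For readers who want to reproduce this kind of timed capture, the sketch below shows one way to turn a list of per-frame render times into the figures a chart needs. It assumes the frame times are already available as durations in milliseconds; how a given capture tool lays out its log files is not covered here, and the function name and sample data are hypothetical.

```python
def summarize_frame_times(frame_times_ms: list[float]) -> dict[str, float]:
    """Average and worst-case FPS from per-frame render times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("no frames captured")
    total_seconds = sum(frame_times_ms) / 1000.0
    return {
        "duration_s": total_seconds,
        # Average FPS is frames divided by elapsed time, not the mean of
        # per-frame FPS values, which would overweight fast frames.
        "avg_fps": len(frame_times_ms) / total_seconds,
        "min_fps": 1000.0 / max(frame_times_ms),
    }


# Hypothetical capture: mostly 25 ms frames with a few 40 ms spikes.
sample = [25.0] * 90 + [40.0] * 10
print(summarize_frame_times(sample))
```

Reporting the slowest frame alongside the average is a design choice worth keeping: two cards with the same average can feel very different if one of them stutters.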

- Battlefield: Bad Company 2

- GPU-Appropriate Settings: (High Quality, Low Shadows, HBAO, 2x AA, 4x AF, 180s Fraps Single-Player Intro Scene)

- Extreme Settings: (Highest Quality, HBAO, 8x AA, 16x AF, 180s Fraps Single-Player Intro Scene)

Battlefield Bad Company 2 GPU-Appropriate Quality Settings

Cost Analysis: Battlefield: Bad Company 2 (Normal)

Test Summary: Using GPU-appropriate settings similar to those gamers will select, the Frostbite-1.5 game delivers great visual quality on products at the lower end. The Radeon HD 5750 performs well once shadows and post-processing effects are dialed down, producing 37.8 FPS. NVIDIA's GeForce GTS 450 takes advantage of these less-strenuous settings, and builds a 7.6 FPS lead over the 5750. Once overclocked the GTS 450 tacks on an additional 9 FPS, and is able to outperform the Radeon HD 5770 with ease.

Battlefield Bad Company 2 Extreme Quality Settings

Cost Analysis: Battlefield: Bad Company 2 (Extreme)

Test Summary: Our extreme-quality tests use maximum settings for Battlefield: Bad Company 2, so users who dial down the anti-aliasing or use a lower resolution will see much better frame rates. BF:BC2 gives the ATI Radeon HD 5770 a decent 6.2 FPS lead over the less expensive GTS 450, yet despite that drop in performance the cost per FPS amounts to roughly the same figure for both cards. Once overclocked, the GTS 450 comes back to match the Radeon HD 5770 with 39.0 FPS. Although not a focus of this review, GeForce GTS 450 SLI scaling delivers an impressive 94% of the combined frame rate of two individual graphics cards, giving the more expensive Radeon HD 5850 a run for the money.
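One reading of the SLI scaling figure above is the paired frame rate divided by twice the single-card frame rate. The sketch below follows that reading; the function name is our own, and the two frame rates are hypothetical values picked so the result lands on 94%, not measurements from this test.

```python
def sli_scaling(single_fps: float, sli_fps: float, cards: int = 2) -> float:
    """SLI frame rate as a fraction of `cards` times the single-card rate."""
    if single_fps <= 0 or cards < 1:
        raise ValueError("single_fps must be positive and cards at least 1")
    return sli_fps / (single_fps * cards)


# Hypothetical frame rates chosen to reproduce a 94% figure.
single, paired = 30.0, 56.4
print(f"Scaling: {sli_scaling(single, paired):.0%} of two cards combined")
print(f"Gain over one card: {paired / single - 1:.0%}")
```

Under this definition 100% would mean the second card doubles performance, so anything above 90% indicates very little overhead from splitting the work.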

DX11: BattleForge

BattleForge is a free Massively Multiplayer Online Role Playing Game (MMORPG) developed by EA Phenomic with DirectX-11 graphics capability. Combining strategic cooperative battles, the community of MMO games, and trading card gameplay, BattleForge players are free to put their creatures, spells and buildings into whatever combinations they see fit. These units are represented in the form of digital cards from which you build your own unique army. With minimal resources and a custom tech tree to manage, the gameplay is unbelievably accessible and action-packed.

Benchmark Reviews uses the built-in graphics benchmark to measure performance in BattleForge, using Very High quality settings (detail) and 8x anti-aliasing with auto multi-threading enabled. BattleForge is one of the first titles to take advantage of DirectX-11 in Windows 7, and offers a very robust color range throughout the busy battleground landscape. The charted results illustrate how performance measures up between video cards when Screen Space Ambient Occlusion (SSAO) is enabled.

- BattleForge v1.2

- GPU-Appropriate Settings: (High Quality, Medium Shadows, 2x Anti-Aliasing, Auto Multi-Thread)

- Extreme Settings: (Very High Quality, 8x Anti-Aliasing, Auto Multi-Thread)

BattleForge GPU-Appropriate Quality Settings

Cost Analysis: BattleForge (Normal)

Test Summary: Sometimes a video card handles light workloads better than intensive processing routines, and AMD knows their product limitations quite well. A combination of lower settings and AMD co-development allows BattleForge to give Radeon products a boost in frame rate performance. Without heavy post-processing effects or SSAO to weigh down the GPUs, BattleForge positions the Radeon HD 5750 ahead of the GeForce GTS 450. Things look a lot different when the settings are turned up high...

BattleForge Extreme Quality Settings

Cost Analysis: BattleForge (Extreme)

Test Summary: With settings turned to their highest quality, the NVIDIA GeForce GTS 450 closely matched the performance of the more expensive Radeon HD 5770, and delivers a generous lead in cost per frame value. Overclocked to 950MHz, the GTS 450 improved its position to 29.0 FPS and beat the Radeon HD 5830. Two GTS 450s combined into SLI actually yield a 13.4% improvement over the combined FPS of two separate cards, and also outperform the Radeon HD 5850 by 4.4 FPS. Read our full NVIDIA GeForce GTS 450 SLI Performance Scaling report to see what else a pair of these cards can beat.

DX9+SSAO: Mafia II

Mafia II is a single-player third-person action shooter developed by 2K Czech for 2K Games, and is the sequel to Mafia: The City of Lost Heaven released in 2002. Players assume the life of World War II veteran Vito Scaletta, the son of a small Sicilian family that immigrates to Empire Bay. Growing up in the slums of Empire Bay teaches Vito about crime, and he's forced to join the Army in lieu of jail time. After sustaining wounds in the war, Vito returns home and quickly finds trouble as he again partners with his childhood friend and accomplice Joe Barbaro. Vito and Joe combine their passion for fame and riches to take on the city, and work their way to the top in Mafia II.

Mafia II is a DirectX-9/10/11 compatible PC video game built on 2K Czech's proprietary Illusion game engine, which succeeds the LS3D game engine used in Mafia: The City of Lost Heaven. In our Mafia-II Video Game Performance article, Benchmark Reviews explored characters and gameplay while illustrating how well this game delivers APEX PhysX features on both ATI and NVIDIA products. Thanks to DirectX-11 APEX PhysX extensions that can be processed by the system's CPU, Mafia II offers gamers equal access to high-detail physics regardless of video card manufacturer.

- Mafia II

- GPU-Appropriate Settings: (Antialiasing, 8x AF, Low Shadow Quality, High Detail, Ambient Occlusion, Medium APEX PhysX)

Mafia II GPU-Appropriate Quality Settings

Cost Analysis: Mafia II (Normal)

Test Summary: Of all the video games presently available for DirectX-11 platforms, Mafia II is by far one of the most detailed and feature-rich. 2K Games were wise to enable PhysX processing to be done on the CPU for Radeon users, which lets the game look its best regardless of branding. Still, we already know how much better video game physics are calculated by hundreds of GPU cores compared to a pair of CPU cores. As a result, NVIDIA's GeForce GTS 450 allows the Fermi GF106 GPU to produce great quality APEX PhysX effects while displaying frame rates superior to AMD's Radeon HD 5750 and HD 5770. In a test clearly dominated by the GeForce GTS 450, the overclocked GF106 adds enough extra power to enjoy considerably higher quality settings and post-processing effects than comparable Radeon products can manage.

On a side note, Mafia II is absolutely phenomenal with 3D-Vision... and with its built-in multi-monitor profiles and bezel correction already factored in, this game is well suited for 3D-Vision Surround. Combining two GeForce GTS 450s into SLI allowed this game to play at 5760 x 1080 resolution across three monitors using the same settings as tested above, delivering a thoroughly impressive experience. If you already own a 3D Vision kit and 120Hz monitor, Mafia II was built with 3D Vision in mind. If purchasing the equipment is within your reach (I suggest the ASUS VG236H model that comes with an NVIDIA 3D-Vision kit enclosed), you owe it to yourself to experience this game the way it was intended: in 3D.

DX11: Metro 2033

Metro 2033 is an action-oriented video game with a combination of survival horror, and first-person shooter elements. The game is based on the novel Metro 2033 by Russian author Dmitry Glukhovsky. It was developed by 4A Games in Ukraine and released in March 2010 for Microsoft Windows. Metro 2033 uses the 4A game engine, developed by 4A Games. The 4A Engine supports DirectX-9, 10, and 11, along with NVIDIA PhysX and GeForce 3D Vision.

The 4A engine is multi-threaded such that only PhysX has a dedicated thread, and it uses a task model without any pre-conditioning or pre/post-synchronizing, allowing tasks to be done in parallel. The engine can utilize a deferred shading pipeline and uses tessellation for greater performance. It also offers HDR (complete with blue shift), real-time reflections, color correction, film grain and noise, and multi-core rendering.

Metro 2033 features superior volumetric fog, double PhysX precision, object blur, sub-surface scattering for skin shaders, parallax mapping on all surfaces, and greater geometric detail with less aggressive LODs. Using PhysX, the engine supports destructible environments, cloth and water simulations, and particles that can be fully affected by environmental factors.

NVIDIA has been diligently working to promote Metro 2033, and for good reason: it is the most demanding PC video game we've ever tested. When their flagship GeForce GTX 480 struggles to produce 27 FPS with DirectX-11 anti-aliasing turned to its lowest setting, you know that only the strongest graphics processors will generate playable frame rates. All of our tests enable Advanced Depth of Field and Tessellation effects, but disable advanced PhysX options.

- Metro 2033

- GPU-Appropriate Settings: (High Quality, AAA, 4x AF, Tessellation, Benchmark Tool)

- Extreme Settings: (Very-High Quality, AAA, 16x AF, Advanced DoF, Tessellation, 180s Fraps Chase Scene)

Metro 2033 GPU-Appropriate Quality Settings

Cost Analysis: Metro 2033 (Normal)

Test Summary: There's no way to ignore the graphical demands of Metro 2033, and only the most powerful GPUs will deliver a decent visual experience unless you're willing to seriously tone down the settings. Even when these settings are turned down, Metro 2033 is a power-hungry video game that crushes frame rates. NVIDIA's GeForce GTS 450 was able to outperform the Radeon HD 5750, but needed the added boost of an overclock to match the Radeon HD 5770. Despite the difference in frame rates, all three entry-level products offer similar price to performance values. Quality settings will need to be reduced to medium levels and advanced depth of field disabled for adequate gameplay performance using the GTS 450, although PhysX effects may be worth the small performance penalty. The test above used the new Metro 2033 benchmark tool, while the 'extreme' tests below use the more intensive 'Chase' level with Fraps...

Metro 2033 Extreme Quality Settings

Cost Analysis: Metro 2033 (Extreme)

Test Summary: The GeForce GTS 450 survived our stress test despite using Advanced Depth of Field and Tessellation effects. While stock GTS 450 performance trailed the Radeon HD 5770 by 2.3 FPS, cost per frame value was very similar, and overclocking allowed the GTS 450 to match the 5770 at 16 FPS. As we've seen in other tests, placing two GTS 450s together in SLI delivered performance equal to or greater than a single Radeon HD 5850. For reference, two GeForce GTX 480s combined in SLI produce only 46 FPS in the 'Chase' level used for producing benchmark results. Metro 2033 only offers advanced PhysX options to NVIDIA GeForce video cards.

DX11: Unigine Heaven 2.1

The Unigine "Heaven 2.1" benchmark is a free, publicly available tool that unleashes the graphics capabilities of DirectX-11 on Windows 7 or updated Vista operating systems. It reveals the enchanting magic of floating islands with a tiny village hidden in the cloudy skies. With the interactive mode, the experience of exploring this intricate world is within reach. Through its advanced renderer, Unigine is one of the first to showcase art assets with tessellation, bringing compelling visual finesse, utilizing the technology to its full extent, and exhibiting the possibilities of enriching 3D gaming.

The distinguishing feature of the Unigine Heaven benchmark is hardware tessellation, a scalable technology for automatically subdividing polygons into smaller and finer pieces, so that developers can give their games a more detailed look at almost no cost in performance. Thanks to this procedure, the detail of the rendered image approaches the boundary of what the eye accepts as real.
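The subdivision idea itself is easy to picture in code. The sketch below is a CPU-side illustration only, using plain midpoint subdivision on a single flat triangle; real DirectX-11 hardware tessellation runs on the GPU with adjustable tessellation factors and typically displaces the new vertices, none of which is modeled here. All names in the example are our own.

```python
Point = tuple[float, float, float]
Triangle = tuple[Point, Point, Point]


def midpoint(a: Point, b: Point) -> Point:
    """Point halfway between a and b."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)


def subdivide(tri: Triangle) -> list[Triangle]:
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(mesh: list[Triangle], levels: int) -> list[Triangle]:
    """Apply midpoint subdivision `levels` times to every triangle."""
    for _ in range(levels):
        mesh = [small for tri in mesh for small in subdivide(tri)]
    return mesh


coarse = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
for level in range(4):
    # Triangle count grows fourfold with each subdivision level.
    print(level, len(tessellate(coarse, level)))
```

The fourfold growth per level is why tessellation settings have such a large effect on frame rates in the tests that follow.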

Although Heaven-2.1 was recently released and used for our DirectX-11 tests, the benchmark results were extremely close to those obtained with Heaven-1.0 testing. Since only DX11-compliant video cards will properly test on the Heaven benchmark, only those products that meet the requirements have been included.

- Unigine Heaven Benchmark 2.1

- GPU-Appropriate Settings: (High Quality, Moderate Tessellation, 4x AF, 2x AA)

- Extreme Settings: (High Quality, Normal Tessellation, 16x AF, 4x AA)

Heaven 2.1 GPU-Appropriate Quality Settings

Cost Analysis: Heaven 2.1 (Normal)

Test Summary: Reviewers like to say "Nobody plays a benchmark", but it seems evident that we can expect to see great things come from a graphics tool this detailed. For now though, those details only come by way of DirectX-11 video cards. Since Heaven is a very demanding benchmark tool, our entry-level video cards require more appropriate settings: moderate tessellation and lower post-processing effects. Reducing quality and effects allowed the Radeon HD 5750 to produce 17.8 FPS, which was subsequently outperformed with 23.7 FPS from the GTS 450. Trailing behind by 1.3 FPS was the Radeon HD 5770, which slips lower when compared to 27.9 FPS produced by an overclocked GTS 450. Now let's see how higher settings and SLI impact performance...

Heaven 2.1 Extreme Quality Settings

Cost Analysis: Unigine Heaven (Extreme)

Test Summary: Our 'extreme' test results with the Unigine Heaven benchmark tool appear to deliver fair comparisons of DirectX-11 graphics cards when set to higher quality levels. NVIDIA's GeForce GTS 450 1GB outperforms the Radeon HD 5770 by a mere 1.2 FPS, but that same less-expensive GTS 450 delivers a crushing advantage over the 5770 in terms of price to performance ratio. Looked at differently, the GeForce GTS 450 delivers a 7% video performance advantage while producing a 24% lead in price to performance value. Overclocked, the GTS 450 further extends its lead over the Radeon HD 5770 by 15%. SLI performance scaling has come a long way, and a pair of GeForce GTS 450s in SLI dominates the Radeon HD 5850 by nearly 11 FPS.

| Graphics Card | GeForce 9800 GTX+ | Radeon HD4890 | GeForce GTX285 | Radeon HD5750 | Radeon HD5770 | GeForce GTS450 | Radeon HD5830 | GeForce GTX460 | Radeon HD5850 | GeForce GTX470 |
|---|---|---|---|---|---|---|---|---|---|---|
| GPU Cores | 128 | 800 | 240 | 720 | 800 | 192 | 1120 | 336 | 1440 | 448 |
| Core Clock (MHz) | 740 | 850 | 670 | 700 | 850 | 783 | 800 | 675 | 725 | 608 |
| Shader Clock (MHz) | 1836 | N/A | 1550 | N/A | N/A | 1566 | N/A | 1350 | N/A | 1215 |
| Memory Clock (MHz) | 1100 | 975 | 1300 | 1150 | 1200 | 902 | 1000 | 900 | 1000 | 837 |
| Memory Amount | 512 MB GDDR3 | 1024 MB GDDR5 | 1024 MB GDDR3 | 1024 MB GDDR5 | 1024 MB GDDR5 | 1024 MB GDDR5 | 1024 MB GDDR5 | 768 MB GDDR5 | 1024 MB GDDR5 | 1280 MB GDDR5 |
| Memory Interface | 256-bit | 128-bit | 512-bit | 128-bit | 128-bit | 128-bit | 256-bit | 192-bit | 256-bit | 320-bit |

NVIDIA APEX PhysX Enhancements

Mafia II is the first PC video game title to include the new NVIDIA APEX PhysX framework, a powerful feature set that only GeForce video cards are built to deliver. While console versions will make use of PhysX, only the PC version supports NVIDIA's APEX PhysX physics modeling engine, which adds the following features: APEX Destruction, APEX Clothing, APEX Vegetation, and APEX Turbulence. PhysX helps make the movement of objects such as cloth and debris more fluid and lifelike. In this section, Benchmark Reviews details the differences made with and without APEX PhysX enabled.

We begin with a scene from the Mafia II benchmark test, which has the player pinned down behind a brick column as the enemy shoots at him. Examine the image below, which was taken with a Radeon HD 5850 configured with all settings turned to their highest and APEX PhysX support disabled:

No PhysX = Cloth Blending and Missing Debris

Notice from the image above that when PhysX is disabled there is no broken stone debris on the ground. Cloth from the foreground character's trench coat blends into his leg and remains in a static position relative to his body, as does the clothing on other (AI) characters. Now inspect the image below, which uses the GeForce GTX 460 with APEX PhysX enabled:

Realistic Cloth and Debris - High Quality Settings With PhysX

With APEX PhysX enabled, the cloth neatly sways with the contour of a character's body, and doesn't bleed into solid objects such as body parts. Additionally, APEX Clothing features improve realism by adding gravity and wind effects to clothing, allowing characters to look like they would in similar real-world environments.

Burning Destruction Smoke and Vapor Realism

Flames aren't exactly new to video games, but smoke plumes and heat vapor that mimic realistic movement have never looked as real as they do with APEX Turbulence. Fire and explosions added into a destructible environment are a potent combination for virtual-world mayhem, showcasing the new PhysX APEX Destruction feature.

Exploding Glass Shards and Bursting Flames

NVIDIA PhysX has changed video game explosions into something worthy of cinema-level special effects. Bursting windows explode into several unique shards of glass, and destroyed crates bust into splintered kindling. Smoke swirls and moves as if there's an actual air current, and flames move out towards open space all on their own. Surprisingly, there is very little impact on FPS performance with APEX PhysX enabled on GeForce video cards, and very little penalty for changing from medium (normal) to high settings.

NVIDIA 3D-Vision Effects

Readers familiar with Benchmark Reviews have undoubtedly heard of NVIDIA GeForce 3D Vision technology; if not from our review of the product, then for the Editor's Choice Award it's earned or the many times I've personally mentioned it in our articles. Put simply: it changes the game. 2010 has been a break-out year for 3D technology, and PC video games are leading the way. Mafia II expands on the three-dimensional effects, and improves the 3D-Vision experience with out-of-screen effects. For readers unfamiliar with the technology, 3D-Vision is a feature only available to NVIDIA GeForce video cards.

The first thing gamers should be aware of is the performance penalty for using 3D-Vision with a high-demand game like Mafia II. Using a GeForce GTX 480 video card for reference, currently the most powerful single-GPU graphics solution available, we experienced frame rates of up to 33 FPS with all settings configured to their highest and APEX PhysX set to high. However, when 3D Vision is enabled the video frame rate usually decreases by about 50%. This is no longer a hard-and-fast rule, thanks to '3D Vision Ready' game titles that offer performance optimizations. Mafia II proved that the 3D Vision performance penalty can be as little as 30% with a single GeForce GTX 480 video card, or a mere 11% in SLI configuration. NVIDIA Forceware drivers will guide players to make custom-recommended adjustments specifically for each game they play, but PhysX and anti-aliasing will still reduce frame rate performance.
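To put those percentages into frame-rate terms, the short sketch below applies the typical 50% penalty and the 30% single-card penalty to the 33 FPS peak quoted above. It is simple arithmetic rather than a new measurement, and the function name is our own. No SLI result is calculated because the SLI baseline frame rate is not stated here.

```python
def fps_after_penalty(baseline_fps: float, penalty_percent: float) -> float:
    """Frame rate that remains after a percentage performance penalty."""
    return baseline_fps * (1.0 - penalty_percent / 100.0)


# Peak frame rate quoted for a single GeForce GTX 480 without 3D Vision.
baseline_fps = 33.0

typical_fps = fps_after_penalty(baseline_fps, 50.0)    # usual 3D Vision cost
optimized_fps = fps_after_penalty(baseline_fps, 30.0)  # Mafia II, single card

print(f"Typical 50% penalty: {typical_fps:.1f} FPS")
print(f"Mafia II 30% penalty: {optimized_fps:.1f} FPS")
```

Since 33 FPS was the best case observed, these results are upper bounds and not expected averages.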

Of course, the out-of-screen effects are worth every dollar you spend on graphics hardware. In the image above, an explosion sends the car's wheel and door flying into the player's face, followed by metal debris and sparks. When you're playing, this certainly helps to catch your attention... and when the objects become bullets passing by you, the added depth of field helps with player awareness.

Combined with APEX PhysX technology, NVIDIA's 3D-Vision brings destructible walls to life. As enemies shoot at the brick column, dirt and dust fly past the player forcing stones to tumble out towards you. Again, the added depth of field can help players pinpoint the origin of enemy threat, and improve response time without sustaining 'confusion damage'.

NVIDIA APEX Turbulence, a new PhysX feature, already adds an impressive level of realism to games (such as with Mafia II pictured in this section). Watching plumes of smoke and flames spill out towards your camera angle helps put you right into the thick of action.

NVIDIA 3D-Vision/3D-Vision Surround is the perfect addition to APEX PhysX technology, and capable video games will prove that these features reproduce lifelike scenery and destruction when they're used together. Glowing embers and fiery shards shooting past you seem very real when 3D-Vision pairs itself with APEX PhysX technology, and there's finally a good reason to overpower the PC's graphics system.

GeForce GTS 450 Temperatures

Benchmark tests are always nice, so long as you care about comparing one product to another. But when you're an overclocker, gamer, or merely a PC hardware enthusiast who likes to tweak things on occasion, there's no substitute for good information. Benchmark Reviews has a very popular guide written on Overclocking Video Cards, which gives detailed instruction on how to tweak a graphics card for better performance. Of course, not every video card has overclocking head room. Some products run so hot that they can't suffer any higher temperatures than they already do. This is why we measure the operating temperature of the video card products we test.

To begin my testing, I use GPU-Z to measure the temperature at idle as reported by the GPU. Next I use FurMark's "Torture Test" to generate maximum thermal load and record GPU temperatures at high-power 3D mode. The ambient room temperature remained at a stable 20°C throughout testing. FurMark does two things extremely well: it drives the thermal output of any graphics processor higher than applications or video games realistically could, and it does so with consistency every time. FurMark works great for testing the stability of a GPU as the temperature rises to the highest possible output. The temperatures discussed below using FurMark are absolute maximum values, and not representative of real-world performance.

NVIDIA GeForce GTS 450 1GB Video Card Temperatures

Maximum GPU thermal thresholds have varied between Fermi GPUs. The GF100 graphics processor (GTX 480/470/465) could withstand 105°C, while the GF104 was a little more sensitive at 104°C. The GF106 apparently suffers from heat intolerance, and begins to downclock the GeForce GTS 450 graphics processor at 95°C. Thankfully, our tests on two different GeForce GTS 450 products indicate that these video cards operate at stone cold temperatures in comparison. Sitting idle at the Windows 7 desktop with a 20°C ambient room temperature, the GeForce GTS 450 rested silently at 29°C. After roughly ten minutes of torture using FurMark's stress test, fan noise was minimal and temperatures plateaued around 65°C.
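The numbers above are easier to compare once they are expressed as a rise over ambient and as headroom below the 95°C downclock point. The sketch below does that arithmetic with the figures quoted in this section. The variable names are our own, and the results describe this open-air FurMark test only.

```python
# Temperatures quoted in this section, in degrees Celsius.
AMBIENT_C = 20    # room temperature during testing
IDLE_C = 29       # GTS 450 idle at the Windows 7 desktop
LOAD_C = 65       # approximate plateau under the FurMark stress test
THROTTLE_C = 95   # point at which the GF106 begins to downclock

idle_rise_c = IDLE_C - AMBIENT_C          # rise over ambient at idle
load_rise_c = LOAD_C - AMBIENT_C          # rise over ambient under load
thermal_headroom_c = THROTTLE_C - LOAD_C  # margin before downclocking

print(f"Idle rise over ambient: {idle_rise_c} C")
print(f"Load rise over ambient: {load_rise_c} C")
print(f"Headroom below throttle point: {thermal_headroom_c} C")
```

Stating results as a rise over ambient makes it easier to estimate behavior in a warmer room, on the rough assumption that the rise stays similar.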

Most new graphics cards from NVIDIA and ATI will expel heated air out externally through exhaust vents, which does not increase the internal case temperature. Our test system is an open-air chassis that allows the video card to depend on its own cooling solution for proper thermal management. Most gamers and PC hardware enthusiasts who use an aftermarket computer case with intake and exhaust fans will usually create a directional airflow current and lower internal temperatures a few degrees below the measurements we've recorded. To demonstrate this, we've built a system to illustrate the...

Best-Case Scenario

Traditional tower-style computer cases position internal hardware so that heat is expelled out through the back of the unit while modern video cards reduce operating temperature with active cooling solutions. This is better than nothing, but there's a fundamental problem: heat rises. Using the transverse mount design on the SilverStone Raven-2 chassis, Benchmark Reviews re-tested the NVIDIA GeForce GTS 450 video card to determine the 'best-case' scenario.

Positioned vertically, the GeForce GTS 450 rested at 29°C, which is identical to temperatures measured in a regular computer case. Pushed to abnormally high levels using the FurMark torture test, NVIDIA's GeForce GTS 450 operated at 66°C, one degree higher than in a standard tower case. After some investigation, it seems that the reference thermal cooling solution is better suited to a horizontal orientation. Although the well-designed Raven-2 computer case offers additional cooling features and has helped to make a difference in other video cards, namely the GeForce GTX 480 and SLI sets, this wasn't the case for the GTS 450... not that it matters with temperatures this low.

In the traditional (horizontal) position, the slightly angled heat-pipe rods use gravity and sintering to draw cooled liquid back down to the base. When positioned in a transverse mount case such as the SilverStone Raven-2, the NVIDIA GeForce GTS 450 heatsink loses optimal effective properties in the lowest heat-pipe rod, because gravity keeps the cool liquid in the lowest portion of the rod within the finsink.

VGA Power Consumption

Life is not as affordable as it used to be, and items such as gasoline, natural gas, and electricity all top the list of resources which have exploded in price over the past few years. Add to this the limit of non-renewable resources compared to current demands, and you can see that the prices are only going to get worse. Planet Earth needs our help, and needs it badly. With forests becoming barren of vegetation and snow capped poles quickly turning brown, the technology industry has a new attitude towards turning "green". I'll spare you the powerful marketing hype that gets sent from various manufacturers every day, and get right to the point: your computer hasn't been doing much to help save energy... at least up until now.

For power consumption tests, Benchmark Reviews utilizes the 80-PLUS GOLD certified OCZ Z-Series Gold 850W PSU, model OCZZ850. This power supply unit has been tested to provide over 90% typical efficiency by Chroma System Solutions; however, our results are not adjusted for consistency. To measure isolated video card power consumption, Benchmark Reviews uses the Kill-A-Watt EZ (model P4460) power meter made by P3 International.

A baseline test is taken without a video card installed inside our test computer system, which is allowed to boot into Windows 7 and rest idle at the login screen before power consumption is recorded. Once the baseline reading has been taken, the graphics card is installed and the system is again booted into Windows and left idle at the login screen. Our final loaded power consumption reading is taken with the video card running a stress test using FurMark. Below is a chart with the isolated video card power consumption (not system total) displayed in Watts for each specified test product:
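As a rough illustration of the method just described, isolating the video card comes down to subtracting the no-card baseline from each wall reading. The wattages in this sketch are hypothetical placeholders and not readings from our test bench, and the function name is our own. In keeping with the note above, no PSU efficiency correction is applied.

```python
def isolated_card_power(system_watts: float, baseline_watts: float) -> float:
    """Video card draw: wall reading with the card minus the no-card baseline."""
    if system_watts < baseline_watts:
        raise ValueError("reading with the card installed is below the baseline")
    return system_watts - baseline_watts


# Hypothetical placeholder wall readings in watts, NOT data from this review.
baseline_watts = 100.0      # system idle at the login screen, no video card
idle_reading_watts = 120.0  # same state with the video card installed
load_reading_watts = 250.0  # video card running the FurMark stress test

idle_card_watts = isolated_card_power(idle_reading_watts, baseline_watts)
load_card_watts = isolated_card_power(load_reading_watts, baseline_watts)

print(f"Isolated idle power: {idle_card_watts:.0f} W")
print(f"Isolated loaded power: {load_card_watts:.0f} W")
```

Because the meter sits at the wall outlet, figures derived this way include power supply conversion losses.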

| VGA Product Description (sorted by combined total power) | Idle Power | Loaded Power |
|---|---|---|
| NVIDIA GeForce GTX 480 SLI Set | 82 W | 655 W |
| NVIDIA GeForce GTX 590 Reference Design | 53 W | 396 W |
| ATI Radeon HD 4870 X2 Reference Design | 100 W | 320 W |
| AMD Radeon HD 6990 Reference Design | 46 W | 350 W |
| NVIDIA GeForce GTX 295 Reference Design | 74 W | 302 W |
| ASUS GeForce GTX 480 Reference Design | 39 W | 315 W |
| ATI Radeon HD 5970 Reference Design | 48 W | 299 W |
| NVIDIA GeForce GTX 690 Reference Design | 25 W | 321 W |
| ATI Radeon HD 4850 CrossFireX Set | 123 W | 210 W |
| ATI Radeon HD 4890 Reference Design | 65 W | 268 W |
| AMD Radeon HD 7970 Reference Design | 21 W | 311 W |
| NVIDIA GeForce GTX 470 Reference Design | 42 W | 278 W |
| NVIDIA GeForce GTX 580 Reference Design | 31 W | 246 W |
| NVIDIA GeForce GTX 570 Reference Design | 31 W | 241 W |
| ATI Radeon HD 5870 Reference Design | 25 W | 240 W |
| ATI Radeon HD 6970 Reference Design | 24 W | 233 W |
| NVIDIA GeForce GTX 465 Reference Design | 36 W | 219 W |
| NVIDIA GeForce GTX 680 Reference Design | 14 W | 243 W |
| Sapphire Radeon HD 4850 X2 11139-00-40R | 73 W | 180 W |
| NVIDIA GeForce 9800 GX2 Reference Design | 85 W | 186 W |

NVIDIA GeForce GTX 780 Reference Design |