NVIDIA GeForce GTX 480 Fermi Video Card

Written by Olin Coles

Friday, 26 March 2010

NVIDIA GeForce GTX 480 Video Card Review

PC video game enthusiasts have depended on two companies to deliver graphics power for their computer systems: NVIDIA and ATI. While NVIDIA has enjoyed fan favoritism for the past decade, ATI has recently enjoyed strong sales and a decisive head start on the growing DirectX-11 consumer market over the past six months (as evidenced by our unbelievably long list of recent video card reviews). The ATI Radeon HD 5000 series has earned AMD new respect, but many inside the industry have impatiently waited for NVIDIA to respond with its fabled GF100 Fermi DX11 architecture. At long last, NVIDIA's Fermi is a reality. At the center of every new technology is purpose, and NVIDIA has designed its Fermi GF100 GPU with the end goal of redefining the video game experience through significant graphics processor innovations. Disruptive technology often changes the way users interact with computers, and the GeForce GTX-480 graphics card is a complex tool built to arrive at one simple destination: immersive entertainment. Priced at $499, the NVIDIA GeForce GTX 480 enables DirectX-11 video games to deliver unmatched geometric realism. In this article Benchmark Reviews tests the 3D frame rate performance of NVIDIA's GeForce GTX 480, and demonstrates how well the Fermi architecture fits in with GeForce 3D Vision.

NVIDIA GeForce GTX 480 Video Card

TSMC, the largest semiconductor foundry on the planet, has had a great deal of difficulty moving to its smaller 40nm fabrication process. The GF100 was originally intended to feature 512 CUDA cores, but faced with limited yields from TSMC, NVIDIA decided to end the consumer's wait and offer gamers and PC hardware enthusiasts a 480-core solution. Some readers may recall that AMD reacted to its yield crisis differently, and decided it would look better to announce a product with extremely limited quantities. While this tactic works well for review samples and a pretty press release, NVIDIA knows that gamers want to actually own the video card... not just read about it.

NVIDIA presents to us the GeForce GTX-480 graphics card. Powered by 48 ROPs and 480 unified CUDA (shader) cores, the GF100 Fermi GPU has 3.2 billion transistors to help process DirectX-11 commands and render some of the most detailed graphics ever seen on the PC platform. Tessellation is the word for 2010, and DX11 brings movie-quality graphics to consumer-level video games. Benchmark Reviews tests the graphics frame rate performance of the NVIDIA GeForce GTX 480 using several of the most demanding PC video game titles and benchmark software available. Old favorites such as Crysis Warhead, Far Cry 2, Resident Evil 5, and 3DMark Vantage are all included. New to the scene are Battlefield: Bad Company 2, Stalker: Call of Pripyat, BattleForge, and the recently announced Unigine Heaven 2.0 benchmark.

EDITOR'S NOTE: Since testing NVIDIA's engineering sample for this article, we've received retail GeForce GTX-480 products that perform the same but require less power and produce less heat and noise. Read more in our Zotac GeForce GTX-480 Fermi Video Card review, which also includes SLI performance results.

About NVIDIA Corporation:

NVIDIA (Nasdaq: NVDA) is the world leader in visual computing technologies and the inventor of the GPU, a high-performance processor which generates breathtaking, interactive graphics on workstations, personal computers, game consoles, and mobile devices. NVIDIA serves the entertainment and consumer market with its GeForce products, the professional design and visualization market with its Quadro products, and the high-performance computing market with its Tesla products. These products are transforming visually-rich and computationally-intensive applications such as video games, film production, broadcasting, industrial design, financial modeling, space exploration, and medical imaging.

NVIDIA Product Lines

GeForce - GPUs dedicated to graphics and video.

Quadro - A complete range of professional solutions engineered to deliver breakthrough performance and quality.

Tesla - A massively-parallel multi-threaded architecture for high-performance computing problems.

Tessellation

In today's complex graphics, tessellation offers the means to store massive amounts of coarse geometry, with expand-on-demand functionality. In the NVIDIA GF100 GPU, tessellation also enables more complex animations. In terms of model scalability, dynamic Level of Detail (LOD) allows quality and performance to be traded off, adding geometric detail wherever it improves picture quality without a performance penalty. Composed of three layers (original geometry, tessellation geometry, and displacement map), the final product is far more detailed in shading and data expansion than if it were constructed with bump-map technology. In plain terms, tessellation produces real peaks and valleys with shadow detail in between, while previous-generation technology (bump mapping) only gives the illusion of detail.
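The expand-on-demand idea can be illustrated with a small sketch: a coarse patch (here a single flat quad) is subdivided according to a level-of-detail factor, and a displacement function then pushes the new vertices outward so the surface gains real geometry instead of shaded illusion. This is a CPU-side Python toy, not DirectX-11's hull/tessellator/domain-shader pipeline; the uniform integer tessellation factor and the `bumpy` height function are assumptions made for illustration.

```python
import math


def tessellate_quad(factor):
    """Uniformly subdivide the unit quad [0,1] x [0,1] into a factor x factor grid.

    Returns (vertices, triangles). Vertices are (u, v) pairs; each triangle is a
    tuple of three vertex indices. A factor of n gives (n + 1)**2 vertices and
    2 * n**2 triangles.
    """
    if factor < 1:
        raise ValueError("tessellation factor must be >= 1")
    row = factor + 1
    verts = [(i / factor, j / factor) for j in range(row) for i in range(row)]
    tris = []
    for j in range(factor):
        for i in range(factor):
            a = j * row + i  # lower-left corner of this grid cell
            b = a + 1        # lower-right
            c = a + row      # upper-left
            d = c + 1        # upper-right
            tris.append((a, b, d))
            tris.append((a, d, c))
    return verts, tris


def displace(verts, height):
    """Push each (u, v) vertex along the patch normal (+z) by height(u, v)."""
    return [(u, v, height(u, v)) for u, v in verts]


def bumpy(u, v):
    """Hypothetical displacement map: a gentle egg-crate pattern."""
    return 0.1 * math.sin(2 * math.pi * u) * math.sin(2 * math.pi * v)


# Same coarse patch, three levels of detail
for lod in (1, 4, 16):
    verts, tris = tessellate_quad(lod)
    surface = displace(verts, bumpy)
    print(f"factor {lod:2d}: {len(surface):4d} vertices, {len(tris):4d} triangles")
```

Only the two-triangle quad needs to be stored; at a factor of 16 the same patch expands to 512 displaced triangles, which is the storage-versus-detail trade the LOD control is meant to manage.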

Using GPU-based tessellation, a game developer can send a compact geometric representation of an object or character, and the tessellation unit can produce the correct geometric complexity for the specific scene. Consider the "Imp" character illustrated above. On the far left we see the initial quad mesh used to model the general outline of the figure; this representation is quite compact even when compared to typical game assets. The two middle images of the character are created by finely tessellating the description at the left. The result is a very smooth appearance, free of the faceting that resulted from limited geometry. Unfortunately this character, while smooth, is no more detailed than the coarse mesh. The final image on the right was created by applying a displacement map to the smoothly tessellated third character from the left.

Tessellation in DirectX-11

Control hull shaders run DX11 pre-expansion routines and operate explicitly in parallel across all points. Domain shaders run post-expansion operations on maps (u/v or x/y/z/w) and are also implicitly parallel. Fixed-function tessellation is configured by Level of Detail (LOD) based on output from the control hull shader, and can also produce triangles and lines if requested. Tessellation is new to NVIDIA GPUs, and was not part of GT200 because of geometry bandwidth bottlenecks from sequential rendering/execution semantics. For the GF100 graphics processor, NVIDIA has added new PolyMorph and Raster engines to handle world-space processing (PolyMorph) and screen-space processing (Raster). There are sixteen PolyMorph engines and four Raster engines on the GF100, which depend on an improved L2 cache to keep buffered geometric data produced by the pipeline on-die.

Four-Offset Gather4

The texture unit on previous processor architectures operated at the core clock of the GPU.
On GF100, the texture units run at a higher clock, leading to improved texturing performance for the same number of units. GF100's texture units now add support for DirectX-11's BC6H and BC7 texture compression formats, reducing the memory footprint of HDR textures and render targets. The texture units also support jittered sampling through DirectX-11's four-offset Gather4 feature, allowing four texels to be fetched from a 128×128 pixel grid with a single texture instruction. NVIDIA's GF100 implements DirectX-11 four-offset Gather4 in hardware, greatly accelerating shadow mapping, ambient occlusion, and post-processing algorithms. With jittered sampling, games can implement smoother soft shadows or custom texture filters efficiently. The previous GT200 GPU offered up to 16x coverage sample antialiasing (CSAA), while the GF100 can deliver 32x CSAA.

GF100 Compute for Gaming

As developers continue to search for novel ways to improve their graphics engines, the GPU will need to excel at a diverse and growing set of graphics algorithms. Since these algorithms are executed via general compute APIs, a robust compute architecture is fundamental to a GPU's graphical capabilities. In essence, one can think of compute as the new programmable shader. GF100's compute architecture is designed to address a wider range of algorithms and to facilitate more pervasive use of the GPU for solving parallel problems. Many algorithms, such as ray tracing, physics, and AI, cannot exploit shared memory because program memory locality is only revealed at runtime. GF100's cache architecture was designed with these problems in mind. With up to 48 KB of L1 cache per Streaming Multiprocessor (SM) and a global L2 cache, threads that access the same memory locations at runtime automatically run faster, irrespective of the choice of algorithm. NVIDIA Codename NEXUS brings CPU and GPU code development together in Microsoft Visual Studio 2008 for a shared process timeline.
NEXUS also introduces the first hardware-based shader debugger. NVIDIA's GF100 is the first GPU ever to offer full C++ support, the programming language of choice among game developers. To ease the transition to GPU programming, NVIDIA developed Nexus, a Microsoft Visual Studio programming environment for the GPU. Together with new hardware features that provide better debugging support, developers will be able to enjoy CPU-class application development on the GPU. The end result is C++ and Visual Studio integration that brings HPC users onto the same development platform. NVIDIA offers several paths to deliver compute functionality on the GF100 GPU, such as CUDA C++ for video games. Image processing, simulation, and hybrid rendering are three primary functions of GPU compute for gaming. Using NVIDIA's GF100 GPU, interactive ray tracing becomes possible for the first time on a standard PC. Ray tracing performance on the NVIDIA GF100 is roughly 4x faster than it was on the GT200 GPU, according to NVIDIA tests. AI/path finding is a compute-intensive process well suited for GPUs. The NVIDIA GF100 can handle AI obstacle computations approximately 3x faster than the GT200. Benefits from this improvement are faster collision avoidance and shortest-path searches for higher-performance path finding.

GF100 Specifications

GeForce Specifications

NVIDIA GF100 GPU Fermi Architecture

NVIDIA's latest GPU is codenamed GF100, and is the first graphics processor based on the Fermi architecture. In this article, Benchmark Reviews explains the technical architecture behind NVIDIA's GF100 graphics processor and offers insight into upcoming Fermi-based GeForce video cards. For those who are not familiar, NVIDIA's GF100 GPU is their first graphics processor to support DirectX-11 hardware features such as tessellation and DirectCompute, while also adding heavy particle and turbulence effects. The GF100 GPU is also the successor to the GT200 graphics processor, which launched in the GeForce GTX 280 video card back in June 2008. NVIDIA has since redefined its focus, and GF100 demonstrates a dedication to next-generation gaming effects such as ray tracing, order-independent transparency, and fluid simulations. Rest assured, the new GF100 GPU is more powerful than the GT200 could ever be, and early results indicate a Fermi-based video card delivers far more than twice the gaming performance of a GeForce GTX-280. GF100 is not another incremental GPU step-up like we had going from G80 to GT200. Processor core counts grew from 128 (G80) to 240 (GT200), and now reach 512, earning the title of NVIDIA CUDA (Compute Unified Device Architecture) cores. The key here is not only the name, but that the name now implies an emphasis on something more than just graphics. Each Fermi CUDA processor core has a fully pipelined integer arithmetic logic unit (ALU) and floating point unit (FPU). GF100 implements the new IEEE 754-2008 floating-point standard, providing the fused multiply-add (FMA) instruction for both single and double precision arithmetic. FMA improves over a multiply-add (MAD) instruction by doing the multiplication and addition with a single final rounding step, with no loss of precision in the addition. FMA minimizes rendering errors in closely overlapping triangles.
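The difference between MAD and FMA rounding can be shown with a short Python sketch. Python floats are IEEE 754 double precision, so this demonstrates the double-precision case; `fma_exact` is a hypothetical reference model that computes a*b + c exactly with `fractions.Fraction` and rounds once, not a description of how GF100 hardware implements FMA. The inputs are chosen so the exact product sits just below 1.0.

```python
from fractions import Fraction


def mad(a: float, b: float, c: float) -> float:
    """Separate multiply and add: the product is rounded, then the sum is rounded."""
    product = a * b       # first rounding
    return product + c    # second rounding


def fma_exact(a: float, b: float, c: float) -> float:
    """Model of a fused multiply-add: exact a*b + c, rounded once at the end."""
    exact = Fraction(a) * Fraction(b) + Fraction(c)
    return float(exact)   # the only rounding step


a = 1.0 + 2.0 ** -30
b = 1.0 - 2.0 ** -30
c = -1.0

# Exact a*b is 1 - 2**-60, which is too close to 1.0 to survive rounding.
print("MAD:", mad(a, b, c))        # product rounds to 1.0, so the sum is 0.0
print("FMA:", fma_exact(a, b, c))  # keeps the exact residual, -2**-60
```

MAD loses the tiny residual entirely, while FMA preserves it. Shaders usually work in single precision, but the same single-rounding property is what helps when two nearly coincident triangles produce nearly equal depth or edge values.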

Based on Fermi's third-generation Streaming Multiprocessor (SM) architecture, GF100 doubles the number of CUDA cores over the previous architecture. NVIDIA GeForce GF100 Fermi GPUs are based on a scalable array of Graphics Processing Clusters (GPCs), Streaming Multiprocessors (SMs), and memory controllers. The NVIDIA GF100 implements four GPCs, sixteen SMs, and six memory controllers. Expect NVIDIA to launch GF100 products with different configurations of GPCs, SMs, and memory controllers to address different price points. CPU commands are read by the GPU via the Host Interface. The GigaThread Engine fetches the specified data from system memory and copies it to the frame buffer. GF100 implements six 64-bit GDDR5 memory controllers (384-bit total) to facilitate high-bandwidth access to the frame buffer. The GigaThread Engine then creates and dispatches thread blocks to various SMs. Individual SMs in turn schedule warps (groups of 32 threads) to CUDA cores and other execution units. The GigaThread Engine also redistributes work to the SMs when work expansion occurs in the graphics pipeline, such as after the tessellation and rasterization stages. GF100 implements 512 CUDA cores, organized as 16 SMs of 32 cores each. Each SM is a highly parallel multiprocessor supporting up to 48 warps at any given time. Each CUDA core is a unified processor core that executes vertex, pixel, geometry, and compute kernels. A unified L2 cache architecture services load, store, and texture operations. GF100 has 48 ROP units for pixel blending, antialiasing, and atomic memory operations. The ROP units are organized in six groups of eight. Each group is serviced by a 64-bit memory controller. The memory controller, L2 cache, and ROP group are closely coupled: scaling one unit automatically scales the others.

NVIDIA GigaThread Thread Scheduler

One of the most important technologies of the Fermi architecture is its two-level, distributed thread scheduler.
At the chip level, a global work distribution engine schedules thread blocks to various SMs, while at the SM level, each warp scheduler distributes warps of 32 threads to its execution units. The first-generation GigaThread engine introduced in G80 managed up to 12,288 threads in real time. The Fermi architecture improves on this foundation by providing not only greater thread throughput, but dramatically faster context switching, concurrent kernel execution, and improved thread block scheduling.

What's new in Fermi?

With any new technology, consumers want to know what's new in the product. The goal of this article is to share in-depth information surrounding the Fermi architecture, as well as the new functionality unlocked in GF100. For clarity, the 'GF' letters used in the GF100 GPU name are not an abbreviation for 'GeForce'; they actually denote that this GPU is a Graphics solution based on the Fermi architecture. The next generation of NVIDIA GeForce-series desktop video cards will use the GF100 to promote the following new features:

Benchmark Reviews offers more detail in our full-length NVIDIA GF100 GPU Fermi Graphics Architecture guide.

Closer Look: GeForce GTX480

So far, 2010 has been an exciting year for game developers. Microsoft Windows 7 introduced gamers to DirectX-11, and video games released for the PC platform have looked better than ever. DirectX-11 is the leap in video game software development we've been waiting for. Screen Space Ambient Occlusion (SSAO) is given emphasis in DX11, allowing some of the most detailed computer textures gamers have ever seen. Realistic cracks in mud with definable depth and splintered tree bark make the game more realistic, but they also make new demands on the graphics hardware. This new level of graphical detail requires a new level of computer hardware: DX11-compliant hardware. Tessellation places a tremendous strain on the GPU, making previous graphics hardware virtually obsolete with new DX11 game titles.

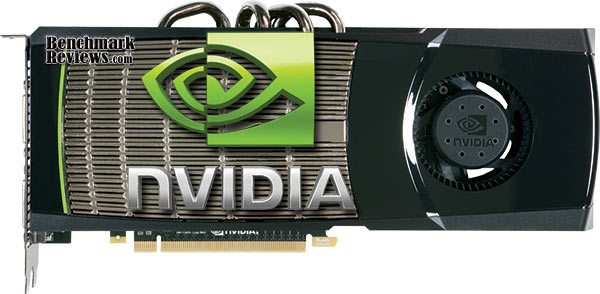

The NVIDIA GeForce GTX480 replaces the GeForce GTX285 as NVIDIA's most powerful single-GPU desktop graphics card. Keeping the same dual-slot card height and 10.5" length, the GeForce GTX480 adds a more robust thermal management system with five heatpipes (four exposed) transferring heat from the GF100 GPU to an exposed heatsink. Video frame buffer memory specifications change with the GTX480: the 512-bit memory interface of the GTX285 is replaced by a 384-bit version, which features 1536MB of GDDR5 vRAM operating at 924/3696 MHz clock and data rates.
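The configuration figures quoted in this review can be combined into a few derived numbers. The sketch below uses only values stated in the text (32 CUDA cores per SM, 480 enabled cores, up to 48 warps of 32 threads per SM, six 64-bit memory controllers, and a 3696 MHz effective GDDR5 data rate); the results are theoretical arithmetic in decimal gigabytes, not measurements, and the variable names are illustrative.

```python
# Inputs taken from the review text
CORES_PER_SM = 32
ENABLED_CORES = 480          # GeForce GTX 480 (a full GF100 has 512)
WARPS_PER_SM = 48
THREADS_PER_WARP = 32
MEMORY_CONTROLLERS = 6
BITS_PER_CONTROLLER = 64
DATA_RATE_MTPS = 3696        # effective GDDR5 data rate, millions of transfers/s

# Derived values
enabled_sms = ENABLED_CORES // CORES_PER_SM
resident_threads = enabled_sms * WARPS_PER_SM * THREADS_PER_WARP
bus_width_bits = MEMORY_CONTROLLERS * BITS_PER_CONTROLLER
# bytes moved per transfer across the whole bus x transfers per second -> MB/s, then GB/s
bandwidth_gb_s = (bus_width_bits // 8) * DATA_RATE_MTPS / 1000

print(f"enabled SMs:           {enabled_sms}")
print(f"max resident threads:  {resident_threads}")
print(f"memory bus width:      {bus_width_bits}-bit")
print(f"peak memory bandwidth: {bandwidth_gb_s:.1f} GB/s")
```

The 480-core count corresponds to 15 of GF100's 16 SMs, and the 384-bit bus at 3696 MT/s works out to roughly 177.4 GB/s of theoretical peak bandwidth.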

Other changes occur in more subtle ways, such as with the device header panel. While keeping to the traditional design used for GeForce 200-series products, the GTX 480 simply replaces the S-Video connection with a more relevant HDMI 1.3a A/V output. NVIDIA has retained dual DVI outputs, which means that at least two GeForce video cards will be needed for GeForce 3D Vision Surround. As with past GeForce video cards, the GPU offers two output 'lanes', so all three outputs cannot be used at once.

The new 40nm fabrication process makes room for more transistors: from 1.4 billion in the GT200 GPU used on the GeForce GTX 285 to an astounding 3.2 billion in the Fermi GF100 GPU used on the GeForce GTX480 (and also the GTX470). The increased transistor count also raises thermal output, which NVIDIA estimates at 250 watts TDP.

One particular difference between the GTX285 and the GTX480 is heat. While the exposed heatsink does well enough to remove a portion of the heat from the 700MHz GPU, the exhaust vents begin to expel hot air from initial start-up. The GF100 GPU is located closer to the exhaust panel than GT200 was, and although the heatsink and heat-pipes are improved, the card runs very warm even at idle. We explore operating temperatures later in this article. Although the card is designed for 250W, the 6+8-pin power connections are good for up to 400W on demand... which is close to our full-load power consumption results. Keep this in mind when shopping for a power supply unit; NVIDIA recommends a 600W PSU for the GTX480, but 800W would be a safer suggestion.

Similar to the GeForce GTX285, both the GTX470 and GTX480 offer triple-SLI capability. SLI and triple-SLI are technically possible, but careful consideration must be given to heat with the GTX480. Under load this video card nearly reached 100°C in a 25°C room, and that's with an extremely well-ventilated computer case helping to keep it cool. Also note the open PCB, which allows the blower fan to draw in air from either side of the unit. The printed circuit board (PCB) is a busy place on the GeForce GTX480. Many of the electronic components have been moved to the 'top' side of the PCB to better accommodate the full-size 530 mm² GF100 GPU and its 3.2 billion transistors. The 480 CUDA cores operate at 1401 MHz, which keeps a firm lead over ATI's 850 MHz Cypress-XT GPU that measures 334 mm² and fits 2.154 billion transistors.

In the next several sections, Benchmark Reviews explains our video card test methodology, followed by a performance comparison of the NVIDIA GeForce GTX480 against several of the most popular graphics accelerators available. The GeForce GTX480 replaces the GeForce GTX 285, and directly competes against the ATI Radeon HD 5870, so we'll be keeping a close eye on comparative performance.

VGA Testing Methodology

The Microsoft DirectX-11 graphics API is native to the Microsoft Windows 7 operating system, which will be the primary O/S for our test platform. DX11 is also available as a Microsoft Update for the Vista O/S, so our test results apply to both versions of the operating system. Because not all graphics solutions were DX11 compatible at the time this article was published, DirectX-10 test settings have been included beside DirectX-11 results.

According to the Steam Hardware Survey published at the time of the Windows 7 launch, the most popular gaming resolution is 1280x1024 (17-19" standard LCD monitors), closely followed by 1024x768 (15-17" standard LCD). However, because these resolutions are considered 'low' by most standards, our benchmark performance tests concentrate on the up-and-coming higher-demand resolutions: 1680x1050 (22-24" widescreen LCD) and 1920x1200 (24-28" widescreen LCD monitors). These resolutions are more likely to be used with high-end graphics solutions, such as those tested in this article. Each benchmark test begins with one 'cache run', followed by five recorded test runs. Results are collected at each setting, and the highest and lowest results are discarded. The remaining three results are averaged and displayed in the performance charts on the following pages. A combination of synthetic and video game benchmark tests has been used in this article to illustrate relative performance among graphics solutions. Our benchmark frame rate results are not intended to represent real-world graphics performance, as this experience would change based on supporting hardware and the perception of individuals playing the video game.

Intel X58-Express Test System

Benchmark Applications

Video Card Test Products
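The run-averaging rule from the testing methodology (one cache run, five recorded runs, highest and lowest discarded, the remaining three averaged) can be expressed as a small function. This is a sketch of that rule, not Benchmark Reviews' actual tooling; the function name and the sample frame rates are hypothetical.

```python
from statistics import mean


def score_runs(runs: list[float]) -> float:
    """Score one benchmark setting from six runs.

    runs[0] is the 'cache run' and is ignored; runs[1:] must be the five
    recorded runs. The highest and lowest recorded runs are discarded and
    the remaining three are averaged.
    """
    recorded = runs[1:]
    if len(recorded) != 5:
        raise ValueError("expected one cache run followed by five recorded runs")
    middle_three = sorted(recorded)[1:-1]  # drop the lowest and highest runs
    return mean(middle_three)


# Hypothetical frame rates (FPS) for one card at one resolution
print(score_runs([61.0, 58.2, 59.1, 57.9, 60.4, 58.8]))
```

Dropping the extremes before averaging makes a single unusually fast or slow run (for example, a background disk access) less likely to move the charted result.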

3DMark Vantage GPU Tests

3DMark Vantage is a PC benchmark suite designed to test DirectX-10 graphics card performance. Futuremark 3DMark Vantage is the latest addition to the 3DMark benchmark series built by Futuremark Corporation. Although 3DMark Vantage requires NVIDIA PhysX to be installed for program operation, only the CPU/Physics test relies on this technology. 3DMark Vantage offers benchmark tests focusing on GPU, CPU, and Physics performance. Benchmark Reviews uses the two GPU-specific tests for grading video card performance: Jane Nash and New Calico. These tests isolate graphical performance and remove processor dependence from the benchmark results.

3DMark Vantage GPU Test: Jane Nash

Of the two GPU tests 3DMark Vantage offers, the Jane Nash performance benchmark is slightly less demanding. In a short video scene the special agent escapes a secret lair by water, nearly losing her shirt in the process. Benchmark Reviews tests this DirectX-10 scene at 1680x1050 and 1920x1200 resolutions, and uses Extreme quality settings with 8x anti-aliasing and 16x anisotropic filtering. The 1:2 scale is utilized, and is the highest this test allows. By maximizing the processing levels of this test, the scene creates the highest level of graphical demand possible and sorts the strong from the weak.

Looking at 3DMark Vantage performance, there are some trends forming. The Radeon HD4890 and GeForce GTX275 are similarly matched, and generally perform at the low end of our chart. The factory-overclocked GeForce GTX285 is overshadowed by the Radeon HD5850, while the Radeon HD5870 tops the charts for single-GPU performance. NVIDIA's GeForce GTX480 trails the HD5870 by less than one FPS in the Jane Nash tests, and leads the GTX285 by 47%. NVIDIA's dual-GPU GeForce GTX295 is no match for the ATI Radeon HD5970, but one GF100 GPU easily beats two GT200s.

3DMark Vantage GPU Test: New Calico

New Calico is the second GPU test in the 3DMark Vantage test suite. Of the two GPU tests, New Calico is the more demanding. In a short video scene featuring a galactic battleground, there is a massive display of busy objects across the screen. Benchmark Reviews tests this DirectX-10 scene at 1680x1050 and 1920x1200 resolutions, and uses Extreme quality settings with 8x anti-aliasing and 16x anisotropic filtering. The 1:2 scale is utilized, and is the highest this test allows. Using the highest graphics processing level available allows our test products to separate themselves and stand out (if possible).

Similar to the Jane Nash tests, the New Calico tests in 3DMark Vantage have the Radeon HD4890 and GeForce GTX275 evenly matched. The Radeon HD5850 still trumps the GeForce GTX285, but in this test the GeForce GTX480 outperforms the Radeon HD5870 and all other single-GPU video cards. For reference, the GeForce GTX480 trailed the dual-GPU Radeon HD5970 by 32-51%. Test Summary: The GeForce GTX480 improves upon the GTX285 by nearly 61% at 1920x1200, and outperforms the Radeon HD5870 by 10%. The recent 1.02 version update and fresh Forceware/Catalyst drivers do not appear to affect 3DMark Vantage, and results indicate that this is still a balanced tool for measuring DX10 graphics performance.

Battlefield: Bad Company 2

The Battlefield franchise has been known to demand a lot from PC graphics hardware. DICE (Digital Illusions CE) has built Battlefield: Bad Company 2 on its Frostbite-1.5 game engine with the Destruction-2.0 feature set. Battlefield: Bad Company 2 features destructible environments using Frostbite Destruction-2.0, and adds gravitational bullet drop effects for projectiles fired from weapons at long distances. The Frostbite-1.5 game engine used in Battlefield: Bad Company 2 uses DirectX-10 primary graphics, with improved performance and softened dynamic shadows added for DirectX-11 users. At the time Battlefield: Bad Company 2 was published, DICE was also working on the Frostbite-2.0 game engine. This upcoming engine will include native support for DirectX-10.1 and DirectX-11, as well as parallelized processing support for 2-8 parallel threads. This will improve performance for users with an Intel Core-i7 processor. Unfortunately, the Extreme Edition Intel Core i7-980X six-core CPU with twelve threads will not see full utilization. In our benchmark tests of Battlefield: Bad Company 2, the first three minutes of action in the single-player raft night scene are captured with FRAPS. Relative to the online multiplayer action, these frame rate results are nearly identical to daytime maps with the same video settings.

The Frostbite-1.5 game engine in Battlefield: Bad Company 2 appears to equalize our test set of video cards, and despite AMD's sponsorship of the game it still plays well using any brand of graphics card. There's a noticeable lead for the GeForce GTX275 over the Radeon HD4890, and the GeForce GTX285 is much closer to the Radeon HD5850 and HD5870. The NVIDIA GeForce GTX480 leads the Radeon HD5870 by 23%, and improves upon the GTX285 by 58%. Surprisingly, the dual-GPU Radeon HD5970 leads the GTX480 by only 15%. Test Summary: In Battlefield: Bad Company 2, the GeForce GTX480 improves upon the GTX285 by nearly 59% while beating the ATI Radeon HD5870 by 23%. Additionally, only 9 FPS separate the GTX480 and the dual-GPU Radeon HD5970.

BattleForge Performance

BattleForge is a free Massive Multiplayer Online Role Playing Game (MMORPG) developed by EA Phenomic with DirectX-11 graphics capability. Combining strategic cooperative battles, the community of MMO games, and trading card gameplay, BattleForge lets players put their creatures, spells and buildings into whatever combinations they see fit. These units are represented in the form of digital cards from which you build your own unique army. With minimal resources and a custom tech tree to manage, the gameplay is unbelievably accessible and action-packed. Benchmark Reviews uses the built-in graphics benchmark to measure performance in BattleForge, using Very High quality settings (detail) and 8x anti-aliasing with auto multi-threading enabled. BattleForge is one of the first titles to take advantage of DirectX-11 in Windows 7, and offers a very robust color range throughout the busy battleground landscape. The first chart illustrates how performance measures up between video cards when Screen Space Ambient Occlusion (SSAO) is disabled, which runs tests at DirectX-10 levels.

When Screen Space Ambient Occlusion (SSAO) is disabled, past-generation NVIDIA GeForce and ATI Radeon products are compared on a more even playing field (so long as you disregard the fact that we have a few DirectX-10 cards in the mix, and that BattleForge is a DirectX-11 game). These tests illustrate how well new DX11-compliant video cards improve upon previously popular DX10 graphics solutions. Looking at performance at 1920x1200 resolution, the ATI Radeon HD4890 is slightly ahead of the GeForce GTX275, and the Radeon HD5850 is ahead of the overclocked ASUS GeForce GTX 285 TOP. The Radeon HD 5870 is a few FPS ahead of the GeForce GTX295 dual-GT200 video card. NVIDIA's Fermi-based GeForce GTX480 delivers better graphics performance than every other video card we tested, and its 82.5 FPS outperforms the dual-GPU ATI Radeon HD5970 by 18% at 1920x1200 or 20% at 1680x1050. The next chart (below) illustrates how BattleForge reacts when SSAO is enabled, which forces multi-core optimizations that DirectX-11-compatible video cards are best suited to handle:

As should be expected, the DirectX-11-compatible ATI Radeon HD 5000 series reveals an immediate advantage over all previous-generation NVIDIA GeForce products. SSAO isn't a technology that DX10 GeForce products can handle very well, yet the older ATI Radeon products seem to cope well enough with the new strain of DX11. If gaming is the primary purpose for a discrete graphics card, then you'll want to consider that nearly all new video games coming to market will be developed with SSAO and other DirectX-11 extensions. These features make it difficult (and sometimes impossible) to enjoy the game on non-compliant graphics hardware. In EA's BattleForge, a reference-clocked ATI Radeon HD4890 is able to outperform the GeForce GTX275 and an overclocked ASUS GeForce GTX285 TOP, and nearly match the GeForce GTX295. This indicates that Windows 7 will re-center the definition of 'mainstream' graphics products. What was top shelf in Windows XP will soon become the low end with DirectX-11 in Windows 7 or Vista. For gamers who plan to use Windows 7, and especially those who play BattleForge, the Radeon HD 5850 offered excellent performance, as did the HD5870 and dual-GPU Radeon HD5970, but it was the NVIDIA GeForce GTX480 that deserves total respect. Test Summary: With an unbiased appetite for raw DirectX-11 graphics performance, BattleForge appears to be neutral toward ATI and NVIDIA products. When high-strain SSAO is called into action, NVIDIA's GTX480 demonstrates how well Fermi is suited for DX11... improving upon the GeForce GTX285 by nearly 249%. While trumping ATI's best single-GPU Radeon HD5870 by 61% is an impressive feat all by itself, outperforming a dual-GPU Radeon HD5970 by 11% is remarkable.

Crysis Warhead Tests

Crysis Warhead is an expansion pack based on the original Crysis video game. Crysis Warhead is set in the future, where an ancient alien spacecraft has been discovered beneath the Earth on an island east of the Philippines. Crysis Warhead uses a refined version of the CryENGINE2 graphics engine. Like Crysis, Warhead uses the Microsoft Direct3D 10 (DirectX-10) API for graphics rendering. Benchmark Reviews uses the HOC Crysis Warhead benchmark tool to test and measure graphics performance using the Airfield 1 demo scene. This short test places a high amount of stress on a graphics card, both because of its detailed terrain and textures and because of the test settings used. Using the DirectX-10 test with Very High Quality settings, the Airfield 1 demo scene receives 4x anti-aliasing and 16x anisotropic filtering to create maximum graphics load and separate the products according to their performance. At these settings, only the most powerful graphics cards are expected to perform well in our Crysis Warhead benchmark tests. DirectX-11 extensions are not supported in Crysis Warhead, and SSAO is not an available option.

Without SSAO support, Crysis becomes a battle of sheer graphics power. The Radeon HD4890 and GeForce GTX275 dance around 18 FPS at 1920x1200, and the Radeon HD5850 chases the 21 FPS mark alongside an overclocked ASUS GeForce GTX 285 TOP. But when it comes to top-tier graphics, the ATI Radeon HD5870 measures up to the GeForce GTX295 of yesteryear and not much more. At 33 FPS, the NVIDIA GeForce GTX480 is ahead of the HD5870 by 22%, and trails the dual-GPU Radeon HD5970 by a mere 6 FPS (only 4 FPS at 1680x1050).

Test Summary: The CryENGINE2 graphics engine used in Crysis Warhead responds well to both ATI and NVIDIA products, with test results trending very closely to 3dMark Vantage. The NVIDIA GeForce GTX480 improves upon the GTX285 by 57%, and also beats the ATI Radeon HD5870 by 22%. With 33 FPS at 1920x1200, the GTX480 is a mere 6 FPS away from the dual-GPU ATI Radeon HD5970.

Far Cry 2 Benchmark

Ubisoft developed Far Cry 2 as a sequel to the original, but with a very different approach to game play and story line. Far Cry 2 features a vast world built on Ubisoft's new game engine called Dunia, meaning "world", "earth" or "living" in Farsi. Far Cry 2 takes place in a fictional Central African landscape, set in a modern-day timeline. The Dunia engine was built specifically for Far Cry 2 by the Ubisoft Montreal development team. It delivers realistic semi-destructible environments, special effects such as dynamic fire propagation and storms, real-time night-and-day sunlight and moonlight cycles, a dynamic music system, and non-scripted enemy A.I. actions. The Dunia game engine takes advantage of multi-core processors as well as multiple processors, and supports DirectX 9 as well as DirectX-10. Only 2 or 3 percent of the original CryEngine code is re-used, according to Michiel Verheijdt, Senior Product Manager for Ubisoft Netherlands. Additionally, the engine is less hardware-demanding than CryEngine 2, the engine used in Crysis. However, it should be noted that Crysis delivers greater character and object texture detail, as well as more destructible elements within the environment; for example, trees break into many smaller pieces and buildings break down into their component panels. Far Cry 2 also supports the amBX technology from Philips. With the proper hardware, this adds effects like vibrations, ambient colored lights, and fans that generate wind effects. There is a benchmark tool in the PC version of Far Cry 2, which offers an excellent array of settings for performance testing. Benchmark Reviews used the maximum settings allowed for DirectX-10 tests, with the resolution set to 1920x1200. Performance settings were all set to 'Very High', Render Quality was set to 'Ultra High' overall quality, 8x anti-aliasing was applied, and HDR and Bloom were enabled.

Although the Dunia engine in Far Cry 2 is slightly less demanding than the CryEngine 2 engine in Crysis, the strain appears to be extremely close. In Crysis we didn't dare to test AA above 4x, whereas we used 8x AA and 'Ultra High' settings in Far Cry 2. The end effect was a separation between what is capable of maximum settings, and what is not. Using the short 'Ranch Small' time demo (which yields the lowest FPS of the three tests available), we noticed that very few products are capable of producing playable frame rates with the settings all turned up. Inspecting the performance at 1920x1200 resolution, it appears that every graphics card we tested can handle higher quality settings and post-processing effects in Far Cry 2. Similar to Battlefield: Bad Company 2 and Crysis Warhead, Far Cry 2 delivers optimal performance on NVIDIA GeForce video cards over the ATI Radeon alternatives... The Way It's Meant To Be Played. The Radeon HD4890 lags behind the GeForce GTX275, and for a change the Radeon HD5850 only matches the overclocked ASUS GeForce GTX 285 TOP. The results clearly lean towards NVIDIA here. The GeForce GTX480 outperforms every other graphics card we tested in Far Cry 2, with a healthy lead over the HD5870 and a few FPS ahead of the dual-GPU Radeon HD5970.

Test Summary: The Dunia game engine appears to favor NVIDIA products over ATI, and if you're one of the many gamers who like to play Far Cry 2, perhaps you should favor them too. The new NVIDIA GeForce GTX480 improves over the past-generation GeForce GTX285 by nearly 76%, and beats the Radeon HD5870 by over 50%. The surprise here is a measured advantage of 2 FPS for the single GF100 graphics card over the dual GPUs in the Radeon HD5970.

Resident Evil 5 Tests

Built upon an advanced version of Capcom's proprietary MT Framework game engine to deliver DirectX-10 graphic detail, Resident Evil 5 offers gamers non-stop action similar to Devil May Cry 4, Lost Planet, and Dead Rising. The MT Framework is an exclusive seventh-generation game engine built for games developed for the PlayStation 3, Xbox 360, and PC. MT stands for "Multi-Thread", "Meta Tools" and "Multi-Target". Games using the MT Framework are originally developed on the PC and then ported to the two console platforms. On the PC version of Resident Evil 5, both DirectX 9 and DirectX-10 modes are available for Microsoft Windows XP and Vista operating systems. Microsoft Windows 7 will play Resident Evil 5 with backwards-compatible Direct3D APIs. Resident Evil 5 is branded with the NVIDIA The Way It's Meant to be Played (TWIMTBP) logo, and receives NVIDIA GeForce 3D Vision functionality enhancements. NVIDIA and Capcom offer the Resident Evil 5 benchmark demo for free download from their websites, and Benchmark Reviews encourages visitors to compare their own results to ours. Because the Capcom MT Framework game engine is very well optimized and produces high frame rates, Benchmark Reviews uses the DirectX-10 version of the test at 1920x1200 resolution. Super-High quality settings are configured, with 8x MSAA and post-processing effects, for maximum demand on the GPU. Test scenes from Area #3 and Area #4 require the most graphics processing power, and the results are collected for the chart illustrated below.

Resident Evil 5 has really proved how good the proprietary Capcom MT Framework game engine can look with DirectX-10 effects. The Area 3 and 4 tests are the most graphically demanding in this free downloadable benchmark demo, but the results make it appear that the Area #3 test scene performs better with NVIDIA GeForce products, while the Area #4 scene favors ATI Radeon GPUs. Even so, the past-generation ATI Radeon HD4890 renders 44 FPS in test scene 3, while jumping to 58 FPS in test scene 4. This loosely indicates that lower-end graphics cards can still play Resident Evil 5 at 1920x1200 and produce good 30+ frame rates with maximum settings. For these results, however, it seems that driver optimizations between manufacturers could account for the disparity among test scenes, although the Resident Evil 5 game itself 'normalizes' in the two other (less demanding) scenes. Many of the video card rankings changed between the two test scenes in Resident Evil 5. The Radeon HD5850 is 5 FPS ahead of NVIDIA's GeForce GTX275 in area #3, and then falls 12 FPS behind in area #4. The inverse is true for the next video cards, where the GeForce GTX285 is ahead of the Radeon HD5870 in area #3 and then drops behind by 16 FPS in area #4. The NVIDIA GeForce GTX480 dominates the entire field in area #3 results, and trails just behind the Radeon HD5970 in area #4.

Test Summary: It's unclear if Resident Evil 5 graphics performance favors ATI or NVIDIA, especially with two test scenes that alternate favoritism. Although this benchmark tool is distributed directly from NVIDIA, and Forceware drivers likely have optimizations written for the Resident Evil 5 game, there doesn't appear to be any decisive tilt towards GeForce products over Radeon counterparts from within the game itself. Test scene #3 certainly favors the GeForce GTX480, placing it ahead of every other product tested.
In test scene #4, the GTX480 scores 118 FPS compared to only 81 with the past-generation GTX285, or 97 FPS from the Radeon HD5870.

STALKER Call of Pripyat Benchmark

The events of S.T.A.L.K.E.R.: Call of Pripyat unfold shortly after the end of S.T.A.L.K.E.R.: Shadow of Chernobyl. Having learned of the open path to the Zone center, the government decides to hold a large-scale military operation, "Fairway", aimed at taking the CNPP under control. According to the operation's plan, the first military group is to conduct aerial scouting of the territory to map out the detailed locations of anomalous fields. Thereafter, making use of the maps, the main military forces are to be dispatched. Despite thorough preparations, the operation fails, and most of the avant-garde helicopters crash. In order to collect information on the reasons behind the operation's failure, Ukraine's Security Service sends its agent into the Zone center. S.T.A.L.K.E.R.: CoP is developed on the X-Ray 1.6 game engine, and implements several ambient occlusion (AO) techniques, including one that AMD has developed. AMD's AO technique is optimized to run efficiently on Direct3D 11 hardware. It has been chosen by a number of games (e.g. BattleForge, HAWX, or the new Aliens vs Predator) for the distinct effect it adds to the final rendered images. This AO technique is called HDAO, which stands for 'High Definition Ambient Occlusion', because it picks up occlusions from fine details in normal maps. Put in simple terms, ambient occlusion describes the parts of the scene that light finds hard to reach. In the real world, light has to bounce off many surfaces in order to reach some places. Classically this problem is solved with a radiosity technique, but this is usually too expensive for real-time applications. For this reason, various screen space techniques have been invented to emulate the effect of ambient occlusion.
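The Python sketch below is a deliberately crude illustration of the screen-space idea. It takes a small depth buffer stored as a list of rows, where smaller values are closer to the camera. Each pixel is darkened in proportion to how many of its on-screen neighbours sit in front of it. The function name `ssao_visibility` and its `radius` and `bias` parameters are hypothetical. This is not AMD's HDAO, HBAO, or the X-Ray engine's implementation. Those techniques sample in 3D, use surface normals, and run on the GPU.

```python
from typing import List

DepthBuffer = List[List[float]]


def ssao_visibility(depth: DepthBuffer, radius: int = 1, bias: float = 0.01) -> DepthBuffer:
    """Crude screen-space ambient occlusion estimate (hypothetical helper).

    Returns a map where 1.0 means fully exposed to ambient light and
    0.0 means every sampled neighbour occludes the pixel.
    """
    height, width = len(depth), len(depth[0])
    visibility = [[1.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            center = depth[y][x]
            samples = occluders = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    if dx == 0 and dy == 0:
                        continue  # skip the pixel itself
                    sy, sx = y + dy, x + dx
                    if 0 <= sy < height and 0 <= sx < width:
                        samples += 1
                        # A neighbour closer to the camera (smaller depth) than the
                        # centre, by more than a small bias, is treated as blocking light.
                        if depth[sy][sx] < center - bias:
                            occluders += 1
            if samples:
                visibility[y][x] = 1.0 - occluders / samples
    return visibility


# A 3x3 "pit": the centre pixel lies deeper than everything around it.
pit = [
    [1.0, 1.0, 1.0],
    [1.0, 2.0, 1.0],
    [1.0, 1.0, 1.0],
]
print(ssao_visibility(pit))  # [[1.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
```

The bottom of the pit comes out fully occluded, while the flat rim stays fully lit. This loosely mirrors how SSAO darkens creases and corners that ambient light finds hard to reach.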

For the sake of including past-generation products in our test results, we've restricted the STALKER: CoP benchmark to DirectX-10 level settings with SSAO. There are three SSAO modes: Default, HBAO, and HDAO; this test uses Default. Each mode then has three SSAO levels of detail: Low, Medium, and High, and HDAO adds a fourth, Ultra. Our tests use the Default-High settings, which rank #3 out of 10 SSAO levels. Using DirectX-10 lighting and Default-High SSAO settings, the HD4890 actually comes amazingly close to the Radeon HD5850 and NVIDIA GeForce GTX480 level of performance. While it's understandable that NVIDIA may not have optimized DX10 performance in their GeForce GTX480 beta driver, the same cannot be said for Forceware 197.13 and the other GeForce products. It's suspicious that a past-generation ATI Radeon HD4890 DX10 video card can dramatically outperform all of NVIDIA's DX10 products, even the dual-GPU GeForce GTX295. My commentary on these test results ends here, and you can draw your own conclusions. Next are the DirectX-11 benchmark tests...
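The "rank #3 out of 10" figure follows from counting the SSAO options described above. Three modes with three levels each give nine, and HDAO's extra Ultra level makes ten. The small model below is a sketch of that count. It assumes the options are ordered Default, then HBAO, then HDAO, each from lowest to highest quality. The article does not state this ordering, and the names `SSAO_MODES` and `ssao_rank` are hypothetical.

```python
# Hypothetical model of the STALKER: CoP SSAO options, ordered lowest to highest.
# The mode order Default -> HBAO -> HDAO is an assumption for illustration.
SSAO_MODES = {
    "Default": ["Low", "Medium", "High"],
    "HBAO": ["Low", "Medium", "High"],
    "HDAO": ["Low", "Medium", "High", "Ultra"],  # Ultra exists only for HDAO
}

# Flatten into one ranked list of (mode, quality) pairs; dicts keep insertion order.
SSAO_LEVELS = [(mode, quality) for mode, qualities in SSAO_MODES.items() for quality in qualities]


def ssao_rank(mode: str, quality: str) -> int:
    """1-based position of a mode/quality pair in the flattened list."""
    return SSAO_LEVELS.index((mode, quality)) + 1


print(len(SSAO_LEVELS), ssao_rank("Default", "High"))  # 10 3
```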

S.T.A.L.K.E.R. Call of Pripyat is a video game based on the DirectX-11 architecture, and designed to use high-definition SSAO. Our DX11 tests utilize the highest settings possible, with HDAO mode set to Ultra SSAO quality. Although this benchmark runs through all four test scenes (Day, Night, Rain, and Sun Shafts), only Day and Night are reported in our chart above.

Test Summary: Something's not right with S.T.A.L.K.E.R. Call of Pripyat. Either the X-Ray game engine or the video game itself is heavily optimized for ATI Radeon video cards, and not representative of the relative performance we've seen with other game titles. If you play S.T.A.L.K.E.R. Call of Pripyat with a DX10 video card, the ATI Radeon series clearly carries more weight. Nevertheless, NVIDIA's GeForce GTX480 Fermi video card holds its own in DX11 tests with HDAO set to Ultra, and improves upon the non-compliant GTX285 by 120% in night scenes and 108% in daytime scenes.

Unigine Heaven Benchmark

The Unigine "Heaven 2.0" benchmark is a free, publicly available tool that unleashes the graphics capabilities of DirectX-11 on Windows 7 or updated Vista operating systems. It reveals the enchanting magic of floating islands with a tiny village hidden in the cloudy skies, and its interactive mode lets you explore that intricate world yourself. Through its advanced renderer, Unigine is one of the first to showcase art assets with tessellation, bringing compelling visual finesse, using the technology to its full extent, and exhibiting the possibilities of enriching 3D gaming. The distinguishing feature of the Unigine Heaven benchmark is hardware tessellation, a scalable technology for automatically subdividing polygons into smaller and finer pieces, so that developers can give their games a more detailed look almost free of charge in terms of performance. Thanks to this procedure, the elaboration of the rendered image approaches the boundary of veridical visual perception.
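To show what "subdividing polygons into smaller and finer pieces" means, here is a minimal CPU-side sketch of uniform midpoint subdivision. Each pass splits every triangle into four by joining its edge midpoints, so n passes yield 4^n triangles. Real DirectX-11 tessellation works differently: it runs in the hull shader, fixed-function tessellator, and domain shader stages, uses per-edge tessellation factors, and usually displaces the new vertices with a height map. None of that is modelled here, and the helper names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[Point, Point, Point]


def midpoint(a: Point, b: Point) -> Point:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)


def subdivide(tri: Triangle) -> List[Triangle]:
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(tri: Triangle, levels: int) -> List[Triangle]:
    """Apply uniform subdivision `levels` times, giving 4**levels triangles."""
    triangles = [tri]
    for _ in range(levels):
        triangles = [small for big in triangles for small in subdivide(big)]
    return triangles


base = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for level in range(4):
    print(level, len(tessellate(base, level)))
```

The triangle count quadruples at every level (1, 4, 16, 64). This geometric growth is why tessellation is normally applied adaptively rather than uniformly.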

Just as we've already done in BattleForge and S.T.A.L.K.E.R. Call of Pripyat, the Unigine "Heaven" benchmark was reduced to DirectX-10 levels to make sure everyone had a fair chance. The Heaven benchmark is a free demo that makes use of the Unigine game engine, and is designed to show off the most detailed cobblestone and smoke you've ever seen a graphics card generate... in DirectX-11. Downgraded to DirectX-10, our test results (above) show trends similar to 3dMark Vantage, and keep the NVIDIA GeForce GTX480 within two frames of the Radeon HD5870.

Although Heaven 2.0 was recently released and used for our DirectX-11 tests, the benchmark results were extremely close to those obtained with Heaven 1.0 testing. Since only DX11-compliant video cards will properly run the Heaven benchmark, only those products that meet the requirements have been included. The ATI Radeon HD5850 establishes a 24.1 FPS baseline, which is increased to 29.9 FPS by the Radeon HD5870. Placed between ATI's best single-GPU video card and their dual-GPU Radeon HD5970, the NVIDIA GeForce GTX480 delivers 37.4 FPS. While the Unigine Heaven benchmark is a sample of what a game developer could do with the engine, as of now it's merely a synthetic benchmark in the same category as 3dMark Vantage.

Test Summary: NVIDIA has been keen to promote the Unigine Heaven benchmark tool, as it appears to deliver a fair comparison of DirectX-11 test results. The NVIDIA GeForce GTX480 outpaces the Radeon HD5870 by 25%, and trails the dual-GPU Radeon HD5970 by a mere 10 FPS. Reviewers like to say "Nobody plays a benchmark", but it seems evident that we can expect to see great things come from a tool this detailed. For now though, those details only come by way of DirectX-11 video cards.
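The percentage leads quoted throughout this review are relative differences in average FPS. The helpers below are a small sketch that assumes the lead is measured against the slower card. The article never states its formula, but this assumption reproduces the 25% Heaven figure above. It also shows why "A is X% faster than B" is not the same number as "B is X% slower than A". The function names are illustrative only.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster result A is than result B, relative to B."""
    return (fps_a / fps_b - 1.0) * 100.0


def percent_slower(fps_a: float, fps_b: float) -> float:
    """How much slower result A is than result B, relative to B."""
    return (1.0 - fps_a / fps_b) * 100.0


# Heaven 2.0 DirectX-11 averages quoted above (FPS).
gtx480, hd5870, hd5850 = 37.4, 29.9, 24.1
print(f"GTX480 vs HD5870: +{percent_faster(gtx480, hd5870):.1f}%")
print(f"HD5870 vs HD5850: +{percent_faster(hd5870, hd5850):.1f}%")
print(f"HD5870 vs GTX480: -{percent_slower(hd5870, gtx480):.1f}%")
```

Seen from the GTX480's side, the same gap is a lead of about 25%. Seen from the HD5870's side, it is a deficit of only about 20%, so the baseline should always be stated alongside the percentage.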

NVIDIA APEX PhysX Enhancements

Mafia II is the first PC video game title to include the new NVIDIA APEX PhysX framework, a powerful feature set that only GeForce video cards are built to deliver. While console versions will make use of PhysX, only the PC version supports NVIDIA's APEX PhysX physics modeling engine, which adds the following features: APEX Destruction, APEX Clothing, APEX Vegetation, and APEX Turbulence. PhysX helps make the movement of objects such as cloth and debris more fluid and lifelike. In this section, Benchmark Reviews details the differences with and without APEX PhysX enabled. We begin with a scene from the Mafia II benchmark test, which has the player pinned down behind a brick column as the enemy shoots at him. Examine the image below, which was taken with a Radeon HD 5850 configured with all settings turned to their highest and APEX PhysX support disabled:

No PhysX = Cloth Blending and Missing Debris

Notice from the image above that when PhysX is disabled there is no broken stone debris on the ground. Cloth from the foreground character's trench coat blends into his leg and remains in a static position relative to his body, as does the clothing on other (AI) characters. Now inspect the image below, which uses the GeForce GTX 460 with APEX PhysX enabled:

Realistic Cloth and Debris - High Quality Settings With PhysX

With APEX PhysX enabled, the cloth neatly sways with the contour of a character's body, and doesn't bleed into solid objects such as body parts. Additionally, APEX Clothing features improve realism by adding gravity and wind effects to clothing, allowing characters to look like they would in similar real-world environments.
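To give a feel for how cloth can react to gravity and wind, here is a minimal position-based (Verlet) sketch of a single hanging strip of cloth. It is built from particles joined by fixed-length links, with the top particle pinned. It is not the APEX Clothing API and does not reflect how NVIDIA implements it. In particular, wind is modelled as a constant sideways acceleration purely as an assumption for illustration, and every name in the code is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Particle:
    x: float
    y: float
    prev_x: float
    prev_y: float
    pinned: bool = False


def integrate(particles: List[Particle], dt: float, gravity: float = -9.81,
              wind: float = 2.0, damping: float = 0.99) -> None:
    """Position-Verlet step with constant gravity and a constant sideways wind."""
    for p in particles:
        if p.pinned:
            continue
        # Implicit velocity is the difference between current and previous position.
        vx = (p.x - p.prev_x) * damping
        vy = (p.y - p.prev_y) * damping
        p.prev_x, p.prev_y = p.x, p.y
        p.x += vx + wind * dt * dt
        p.y += vy + gravity * dt * dt


def satisfy_links(particles: List[Particle], rest: float, iterations: int = 10) -> None:
    """Repeatedly nudge neighbouring particles back to their rest distance."""
    for _ in range(iterations):
        for a, b in zip(particles, particles[1:]):
            dx, dy = b.x - a.x, b.y - a.y
            dist = math.hypot(dx, dy) or 1e-9
            diff = (dist - rest) / dist
            if a.pinned and b.pinned:
                continue
            if a.pinned:  # only b may move
                b.x -= dx * diff
                b.y -= dy * diff
            elif b.pinned:  # only a may move
                a.x += dx * diff
                a.y += dy * diff
            else:  # split the correction evenly between both particles
                a.x += dx * diff * 0.5
                a.y += dy * diff * 0.5
                b.x -= dx * diff * 0.5
                b.y -= dy * diff * 0.5


def hanging_strip(n: int, rest: float) -> List[Particle]:
    """A vertical strip of cloth pinned at its top particle."""
    return [Particle(0.0, -i * rest, 0.0, -i * rest, pinned=(i == 0)) for i in range(n)]


strip = hanging_strip(5, 1.0)
for _ in range(600):  # ten simulated seconds at 60 steps per second
    integrate(strip, 1 / 60)
    satisfy_links(strip, 1.0)
print(f"bottom of strip drifted to x = {strip[-1].x:.2f}")
```

The link constraints are what stop the strip from stretching or "bleeding" through itself. The integrator supplies the gravity and wind response.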

Burning Destruction Smoke and Vapor Realism

Flames aren't exactly new to video games, but smoke plumes and heat vapor that mimic realistic movement have never looked as real as they do with APEX Turbulence. Fire and explosions added into a destructible environment are a potent combination for virtual-world mayhem, showcasing the new PhysX APEX Destruction feature.

Exploding Glass Shards and Bursting Flames

NVIDIA PhysX has changed video game explosions into something worthy of cinema-level special effects. Bursting windows explode into several unique shards of glass, and destroyed crates burst into splintered kindling. Smoke swirls and moves as if there's an actual air current, and flames move out towards open space all on their own. Surprisingly, there is very little impact on FPS performance with APEX PhysX enabled on GeForce video cards, and very little penalty for changing from medium (normal) to high settings.

NVIDIA 3D-Vision Effects

Readers familiar with Benchmark Reviews have undoubtedly heard of NVIDIA GeForce 3D Vision technology; if not from our review of the product, then from the Editor's Choice Award it's earned or the many times I've personally mentioned it in our articles. Put simply: it changes the game. 2010 has been a break-out year for 3D technology, and PC video games are leading the way. Mafia II expands on the three-dimensional effects, and improves the 3D-Vision experience with out-of-screen effects. For readers unfamiliar with the technology, 3D-Vision is a feature only available to NVIDIA GeForce video cards.

The first thing gamers should be aware of is the performance penalty for using 3D-Vision with a high-demand game like Mafia II. Using a GeForce GTX 480 video card for reference, currently the most powerful single-GPU graphics solution available, we experienced frame rates of up to 33 FPS with all settings configured to their highest and APEX PhysX set to high. However, when 3D Vision is enabled, the frame rate usually decreases by about 50%. This is no longer a hard-and-fast rule, thanks to '3D Vision Ready' game titles that offer performance optimizations. Mafia II proved that the 3D Vision performance penalty can be as little as 30% with a single GeForce GTX 480 video card, or a mere 11% in an SLI configuration. NVIDIA Forceware drivers will guide players to make custom-recommended adjustments specifically for each game they play, but PhysX and anti-aliasing will still reduce frame rate performance.
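Stereo 3D renders a separate view for each eye, which is why a penalty of about 50% is the usual worst case. The sketch below turns the quoted penalties into frame rates and per-frame time budgets. It assumes the 30% Mafia II figure applies to the 33 FPS peak mentioned above. The article does not say which baseline the 30% was measured against, so treat the outputs as rough illustrations. The function names are hypothetical.

```python
def stereo_fps(mono_fps: float, penalty: float) -> float:
    """Frame rate after applying a fractional 3D Vision penalty (0.30 = 30%)."""
    if not 0.0 <= penalty < 1.0:
        raise ValueError("penalty must be in [0, 1)")
    return mono_fps * (1.0 - penalty)


def frame_time_ms(fps: float) -> float:
    """Milliseconds available to render each displayed frame."""
    return 1000.0 / fps


peak_mono = 33.0  # GTX 480 peak quoted above: all settings high, APEX PhysX high
for label, penalty in [("typical 50%", 0.50), ("Mafia II best case 30%", 0.30)]:
    fps = stereo_fps(peak_mono, penalty)
    print(f"{label}: {fps:.1f} FPS ({frame_time_ms(fps):.1f} ms per frame)")
```

Under these assumptions, the 50% rule would drop a 33 FPS peak to about 16.5 FPS, while the 30% case leaves about 23.1 FPS.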

Of course, the out-of-screen effects are worth every dollar you spend on graphics hardware. In the image above, an explosion sends the car's wheel and door flying into the player's face, followed by metal debris and sparks. When you're playing, this certainly helps to catch your attention... and when the objects become bullets passing by you, the added depth of field assists player awareness.

Combined with APEX PhysX technology, NVIDIA's 3D-Vision brings destructible walls to life. As enemies shoot at the brick column, dirt and dust fly past the player as stones tumble out towards you. Again, the added depth of field can help players pinpoint the origin of an enemy threat, and improve response time without sustaining 'confusion damage'.

NVIDIA APEX Turbulence, a new PhysX feature, already adds an impressive level of realism to games (such as Mafia II, pictured in this section). Watching plumes of smoke and flames spill out towards your camera angle helps put you right into the thick of the action.

NVIDIA 3D-Vision/3D-Vision Surround is the perfect addition to APEX PhysX technology, and capable video games will prove that these features reproduce lifelike scenery and destruction when they're used together. Glowing embers and fiery shards shooting past you seem very real when 3D-Vision pairs itself with APEX PhysX technology, and there's finally a good reason to overpower the PC's graphics system.

GeForce GTX480 Temperatures

Benchmark tests are always nice, so long as you care about comparing one product to another. But when you're an overclocker, or merely a hardware enthusiast who likes to tweak things on occasion, there's no substitute for good information. Benchmark Reviews has a very popular guide written on Overclocking Video Cards, which gives detailed instruction on how to tweak a graphics card for better performance. Of course, not every video card has overclocking headroom. Some products run so hot that they can't tolerate any higher temperatures than they already reach. This is why we measure the operating temperature of the video card products we test. FurMark does two things extremely well: it drives the thermal output of any graphics processor higher than any other application or video game, and it does so with consistency every time. While I have proved that FurMark is not a true benchmark tool for comparing video cards, it still works very well for comparing one product against itself at different stages. FurMark is very useful for comparing the same GPU against itself using different drivers or clock speeds, or for testing the stability of a GPU as it raises temperatures higher than any other program. But in the end, it's a rather limited tool.

NVIDIA GeForce GTX 480 Temperature Results

NVIDIA-supplied product specifications state that the GeForce GTX 480 has a maximum GPU thermal threshold of 105°C. This is identical to the previous-generation GeForce GTX 285, as well as the GeForce GTX 470 that shares the same GF100 graphics processor. To begin my testing, I use GPU-Z to measure the temperature at idle as reported by the GPU. Next I use FurMark to generate maximum thermal load and record GPU temperatures in high-power 3D mode. The ambient room temperature remained at a stable 20.0°C throughout testing, while the inner-case temperature hovered around 37°C. The NVIDIA GeForce GTX 480 Fermi video card recorded a very warm 53°C in idle 2D mode, and increased to 93°C in sustained full 3D mode. In comparison to the departing-generation GeForce GTX 285 and the competing ATI Radeon HD 5870, these temperatures are much higher at both idle and load. The GeForce GTX 285 hovers at 38°C, while the Radeon HD 5870 reports only 33°C at idle. Under full load, the GeForce GTX 285 climbs to 86°C, while the Radeon HD 5870 works at 80°C. Despite the 40nm process, GF100 runs hot. This could certainly affect overclocking projects, and may also increase the likelihood of graphics instability inside poorly ventilated computer cases. If you've already got a warm-blooded CPU in your computer system, take a look at our Best CPU Cooler Performance series and find a heatsink that will lower your internal temperatures and prolong component lifetime. Lowering the internal case temperature could give you an added edge for GPU overclocking projects, and it will also help overall system stability.

VGA Power Consumption

Life is not as affordable as it used to be, and items such as gasoline, natural gas, and electricity all top the list of resources which have exploded in price over the past few years.
Add to this the limited supply of non-renewable resources compared to current demand, and you can see that prices are only going to get worse. Planet Earth needs our help, and needs it badly. With forests becoming barren of vegetation and snow-capped poles quickly turning brown, the technology industry has a new attitude towards turning "green". I'll spare you the powerful marketing hype that gets sent from various manufacturers every day, and get right to the point: your computer hasn't been doing much to help save energy... at least up until now. To measure isolated video card power consumption, Benchmark Reviews uses the Kill-A-Watt EZ (model P4460) power meter made by P3 International. A baseline test is taken without a video card installed inside our computer system, which is allowed to boot into Windows and rest idle at the login screen before power consumption is recorded. Once the baseline reading has been taken, the graphics card is installed and the system is again booted into Windows and left idle at the login screen. Our final loaded power consumption reading is taken with the video card running a stress test using FurMark. Below is a chart with the isolated video card power consumption (not system total) displayed in Watts for each specified test product:

* Results are accurate to within +/- 5W.
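The isolation method described above is a simple subtraction: the wall reading with the card installed, minus the baseline reading without it. The sketch below carries the stated +/- 5W accuracy along with the result. Because the meter sits at the wall, the difference also includes power-supply conversion losses, so it is not identical to the card's own DC draw. The readings in the example are made-up placeholders, not measurements from this review, and the function name is hypothetical.

```python
from typing import Tuple

METER_TOLERANCE_W = 5.0  # the stated accuracy of +/- 5 W


def isolated_card_power(baseline_w: float, with_card_w: float,
                        tolerance_w: float = METER_TOLERANCE_W) -> Tuple[float, float, float]:
    """Card-only draw = (system with card) - (system without card), both at the wall.

    Returns (estimate, low, high) so the tolerance travels with the number.
    """
    delta = with_card_w - baseline_w
    return delta, delta - tolerance_w, delta + tolerance_w


# Hypothetical wall readings for illustration only (not measured values).
baseline = 100.0        # no video card, idle at the Windows login screen
idle_with_card = 150.0  # card installed, idle at the login screen
print(isolated_card_power(baseline, idle_with_card))  # (50.0, 45.0, 55.0)
```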

NVIDIA-supplied product specifications state a 250W Max Board Power (TDP), and suggest a 600W power supply unit. For power consumption tests, Benchmark Reviews utilizes the 80-PLUS GOLD certified OCZ Z-Series Gold 850W PSU, model OCZZ850. This power supply unit has been tested by Chroma System Solutions to provide over 90% typical efficiency. At idle the NVIDIA GeForce GTX 480 Fermi video card used 52 watts of electricity, which is perhaps among the highest idle power draws we've measured for DirectX-11 generation graphics cards. This level of consumption is slightly higher than the 48W we measured for the dual-GPU ATI Radeon HD 5970, and more than twice the demand of ATI's Radeon HD5870 and HD5850. Compared against the departing GeForce GTX 285, the new GTX 480 adds about 20 watts at idle. Fermi certainly has a big power appetite when it should be snacking on only a few watts. Once 3D applications begin to demand power from the GPU, electrical power consumption really begins to climb. Measured at full 3D load, the GeForce GTX 480 sets a new maximum power record and consumes 370 watts. Although Fermi features a 40nm fabrication process, there's nothing 'Green' about the power demand under load. Sure, the performance-per-watt ratio is higher on the GTX480 than the other cards, but it comes at a price. Putting things into perspective, though, the enthusiast PC gamer who can afford the NVIDIA GeForce GTX 480 probably isn't very worried about a few extra dollars on his power bill each month. To be fair, our GeForce GTX 480 was an engineering sample, and although several other reviewers I've discussed this with have experienced the same high power consumption, it's unclear if retail parts will be built from the same yield.

Editor's Opinion: Fermi GF100

NVIDIA heard the dinner bell ring many months ago when Microsoft introduced DirectX-11 alongside Windows 7, and they've been crawling to the table ever since.
Details of the new NVIDIA Fermi GPU architecture first leaked out to the Web as early as September 2009, which makes exactly half a year between myth and reality. ATI helped set the table with their Radeon HD 5000 series, and even enjoyed some appetizers while a few DX11 games were released, but NVIDIA managed to take a seat just in time for supper. Unfortunately for NVIDIA, ATI showed up wearing its best Sunday blues, while Fermi's suit is still at the cleaners. None of this really matters though, because now NVIDIA can eat as much as they want. My analogy plays out well when you consider the facts behind GF100 and the launch of NVIDIA's GeForce GTX470/480. AMD may not have launched with more than a few hundred full-fledged 40nm ATI Cypress-XT GPUs having all eighty texture units, but they made it to market first and created strong consumer demand for a limited supply of parts. NVIDIA decided on an alternate route, and binned their GPU yields based on streaming multiprocessors. The GF100 GPU is designed to have 16 streaming multiprocessors and 512 discrete cores, and while the Fermi architecture is still intact, there's one SMP disabled on the GeForce GTX 480, and two SMPs disabled on the GTX 470. The world has yet to see what the full 512 cores can accomplish, although NVIDIA is already revolutionizing the military with CUDA technology. So now ATI and NVIDIA are even-Steven in the running for DirectX-11, and all they need are video games to increase demand for their products. This becomes a real problem (for them both) because very few existing games demand any more graphics processing power than games demanded back in 2006. Video cards have certainly gotten bigger and faster, but video games have lacked fresh development. DirectX-10 helped the industry, but every step forward received two steps back because of the dislike for Microsoft's Windows Vista O/S.
Introduced with Windows 7 (and also available for Windows Vista with an update), enthusiasts now have DirectX-11 detail and special effects in their video games.
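The core counts implied by NVIDIA's SMP binning can be checked with simple arithmetic. A full GF100 has 512 cores across 16 streaming multiprocessors, so each SMP carries 32 cores. Disabling whole SMPs removes 32 cores at a time. The sketch below uses only the figures stated in the editorial; the function name is hypothetical.

```python
GF100_TOTAL_SMS = 16
CORES_PER_SM = 512 // GF100_TOTAL_SMS  # 32 cores per streaming multiprocessor


def enabled_cores(disabled_sms: int) -> int:
    """Cores left after disabling whole streaming multiprocessors."""
    if not 0 <= disabled_sms <= GF100_TOTAL_SMS:
        raise ValueError("disabled_sms out of range")
    return (GF100_TOTAL_SMS - disabled_sms) * CORES_PER_SM


for name, disabled in [("full GF100", 0), ("GeForce GTX 480", 1), ("GeForce GTX 470", 2)]:
    print(f"{name}: {GF100_TOTAL_SMS - disabled} SMPs, {enabled_cores(disabled)} cores")
```

This yields 512 cores for the full chip, 480 for the GTX 480, and 448 for the GTX 470. The GTX 480 name reflects exactly one disabled SMP.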

ASUS ENGTX480/2DI/1536MD5 GeForce GTX 480 Graphics Kit

Even if you're only after raw gaming performance and have no real-world interest in CUDA, there's reason to appreciate the GF100 GPU. New enhancement products, such as the NVIDIA GeForce 3D Vision Gaming Kit, double the demands on frame rate output and hence require more powerful graphics processing. This is where products like the GeForce GTX470 and GTX480 deliver the performance necessary to enjoy the extended gaming experience. I'm a huge fan of GeForce 3D-Vision, which is why it's earned our Editor's Choice Award, and Fermi delivers the power necessary to drive up to three monitors. The newly dubbed NVIDIA 3D-Vision Surround (stereo) requires three 3D-Vision capable LCD, projector, or DLP devices and offers bezel correction support. Alternatively, NVIDIA Surround (non-stereo) supports mixed displays with a common resolution/timing. Even some older game titles benefit from the Fermi GF100 GPU beyond just an increase in frame rates. For example, Far Cry 2 will receive 32x CSAA functionality native to the game, and future NVIDIA Forceware driver updates could add further new features to existing co-developed video games. Additionally, NVIDIA NEXUS technology brings CPU and GPU code development together in Microsoft Visual Studio 2008 for a shared process timeline. NEXUS also introduces the first hardware-based shader debugger. NVIDIA's GF100 is the first GPU to ever offer full C++ support, the programming language of choice among game developers. Fermi isn't for everyone. Many of NVIDIA's add-in card partners (what they call AICs) have already built inventory of the GeForce GTX 480. On 12 April 2010 ASUS will reveal the ENGTX480/2DI/1536MD5 GeForce GTX 480 graphics card kit, which online retailers are expected to price at around $500 for the 90-C3CH90-W0UAY0KZ SKU. The ASUS ENGTX470/2DI/1280MD5 kit (GeForce GTX 470) loses only one (more) SMP, but the price for their 90-C3CHA0-X0UAY0KZ kit drops to $350.
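The two ASUS price points above differ by exactly one SMP: about $350 for 14 SMPs (GTX 470) and about $500 for 15 SMPs (GTX 480). A straight-line extrapolation through them can be sketched as follows. It assumes price scales linearly with enabled SMPs, which is a strong simplification, and the function name is hypothetical.

```python
def extrapolate_price(sms_a: int, price_a: float, sms_b: int, price_b: float,
                      target_sms: int) -> float:
    """Straight-line price estimate through two (SMP count, price) points."""
    slope = (price_b - price_a) / (sms_b - sms_a)  # dollars per extra enabled SMP
    return price_b + slope * (target_sms - sms_b)


# ASUS kits: GTX 470 (14 SMPs) ~$350, GTX 480 (15 SMPs) ~$500.
print(extrapolate_price(14, 350.0, 15, 500.0, 16))  # 650.0
```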
Based on nothing more than these two prices, it seems that a full 16-SMP 512-core version could receive the GeForce "GTX-490" name and a price tag around $650. Sure to be an expensive enthusiast product if and when it ever gets made, the GeForce "GTX-490" could keep company with the recently announced $1130 Intel Core i7-980X 6-Core CPU BX80613I7980X.

Fermi is also the first GPU to support Error Correcting Code (ECC) based protection of data in memory. GPU computing users requested ECC to enhance data integrity in high performance computing environments, and it is a highly desired feature in areas such as medical imaging and large-scale cluster computing. Naturally occurring radiation can alter a bit stored in memory, resulting in a soft error. ECC technology detects and corrects single-bit soft errors before they affect the system. Fermi's register files, shared memories, L1 caches, L2 cache, and DRAM memory are ECC protected, making it not only the most powerful GPU for HPC applications, but also the most reliable.

In addition, Fermi supports industry standards for checking data during transmission from chip to chip. All NVIDIA GPUs support the PCI Express standard for CRC check with retry at the data link layer. Fermi also supports the similar GDDR5 standard for CRC check with retry (aka "EDC") during transmission of data across the memory bus.

The true potential of NVIDIA's Fermi architecture has yet to be seen. Sure, we've already poked around at the inner workings for our NVIDIA GF100 GPU Fermi Graphics Architecture article, but there's so much more that goes untested. Heading into April 2010, only a private alpha version of the Folding@Home client is available. Work unit performance on the GeForce GTX 480 should surpass ATI's Radeon HD 5870 without much struggle, but it's uncertain how much better it will be compared to the previous-generation GeForce GTX 285.
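The single-bit correction that ECC performs can be illustrated with a minimal sketch. The code below assumes a textbook Hamming(7,4) code, chosen only for clarity: it locates and flips back any single corrupted bit in a 7-bit word. It is not the specific ECC scheme NVIDIA implements in Fermi's register files, caches, or DRAM, and the function names are hypothetical.

```python
# Generic Hamming(7,4) single-error-correcting code (illustration only,
# not Fermi's actual ECC implementation).

def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword.

    Bit positions 1..7 are laid out as p1 p2 d1 p3 d2 d3 d4.
    """
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c):
    """Return (data_bits, error_position); error_position is 0 if no error."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    pos = s1 + 2 * s2 + 4 * s3  # syndrome is the 1-based index of the bad bit
    if pos:
        c[pos - 1] ^= 1  # correct the soft error by flipping the bit back
    return [c[2], c[4], c[5], c[6]], pos


# Simulate a radiation-induced soft error at bit position 5.
word = hamming74_encode([1, 0, 1, 1])
word[4] ^= 1
data, pos = hamming74_decode(word)
print(data, pos)  # [1, 0, 1, 1] 5
```

A plain Hamming code like this can be fooled by two simultaneous flips; production memory ECC typically adds an extra parity bit so that double-bit errors are at least detected.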
Until the GeForce GTX470/480 appears on retail shelves, and until a mature GeForce 400-series WHQL Forceware driver is publicly available, many of the new technologies introduced will remain untapped.

GeForce GTX-480 Conclusion

Although the rating and final score mentioned in this conclusion are made to be as objective as possible, please be advised that every author perceives these factors differently at various points in time. While we each do our best to ensure that all aspects of the product are considered, unforeseen market conditions and manufacturer changes often occur after publication that could render our rating obsolete. Please do not base any purchase solely on our conclusion, as it represents our product rating for the sample received, which may differ from retail versions. Benchmark Reviews begins our conclusion with a short summary for each of the areas that we rate.

Our performance rating considers how effectively the GeForce GTX480 DirectX-11 video card performs against competing products from both ATI and NVIDIA. While it's not easy to nail down exact ratios because of driver and game optimizations, the GeForce GTX480 consistently outperformed the ATI Radeon HD5870 and established itself as the most powerful single-unit graphics card available. Tested on the unbiased 3DMark Vantage DX10 benchmark, the GeForce GTX480 improves upon the GTX285 by nearly 61% at 1920x1200, and outperforms the Radeon HD5870 by 10%. When BattleForge calls high-strain SSAO into action, NVIDIA's GTX480 demonstrates how well Fermi is suited for DX11, improving upon the GeForce GTX285 by nearly 249% while trumping ATI's best single-GPU Radeon HD5870 by 61%. The GeForce GTX480 also proved itself a worthy adversary for the dual-GPU ATI Radeon HD5970, beating it in our Resident Evil 5, Far Cry 2, and BattleForge tests.
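The percentage gains quoted above are relative improvements over the older card's score. A minimal sketch of that arithmetic follows, assuming the usual definition (new minus old, divided by old); the helper names are hypothetical and the sample scores are placeholders, not results from this review.

```python
def percent_improvement(new_score: float, old_score: float) -> float:
    """Relative gain of new_score over old_score, in percent.

    Suitable for FPS or benchmark points, where higher is better.
    """
    if old_score <= 0:
        raise ValueError("old_score must be positive")
    return (new_score - old_score) / old_score * 100.0


def speedup_factor(percent: float) -> float:
    """Convert an 'X% better' figure into a multiple of the old score."""
    return 1.0 + percent / 100.0


# Hypothetical scores for illustration only.
print(percent_improvement(150.0, 100.0))   # 50.0
print(round(speedup_factor(249.0), 2))     # 3.49
```

Read this way, a card that improves on another by 249% produces roughly 3.5 times its frame rate, not 2.5 times.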

In regard to product appearance, there's no doubting that NVIDIA's GeForce GTX 480 will be referred to as the 'toughest' video card they've produced. The exposed heatsink and heat-pipes certainly stand out like exposed exhaust tips do on hot rods, and AICs (such as ASUS) have already decorated the plastic shroud with faux-Carbon Fiber finishes. It remains to be seen how creative the add-in card partners will be, but variety is guaranteed.

Riding the bleeding edge of technology, NVIDIA has built their GeForce GTX 480 (and likely the GTX 470, too) with solid construction. I'm always concerned about exposed electronics, so it surprises me that they didn't integrate a metal back-plate to serve as a heatsink and guard for the PCB components. The top side of the graphics card features an inlaid heatsink and four exposed heat-pipe rods with one more tucked inside the shroud, all of which get extremely hot. The Fermi GF100 GPU has been moved forward (towards the exhaust vents) by one inch when compared to the GTX 285, which allows the memory and power components to receive optimal cooling first.

While most consumers buy a discrete graphics card for the sole purpose of PC video games, there's a very small niche who expect extra features beyond fast video frame rates. NVIDIA is the market leader in GPGPU functionality, and it's no surprise to see CPU-level technology available in their GPU products. Fermi GF100 is also the first GPU to ever support Error Correcting Code (ECC), a feature that benefits both personal and professional users. Proprietary technologies such as NVIDIA Parallel DataCache and NVIDIA GigaThread Engine further add value to GPGPU functionality.

The NVIDIA GeForce GTX 480 video card will be officially available from retailers on 12 April 2010 or sooner. NVIDIA suggests a retail price of $500 for the GeForce GTX 480, and $350 for the GTX 470.
NewEgg currently lists the following at $499.99 each: the Zotac ZT-40101-10P, ASUS ENGTX480, EVGA 015-P3-1480-AR, MSI N480GTX-M2D15, Gigabyte GV-N480D5-15I-B, and even the PNY VCGGTX480XPB. XFX and BFG are not planning to co-brand the GeForce GTX 480, and they are also the only manufacturers to offer a lifetime product warranty.

In terms of value, there are several ways to look at the GeForce GTX 480 and compare it to its closest rival: the $420 ATI Radeon HD 5870. Some analysts take heat and power into consideration, but for this illustration we'll use only the FPS performance from our tests. Based on the in-game DirectX-11 BattleForge benchmark, gamers who play at 1680x1050 will pay $6.79 per frame of performance with the GeForce GTX 480, as opposed to $9.29 per frame with the Radeon HD 5870. Playing single-player Battlefield: Bad Company 2 at 1920x1200 with maximum settings, you can expect to pay $6.59 per frame with the GeForce GTX 480, or $6.77 with the Radeon HD 5870. Far Cry 2 costs $6.38 per frame for the GeForce GTX 480, while the ATI Radeon HD 5870 costs $8.05 per frame. The GeForce GTX 480 may cost more up front, but it delivers more for the money with regard to video game performance.

In summary, NVIDIA's GF100 Fermi GPU delivers more than just a giant boost to video frame rates over the previous generation; it also delivers GPGPU functionality that is usable both in and outside of video games. Performance increases over the GeForce GTX 285 were dramatic, often approaching 300%. Comparing only PC video game frame rate performance, the GTX480 and its 480-core GF100 GPU land decisively ahead of the Radeon HD5870 by 22-98% in DX10 tests, and 12-63% in DX11. As a whole, NVIDIA video cards generally performed better on the most popular video game titles, and there were at least two games where the GeForce GTX480 performed better than ATI's dual-GPU Radeon HD 5970.
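The price-per-frame figures in the value comparison come from dividing a card's price by its average frame rate. Below is a minimal sketch of that calculation; the function name is hypothetical, the prices are the ones stated in this review, and the FPS values are placeholders rather than our measured results.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid for each frame per second of average performance.

    Lower is better. Heat and power are deliberately ignored, as in the
    review's illustration.
    """
    if avg_fps <= 0:
        raise ValueError("avg_fps must be positive")
    return price_usd / avg_fps


# Prices from the review; FPS values are hypothetical placeholders.
cards = {
    "GeForce GTX 480": (500.0, 80.0),
    "Radeon HD 5870": (420.0, 60.0),
}

# Rank cards from best to worst value.
for name, (price, fps) in sorted(cards.items(), key=lambda kv: cost_per_frame(*kv[1])):
    print(f"{name}: ${cost_per_frame(price, fps):.2f} per frame")
# GeForce GTX 480: $6.25 per frame
# Radeon HD 5870: $7.00 per frame
```

Because the metric is price divided by FPS, the $500 card matches the $420 card's value once it is about 19% faster (500 / 420 is roughly 1.19), and anything beyond that makes it the better buy per frame.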
There are also added PhysX and DirectX-11 enhancements to help tip the scales well in NVIDIA's favor in terms of user experience.

Conclusion: NVIDIA is back on top again, right where most gamers like to see them.

EDITOR'S NOTE: Since testing NVIDIA's engineering sample for this article, we've received retail GeForce GTX-480 products that perform the same but require less power and produce less heat and noise. Read more in our Zotac GeForce GTX-480 Fermi Video Card review, which also includes SLI performance results.

Pros:

+ Fastest single-unit DX11 graphics accelerator available

Cons:

- Consumes 52W at idle and 370W under full load

Benchmark Reviews encourages you to leave comments (below), or ask questions and join the discussion in our Forum.


Comments

1. power usage, when they inevitably use a lot of power-sucking add-ons: printers, joysticks, and multiple monitors?

HOW STUPID does a noob have to be to whine about power from one component? PRETTY STUPID.

2. fan noise... as they crank their speakers to maximum, enjoy the 3D sound environment, or have their headphones on while they scream into the mic about who they just fragged to their teammates.

3. Heat... after years of reviews and building systems and selling, not once have I seen some idiot post that any card they were using expired from H E A T.

It's always some notional crazed ati fanboy geek preening about saving the earth, or wailing about less electricity cost than they jam down their throats in Cheetos and soda in a single frag session.

AVOID high end cards, and get yer $299 netbook tweaked for Pogo online.

I don't see "blazing fast" anywhere, but I see "blazing hot" as a better descriptor. In games the 5970 is still killing it, and using about 70 watts less power. I would have to choose a 5970 over this new nVidia card at the moment. We waited, and waited, and all we got was a campfire that needs a lot of wood to burn.

The heat is pretty bad, however. Nvidia says it tested the GPU extensively at that load temperature.

The other problem the 5970 has is microstuttering. They've done an analysis that shows spikes even when vsync is turned on. Aside from heat and power consumption, the 480 is a pretty decent choice in gaming hardware. People who've left reviews of it on newegg have said that it isn't as loud as people make it out to be.

With those two the ati card gets stomped on every level, and the 470 beats the reds' bigger brother often enough.

All the bleating red roosters won't stop the awesome NV sales numbers.

For now, it's red rooster cry and lie time.

Stomp the three toed chicken feet, and bleat like a mad modder, and all for nought, the SUPERSTOMP has already occurred, and no amount of red rooster bs can change that. (you probably believe yourself to keep from weeping)

You should go test for yourself before trying to discredit somebody else's hard work.

- Always hits 97C regardless of case cooling when gaming.

- Super LOUD Fan (65 dB, +/- 2 dB).

- Never buy without Lifetime Warranty (source: AnandTech)

- 10% to 15% faster in some cases vs. HD 5870 (source: 15+ review sites)

- Price/Performance stinks beyond belief (source: 15+ review sites)

- 250W is complete BS, it's 300W+, NVIDIA lied (source: Toms Hardware)

- Nvidia's Fermi GTX480 is Broken, Unfixable, Hot, Slow, Late and Un-manufacturable.

this card has a single gpu, and the difference between it and the 5970 (2 gpu) is only 4 to 8 frames. the nvidia is only a single gpu, u noob :S

nvidia can shove this up their ass. whats the point of this failure card?

wow it's a little faster than the hd5970.

nowhere near fast enough. it's sad

Perhaps by coincidence XFX and BFG are not listing the GeForce GTX 480 at NewEgg; and are the only manufacturers to offer a lifetime product warranty.

Check here:

#digitalstormonline.com/comploadsaved.asp?id=395218

YOU MUST BUY A V-CARD by its speed, not by its MONO GPU property, as NVIDIA said before with their SLI motto, "Two is Better than One".

The merger of AMD and ATI gives ATI a larger R&D division and independent fabs, while NVIDIA uses TSMC's fabs to produce GPUs.

ATI was once a Buggy Videocard Manufacturer causing color shifts on my monitor, and now it is becoming a hard competitor of NVIDIA.

Another Comment:

Intel must buy NVIDIA to beat its one hard competitor, AMD.

Anybody buying this piece of garbage is pure NVIDIA FANbOy LOL

People who buy it want the fastest single gpu but will have to factor in heat and power consumption. They wouldn't be fanboys, since only fanboys buy from one company and ignore facts so they can diss the competition, like you did.

Based on what? It wasn't this article, because the GTX 480 beat the Radeon HD5870 by an average of 25%.

| | 480gtx | HD5970 |
|---|---|---|
| GPU | Single | Basically dual |
| Memory | 1.5gb | 1gb x 2 (2gb) |
| Core Clock | 725MHz | 725MHz |
| Memory Interface | 384-bit | (256 x 2)-bit |

$250 for more memory, less heat, less noise, less power consumption.

my gtx 285 will do the job for a while to come. to me, when I'm running at 50fps+, why on earth would I need to run 90% faster? it's a waste of money.

Despite the 40nm process, three key features that will be based on Evergreen have been put on steroids. Please observe below:

- 40nm Process

- 2400 Stream Processing Units (1)

- 256 Texture Address Units (2)

- 128 ROPs (Rasterization Operator Units) (3)

AMD plans to differentiate this refresh line from the past HD 4890 naming scheme due to its architectural changes. So don't expect the name HD 5890.