XFX Radeon HD5770 Video Card HD-577A-ZN

Written by Bruce Normann

Wednesday, 28 October 2009

XFX Radeon HD 5770 Review

OK, we're through playing nice with these new ATI 5xxx video cards. The corporate logo for XFX says: "play hard.", so Benchmark Reviews is going to take that motto to heart and show what these cards can really do. Almost every single competing card runs at higher than reference clock rates, and they all come that way from the factory. Every single card I compared the HD5770 to, when we reviewed the reference design from ATI, was factory overclocked. That's just the way it is with video cards built on mature GPU technology. Well, every ATI 5xxx card can easily be overclocked using the standard driver package from ATI, Catalyst Control Center, since it includes ATI Overdrive. So let's do it, let's compare apples to apples, and as a bonus, I'll throw in some CrossfireX results, too.

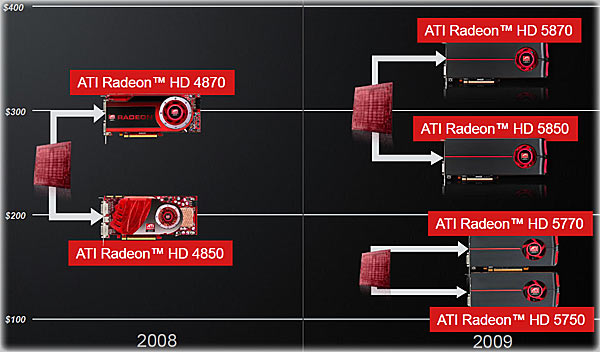

The HD57xx mid-range cards compete in a crowded market with several competitors overlapping into their performance and price zones. There's a complex mix of old standards and new stars, and of course, each vendor has a different take on factory overclocking. Also, the market shifts every day; sometimes imperceptibly, sometimes radically. The launch of the HD5xxx chips was a radical shift and we're still seeing ripples in the marketplace as the full performance capabilities of the new boards are explored. We looked at the standard performance envelope previously; now let's explore a bit beyond those boundaries.

| Product Series | XFX Radeon HD5750 (HD-575X-ZN) | XFX Radeon HD5770 (HD-577A-ZN) | XFX Radeon HD5770 (HD-577A-ZN) OC | ASUS GeForce GTX 260 (ENGTX260 MATRIX) | MSI GeForce GTX 275 (N275GTX Twin Frozr OC) | ASUS GeForce GTX 285 (GTX285 MATRIX) |
| --- | --- | --- | --- | --- | --- | --- |
| Stream Processors | 720 | 800 | 800 | 216 | 240 | 240 |
| Core Clock (MHz) | 700 | 850 | 930 | 576 | 666 | 662 |
| Shader Clock (MHz) | N/A | N/A | N/A | 1242 | 1476 | 1476 |
| Memory Clock (MHz) | 1150 | 1200 | 1250 | 999 | 1161 | 1242 |
| Memory Amount | 1024MB - GDDR5 | 1024MB - GDDR5 | 1024MB - GDDR5 | 896MB - GDDR3 | 896MB - GDDR3 | 1024MB - GDDR3 |
| Memory Interface | 128-bit | 128-bit | 128-bit | 448-bit | 448-bit | 512-bit |

- XFX Radeon HD5750 (HD-575X-ZN - Catalyst 8.66.6_Beta1)
- XFX Radeon HD5770 (HD-577A-ZN - Catalyst 8.66.6_Beta1)
- ASUS GeForce GTX 260 (ENGTX260 MATRIX - Forceware v190.62)
- MSI GeForce GTX 275 (N275GTX Twin Frozr OC - Forceware v190.62)
- ASUS GeForce GTX 285 (GTX285 MATRIX - Forceware v190.62)

Overclocking the Radeon HD5770

Time was, overclocking was sort of a mysterious pastime of a small group of fanatical techies. Then the Intel Core 2 Duo CPU hit the market and suddenly overclocking became less of a dangerous adventure and more of a birthright. In the video card world, it was not the arrival of overclocking-friendly hardware that broke the ice; it was software utilities. They were supplied initially by third parties, then by the card vendors themselves, and now they come direct from the GPU manufacturer. For the first couple of years, some had pretty awful interfaces and some didn't work too reliably, but these days the software from all three sources is of decent quality. I've reviewed the ASUS iTracker and MSI products and they were both useful, and there's always the old stand-by, RivaTuner.

After reviewing my options, since XFX does not publish their own version of an overclocking utility for the new ATI 5xxx series of cards, I used Catalyst Control Center supplied by ATI, which works with every 5xxx series card. It doesn't allow the user to change GPU voltage, but it does have a fan control setting integrated in the application. I bumped the fan up to 100% at the beginning; better to explore the overclocking limits with all available cooling than to overcook the card while testing. There is an auto tune feature included, but I'm the impatient type, who likes full control, so I just dove right in and started cranking things up.

One of the anxious moments every overclocker has is when the CPU, GPU, or memory locks up or starts spitting out random bits. The second anxious moment follows soon after, when it's time to restart the system. This is especially true with video cards, because they don't provide access to BIOS screens during POST and boot sequences. Most cards don't even have a way of resetting the BIOS, with a hardware jumper or otherwise. So, there is a possibility of turning that shiny new hunk of high tech into what is known in the industry as a "brick". Fortunately, although I crashed the XFX HD5770 several times while pushing it over the limit, it rebounded each time and asked for more... errr, less. Eventually the XFX HD5770 and I came to the conclusion that a 930 MHz GPU clock and a 1250 MHz memory clock would be stable in all gaming and benchmarking situations. I had hoped for a bit more speed on the memory, but I think a few extra millivolts are required, and that capability is not currently available.
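To put that overclock in perspective, here is a minimal Python sketch that turns the stock and overclocked clocks into percentage gains. The clock values come from the comparison table; the function name `overclock_gain_pct` is just an illustrative choice.

```python
def overclock_gain_pct(stock_mhz: float, overclocked_mhz: float) -> float:
    """Return the clock increase over stock as a percentage."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100.0


# Stock HD5770 clocks vs. the stable overclock reported in this review.
core_gain = overclock_gain_pct(850, 930)
memory_gain = overclock_gain_pct(1200, 1250)

print(f"Core clock:   +{core_gain:.1f}%")
print(f"Memory clock: +{memory_gain:.1f}%")
```

The core gains a bit over 9%, while the memory gains only about 4%, which fits the observation that the memory ran out of headroom first.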

Leaving well enough alone is NOT the way most gamers and computer enthusiasts think; it's more like, "If it ain't broke, crank it up some more." So, we did that already, what now? The answer for the last several years has been, "Buy another one and hook 'em together." CrossfireX and a spare HD5770 came to the rescue, and the combination did not disappoint. The 5770 scales very well in CrossfireX, and the installation and setup could not have been any easier. Once the system was running with one HD5770, I shut it down, plugged the second card in, attached the flexi-bridge, and restarted. Once Windows started up, Catalyst Control Center popped open and informed me that I had two GPUs running in CrossfireX, and asked if that was alright. I said yes; who wouldn't? From that point on, it was seamless, and the performance was amazing, even with stock, reference clocks. Once you see the results, I think you'll agree that this is a giant killer, in the ATI tradition. Remember the HD4770 in CrossfireX?

Now we're ready to begin testing video game performance on these video cards, so please continue to the next page as we start off with our 3DMark Vantage results.

3DMark Vantage Benchmark Results

3DMark Vantage is a computer benchmark by Futuremark (formerly named MadOnion) that measures the DirectX 10 3D gaming performance of graphics cards. A 3DMark score is an overall measure of your system's 3D gaming capabilities, based on comprehensive real-time 3D graphics and processor tests. By comparing your score with those submitted by millions of other gamers you can see how your gaming rig performs, making it easier to choose the most effective upgrades or find other ways to optimize your system.

There are two graphics tests in 3DMark Vantage: Jane Nash (Graphics Test 1) and New Calico (Graphics Test 2). The Jane Nash test scene represents a large indoor game scene with complex character rigs, physical GPU simulations, multiple dynamic lights, and complex surface lighting models. It uses several hierarchical rendering steps, including for water reflection and refraction, and physics simulation collision map rendering. The New Calico test scene represents a vast space scene with lots of moving but rigid objects and special content like a huge planet and a dense asteroid belt.

At Benchmark Reviews, we believe that synthetic benchmark tools are just as valuable as video games, but only so long as you're comparing apples to apples. Since the same test is applied in the same controlled method with each test run, 3DMark is a reliable tool for comparing graphics cards against one another.

1680x1050 is rapidly becoming the new 1280x1024. More and more widescreen monitors are being sold with new systems or as upgrades to existing ones. Even in tough economic times, the tide cannot be turned back; screen resolution and size will continue to creep up. Using this resolution as a starting point, the maximum settings applied to 3DMark Vantage include 8x Anti-Aliasing, 16x Anisotropic Filtering, all quality levels at Extreme, and Post Processing Scale at 1:2.
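For a rough sense of how much extra work each step up in resolution asks of a video card, the sketch below compares raw pixel counts for the resolutions discussed in this review. Pixel count is only a first-order proxy for rendering load, and the helper name `pixel_count` is illustrative.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


RESOLUTIONS = [(1280, 1024), (1680, 1050), (1920, 1200)]
baseline = pixel_count(1280, 1024)

for width, height in RESOLUTIONS:
    pixels = pixel_count(width, height)
    ratio = pixels / baseline
    print(f"{width}x{height}: {pixels:,} pixels ({ratio:.2f}x of 1280x1024)")
```

Moving from 1280x1024 to 1680x1050 adds roughly a third more pixels per frame, and 1920x1200 pushes about three quarters more than the old standard.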

No, you're not seeing double....well, actually you are seeing double. As in double HD5770 cards linked in CrossfireX; I thought that big yellow bar on the right would get your attention. Let's get this out of the way right now. Two HD5770 cards can beat up on just about any single-GPU solution out there. In every benchmark, there was no contest between the two HD5770 cards and the GTX285 card under test. You'll see. Now, back to our regularly scheduled program.

The two test scenes in 3DMark Vantage provide a varied and modern set of challenges for the video cards and their subsystems, as described above. The results always produced higher frame rates for GT1 and so far, I haven't seen any curveball results like I used to see with 3DMark06. The XFX Radeon HD5770 finally comes to grips with the GTX260 card in both GT1 and GT2 when it's overclocked. In both test cases, the HD5770 easily beats the HD5750; there's no pretending that it's close, the extra stream processors in the HD5770 really do make a difference. The GTX275 and GTX285 pull away from the middle of the pack, as they should for the price difference. Twinned HD5770s take the prize home, though.
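As a back-of-the-envelope check on the gap between the two Radeon cards, the sketch below multiplies stream processor count by core clock. This is a simplifying assumption, not a measured result: it treats shader throughput as proportional to that product and ignores memory bandwidth, drivers, and everything else. It also only makes sense within the same GPU architecture, so the NVIDIA cards are left out.

```python
def relative_shader_throughput(stream_processors: int, core_clock_mhz: int) -> int:
    """Crude throughput proxy: stream processors times core clock (arbitrary units)."""
    return stream_processors * core_clock_mhz


# Values from the comparison table.
hd5750 = relative_shader_throughput(720, 700)
hd5770 = relative_shader_throughput(800, 850)
hd5770_oc = relative_shader_throughput(800, 930)

stock_advantage_pct = (hd5770 / hd5750 - 1) * 100
oc_advantage_pct = (hd5770_oc / hd5750 - 1) * 100

print(f"HD5770 vs HD5750:    +{stock_advantage_pct:.1f}%")
print(f"HD5770 OC vs HD5750: +{oc_advantage_pct:.1f}%")
```

On paper the stock HD5770 has roughly a third more shader throughput than the HD5750, which is consistent with the clear separation seen in both graphics tests.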

At a higher screen resolution of 1920x1200, the story changes a bit, as the overclocked HD5770 pulls out a small lead on the GTX260 in both tests. The 128-bit memory bus doesn't seem to hurt the card with higher resolutions. The HD5750 falls well behind in this company, as you might expect. It's worth keeping it in the testing mix, just to retain a sense of perspective. We need to look at actual gaming performance to verify these results, so let's take a look in the next section, at how these cards stack up in the standard bearer for gaming benchmarks, Crysis.
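The reason a 128-bit bus can keep up is easier to see with some arithmetic on theoretical memory bandwidth. The sketch below assumes the table's memory clocks are base clocks, that GDDR3 moves two transfers per clock and GDDR5 four, and it ignores real-world efficiency. The function name and the card list are illustrative.

```python
# Assumed transfers per memory clock cycle for each memory type.
TRANSFERS_PER_CLOCK = {"GDDR3": 2, "GDDR5": 4}


def bandwidth_gb_s(memory_clock_mhz: float, bus_width_bits: int, memory_type: str) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    transfers_per_second = memory_clock_mhz * 1e6 * TRANSFERS_PER_CLOCK[memory_type]
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# (name, memory clock in MHz, bus width in bits, memory type) from the comparison table.
CARDS = [
    ("HD5770", 1200, 128, "GDDR5"),
    ("HD5770 OC", 1250, 128, "GDDR5"),
    ("GTX 260", 999, 448, "GDDR3"),
    ("GTX 285", 1242, 512, "GDDR3"),
]

for name, clock, bus, mem_type in CARDS:
    print(f"{name}: {bandwidth_gb_s(clock, bus, mem_type):.1f} GB/s")
```

Under these assumptions GDDR5 recovers a good part of what the narrow bus gives up, although the wide-bus GeForce cards still hold a clear lead in raw bandwidth.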

Crysis Benchmark Results

Crysis uses a new graphics engine: the CryENGINE2, which is the successor to Far Cry's CryENGINE. CryENGINE2 is among the first engines to use the Direct3D 10 (DirectX 10) framework, but can also run using DirectX 9, on Vista, Windows XP and the new Windows 7. As we'll see, there are significant frame rate reductions when running Crysis in DX10. It's not an operating system issue; DX9 works fine in Windows 7, but DX10 cuts the frame rates in half.

Roy Taylor, Vice President of Content Relations at NVIDIA, has spoken on the subject of the engine's complexity, stating that Crysis has over a million lines of code, 1GB of texture data, and 85,000 shaders. To get the most out of modern multicore processor architectures, CPU intensive subsystems of CryENGINE 2 such as physics, networking and sound, have been re-written to support multi-threading.

Crysis offers an in-game benchmark tool, which is similar to the one in World in Conflict. This short test places a high amount of stress on a graphics card, since there are so many landscape features rendered. For benchmarking purposes, Crysis can mean trouble as it places a high demand on both GPU and CPU resources. Benchmark Reviews uses the Crysis Benchmark Tool by Mad Boris to test frame rates in batches, which allows the results of many tests to be averaged.
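Averaging a batch of runs is simple enough to sketch in a few lines of Python. This is not how the Crysis Benchmark Tool works internally, just an illustration of the idea; the sample numbers are placeholders, not measurements from this review, and `summarize_runs` is a hypothetical helper.

```python
from statistics import mean, pstdev


def summarize_runs(fps_runs):
    """Average a batch of per-run FPS results and report the run-to-run spread."""
    return {
        "runs": len(fps_runs),
        "mean_fps": mean(fps_runs),
        "stdev_fps": pstdev(fps_runs),
    }


# Placeholder values for illustration only.
sample_batch = [24.0, 25.0, 26.0]
summary = summarize_runs(sample_batch)

print(f"{summary['runs']} runs, mean {summary['mean_fps']:.1f} FPS, "
      f"spread {summary['stdev_fps']:.2f} FPS")
```

Reporting the spread alongside the mean makes it easier to tell whether a 1 or 2 FPS gap between two cards is a real difference or just run-to-run noise.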

Low-resolution testing allows the graphics processor to plateau its maximum output performance, and shifts demand onto the other system components. At the lower resolutions Crysis will reflect the GPU's top-end speed in the composite score, indicating full-throttle performance with little load. This makes for a less GPU-dependent test environment, but it is sometimes helpful in creating a baseline for measuring maximum output performance. At the 1280x1024 resolution used by 17" and 19" monitors, the CPU and memory have too much influence on the results to be used in a video card test. At the widescreen resolutions of 1680x1050 and 1920x1200, the performance differences between video cards under test are mostly down to the cards.

In my review of the reference HD5770, I said I was shocked by these DirectX 10 numbers, but now something has changed. Now I see how to get decent frame rates in this particularly challenging situation: start with an ATI 5770, at a minimum, and put at least two of them in CrossfireX. Running XP-based systems and DirectX 9, the latest generation of video cards was starting to get a handle on Crysis. Certainly, in this test, with no anti-aliasing dialed in, any of the tested cards running in DX9 provided a usable solution. Now, in DX10 only the highest performing boards get close to an average frame rate of 30FPS. It seems like we've gone back in time, back to when only two or three very expensive video cards could run Crysis with all the eye candy turned on. I guess we'll have to wait until CryEngine3 comes out, and is optimized for the current generation of graphics APIs.

Looking at the XFX HD5770 running reference clocks and the overclocked results, we can see that this card does pretty well with Crysis. Even in stock configuration it beats the GTX260, and the overclock pushes the lead out a bit. They both fail to catch the GTX 275, though, hanging about 3 FPS behind. Putting two of them in CrossfireX crushes the competition, at a pretty reasonable price, too. Interestingly, CrossfireX doesn't scale all that well in this benchmark, but it's enough to sail well past the GTX285 with a 7 FPS lead, and it's the only thing that will get you past 30 FPS at 1920x1200.

Add in some anti-aliasing, 4X to be exact, and all the cards take about a 5 FPS hit. Although the HD5770 still beats out the GTX260, even with stock clocks, it's a hollow victory. No one wants to play this game at 17 or 20 FPS; it's just too choppy. Either throw more hardware at it, or fall back to DirectX 9, which I think makes the most sense. I'd rather have smooth frame rates and 4X or 8X anti-aliasing than the small detail improvements DX10 brings to this game.
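Why 17 or 20 FPS feels choppy is easier to see in terms of frame time, the number of milliseconds each frame stays on screen. A minimal sketch of the conversion follows; the 60 FPS entry is added purely for comparison.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on each frame, in milliseconds."""
    return 1000.0 / fps


for fps in (17, 20, 30, 60):
    print(f"{fps} FPS -> {frame_time_ms(fps):.1f} ms per frame")
```

At 17 FPS each frame lingers for almost 59 ms, nearly twice as long as at the 30 FPS mark used as a playability threshold in this review.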

In our next section, Benchmark Reviews tests with Devil May Cry 4 Benchmark. Read on to see how a blended high-demand GPU test with low video frame buffer demand will impact our test products.

Devil May Cry 4 Benchmark

Devil May Cry 4 was released for the PC platform in 2008 as the fourth installment to the Devil May Cry video game series. DMC4 is a direct port from the PC platform to console versions, which operate at the native 720P game resolution with no other platform restrictions. Devil May Cry 4 uses the refined MT Framework game engine, which has been used for many popular Capcom game titles over the past several years.

MT Framework is an exclusive seventh generation game engine built to be used with games developed for the PlayStation 3 and Xbox 360, and PC ports. MT stands for "Multi-Thread", "Meta Tools" and "Multi-Target". Capcom originally meant to use an outside engine, but none matched its specific requirements in performance and flexibility. Games using the MT Framework are originally developed on the PC and then ported to the other two console platforms.

On the PC version a special bonus called Turbo Mode is featured, giving the game a slightly faster speed, and a new difficulty called Legendary Dark Knight Mode is implemented. The PC version also has both DirectX 9 and DirectX 10 modes for Windows XP, Vista, and Windows 7 operating systems.

It's always nice to be able to compare the results we receive here at Benchmark Reviews with the results you test for on your own computer system. Usually this isn't possible, since settings and configurations make it nearly impossible to match one system to the next; plus you have to own the game or benchmark tool we used.

Devil May Cry 4 fixes this, and offers a free benchmark tool available for download. Because the DMC4 MT Framework game engine is rather low-demand for today's cutting edge video cards, Benchmark Reviews uses the 1920x1200 resolution to test with 8x AA (highest AA setting available to Radeon HD video cards) and 16x AF.

Devil May Cry 4 is not as demanding a benchmark as it used to be. Only scenes #2 and #4 are worth looking at from the standpoint of trying to separate the fastest video cards from the slower ones. Still, it represents a typical environment for many games that our readers still play on a regular basis, so it's good to see what works with it and what doesn't. Any of the tested cards will do a credible job in this application, and the performance scales in a pretty linear fashion. You get what you pay for when running this game, at least for benchmarks. This is one time where you can generally use the maximum available anti-aliasing settings, so NVIDIA users should feel free to crank it up to 16X. The DX10 "penalty" is of no consequence here.

The HD5770 definitely holds its own in this benchmark. The stock clocked XFX HD5770 put in an absolutely equal performance to the GTX260-216, and an overclock puts it solidly between the 260 and the 275. All of this is way above the minimum frame rates for smooth looking graphics, and I have to say this is one game where the DirectX10 version has a noticeable visual advantage over the DX9 version. The second and the fourth scene, especially, are a joy to observe at these frame rates, with all the settings turned up to max. The CrossfireX pair turned in absolutely insane frame rates, well beyond what is required for this game.

Our next benchmark of the series is for a very popular FPS game that rivals Crysis for world-class graphics.

Far Cry 2 Benchmark Results

Ubisoft has developed Far Cry 2 as a sequel to the original, but with a very different approach to game play and story line. Far Cry 2 features a vast world built on Ubisoft's new game engine called Dunia, meaning "world", "earth" or "living" in Farsi. Far Cry 2 is set in a fictional Central African landscape, in the modern day.

The Dunia engine was built specifically for Far Cry 2 by the Ubisoft Montreal development team. It delivers realistic semi-destructible environments, special effects such as dynamic fire propagation and storms, real-time night-and-day sunlight and moonlight cycles, a dynamic music system, and non-scripted enemy A.I. actions.

The Dunia game engine takes advantage of multi-core processors as well as multiple processors and supports DirectX 9 as well as DirectX 10. Only 2 or 3 percent of the original CryEngine code is re-used, according to Michiel Verheijdt, Senior Product Manager for Ubisoft Netherlands. Additionally, the engine is less hardware-demanding than CryEngine 2, the engine used in Crysis. However, it should be noted that Crysis delivers greater character and object texture detail, as well as more destructible elements within the environment. For example, trees break into many smaller pieces and buildings break down into their component panels. Far Cry 2 also supports the amBX technology from Philips. With the proper hardware, this adds effects like vibrations, ambient colored lights, and fans that generate wind effects.

There is a benchmark tool in the PC version of Far Cry 2, which offers an excellent array of settings for performance testing. Benchmark Reviews used the maximum settings allowed for our tests, with the resolution set to 1920x1200. The performance settings were all set to 'Very High', Render Quality was set to 'Ultra High' overall quality level, 8x anti-aliasing was applied, and HDR and Bloom were enabled. Of course DX10 was used exclusively for this series of tests.

Although the Dunia engine in Far Cry 2 is slightly less demanding than the CryEngine 2 engine in Crysis, the strain appears to be extremely close. In Crysis we didn't dare to test AA above 4x, whereas we use 8x AA and 'Ultra High' settings in Far Cry 2. Here we also see the opposite effect when switching our testing to DirectX 10. Far Cry 2 seems to have been optimized, or at least written with a clear understanding of DX10 requirements.

Using the short 'Ranch Small' time demo (which yields the lowest FPS of the three tests available), all the tested products are capable of producing playable frame rates with the settings all turned up. The Radeon HD5750 hangs close enough to its big brother, the HD5770, in this game to consider it as a lower cost alternative. Although the Dunia engine seems to be optimized for NVIDIA chips, the improvements ATI incorporated in their latest GPUs are enough to allow this game to be played with a mid-range card. The overclocked XFX HD5770 gains a few useful FPS over the stock settings, and as usual, the Crossfired pair puts up stunning numbers that are well past the GTX285 card.

Our next benchmark of the series puts our collection of video cards against some fresh graphics in the newly released Resident Evil 5 benchmark.

Resident Evil 5 Benchmark Results

PC gamers get the ultimate Resident Evil package in this new PC version with exclusive features including NVIDIA's new GeForce 3D Vision technology (wireless 3D Vision glasses sold separately), new costumes and a new mercenaries mode with more enemies on screen. Delivering an infinite level of detail, realism and control, Resident Evil 5 is certain to bring new fans to the series. Incredible changes to game play and the world of Resident Evil make it a must-have game for gamers across the globe.

Years after surviving the events in Raccoon City, Chris Redfield has been fighting the scourge of bio-organic weapons all over the world. Now a member of the Bio-terrorism Security Assessment Alliance (BSAA), Chris is sent to Africa to investigate a biological agent that is transforming the populace into aggressive and disturbing creatures. New cooperatively-focused game play revolutionizes the way that Resident Evil is played. Chris and Sheva must work together to survive new challenges and fight dangerous hordes of enemies.

From a gaming performance perspective, Resident Evil 5 promises a "Next Generation of Fear": groundbreaking graphics that utilize an advanced version of Capcom's proprietary game engine, MT Framework, which powered the hit titles Devil May Cry 4, Lost Planet and Dead Rising. The game uses a wider variety of lighting to enhance the challenge. Under the banner "Fear Light as much as Shadow", lighting effects provide a new level of suspense as players attempt to survive in both harsh sunlight and extreme darkness. As usual, we maxed out the graphics settings on the benchmark version of this popular game, to put the hardware through its paces. Much like Devil May Cry 4, it's relatively easy to get good frame rates in this game, so take the opportunity to turn up all the knobs and maximize the visual experience.

The Resident Evil 5 benchmark tool provides a graph of continuous frame rates and averages for each of four distinct scenes. In addition it calculates an overall average for the four scenes. The overall average is what we report here, as the scenes were pretty evenly matched and no scene had results that were so far above or below the average as to present a unique situation.
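One way to sanity-check that reporting only the overall average hides nothing is to look at how far each scene strays from it. The sketch below assumes the overall figure is a plain arithmetic mean of the four scene averages, which the tool itself may or may not use; the scene values are placeholders, not results from this review.

```python
from statistics import mean


def scene_deviation_pct(scene_averages):
    """Return the overall mean and each scene's deviation from it, in percent."""
    overall = mean(scene_averages)
    deviations = [(scene - overall) / overall * 100.0 for scene in scene_averages]
    return overall, deviations


# Placeholder per-scene averages for illustration only.
overall, deviations = scene_deviation_pct([80.0, 70.0, 90.0, 80.0])

print(f"Overall average: {overall:.1f} FPS")
for number, deviation in enumerate(deviations, start=1):
    print(f"Scene {number}: {deviation:+.1f}% vs overall")
```

If one scene sat, say, 40% away from the overall mean, it would deserve its own chart rather than being folded into a single number.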

The 1680x1050 test results from this game scale almost as linearly as a synthetic benchmark. The one "lump" in the graph is the overclocked XFX HD5770, standing just a little taller than its brothers on the right and left. The HD5770 trails the GTX260 in its stock form, but once we turn up the clocks to even up the odds, the HD5770 pulls even with the already overclocked GTX260. The GTX275 and 285 do very well in this game, beating both new ATI offerings easily, at a substantial price penalty, though. Once again, two 5770s in Crossfire clean house with frame rates that are beyond reproach.

Our next benchmark of the series features a strategy game with photorealistic graphics: World in Conflict.

World in Conflict Benchmark Results

The latest version of Massive's proprietary MassTech engine utilizes DX10 technology and features advanced lighting and physics effects, and allows for a full 360 degree range of camera control. Massive's MassTech engine scales down to accommodate a wide range of PC specifications; if you've played a modern PC game within the last two years, you'll be able to play World in Conflict.

World in Conflict's FPS-like control scheme and 360-degree camera make its action-strategy game play accessible to strategy fans and fans of other genres... if you love strategy, you'll love World in Conflict. If you've never played strategy, World in Conflict is the strategy game to try.

Based on the test results charted below it's clear that WiC doesn't place a limit on the maximum frame rate (to prevent a waste of power) which is good for full-spectrum benchmarks like ours, but bad for electricity bills. The average frame rate is shown for each resolution in the chart below. World in Conflict just begins to place demands on the graphics processor at the 1680x1050 resolution, so we'll skip the low-res testing.

The GT200 series GPUs from NVIDIA seem to have a distinct advantage with the World in Conflict benchmark. Both the standard and overclocked XFX HD5770 pull acceptable frame rates, though, even if they can't catch up to a GTX260. It looks like something is not optimized in this benchmark for the latest ATI cards, until you see the CrossfireX results. The new Juniper-based cards scale really well in this game, and kick the GTX285 to the curb.

Our last benchmark of the series brings DirectX 11 into the mix, a situation that only the ATI cards under test are capable of handling.

BattleForge - Renegade Benchmark Results

In anticipation of the release of DirectX 11 with Windows 7, and coinciding with the release of AMD's ATI HD 5870, BattleForge has been updated to allow it to run using DirectX 11 on supported hardware. Well, what does all of this actually mean, you may ask? It gives us a sip of water from the Holy Grail of game design and computing in general: greater efficiency! What does this mean for you? It means that the game will demonstrate a higher level of performance for the same processing power, which in turn allows more to be done with the game graphically. In layman's terms, the game will have a higher frame rate and new ways of creating graphical effects, such as shadows and lighting. The culmination of all of this is a game that both runs and looks better. The game is running on a completely new graphics engine that was built for BattleForge.

BattleForge is a next-gen real time strategy game, in which you fight epic battles against evil along with your friends. What makes BattleForge special is that you can assemble your army yourself: the units, buildings and spells in BattleForge are represented by collectible cards that you can trade with other players. BattleForge is developed by EA Phenomic. The studio was founded by Volker Wertich, father of the classic "The Settlers" and the SpellForce series. Phenomic has been an EA studio since August 2006.

BattleForge was released on Windows in March 2009. On May 26, 2009, BattleForge became a Play 4 Free branded game with only 32 of the 200 cards available. In order to get additional cards, players will now need to buy points on the BattleForge website. The retail version comes with all of the starter decks and 3,000 BattleForge points.

Never mind the DX10 v. DX11 question, the real news here is that this game was almost certainly developed exclusively on ATI hardware, and it shows. The stock XFX HD5770 trumps the GTX285, and the overclocked one goes one better. CrossfireX scales way past 80% at both widescreen resolutions, and puts this game into the frame rate range where it runs quite smoothly.

The BattleForge benchmark itself is a tough one, once all the settings are maxed out. The graphics are suitably impressive, even though they were developed primarily on the DirectX 10 platform. In case you are wondering, these results are with SSAO "On" and set to the Very High setting. I know the NVIDIA cards do a little better when SSAO is set to "Off", and I will eventually get around to posting a full set of results with this setting. Personally though, I think the writing is on the wall as far as DirectX 11 goes, and if there isn't going to be a level playing field for 3-4 months, it's not ATI's fault. I mean, who DIDN'T know, more than a year ago, that Windows 7 and DirectX 11 were coming?


In our next section, we investigate the thermal performance of the Radeon HD5770, and see if that half-size 40nm GPU die still runs cool, once it's overclocked. The GPU cooler hides a secret advantage, as we'll see.

XFX Radeon HD5770 Temperature

It's hard to know exactly when the first video card got overclocked, and by whom. What we do know is that it's hard to imagine a computer enthusiast or gamer today who doesn't overclock their hardware. Of course, not every video card has the headroom. Some products run so hot that they can't suffer any higher temperatures than they generate straight from the factory. This is why we measure the operating temperature of the video card products we test.

We've already seen in our previous reviews of Juniper-based video cards that the half pint chip runs impressively cool, paired with fairly basic cooling components. Now that we've overclocked both the GPU and the memory, we need an extra margin of error in the cooling department. Normally, the stock blower runs about 1200 RPM, and increases with higher loads to somewhere between 1600 and 1700 RPM, depending on how hard you push the card in its stock configuration. I wanted to see how cool I could keep the GPU with maximum overclocks, so I clicked on the "Enable Manual Fan Control" check box and zoomed that fan up to 100%, which I found out is 4000 RPM. Loud, yes? Effective, yes? Would I eventually end up trying to turn it down, until it made a difference in stability, yes? For now, let's just revel in the fact that overkill settings are readily available in the factory driver package.

To begin testing, I use GPU-Z to measure the temperature at idle as reported by the GPU. Next I use FurMark 1.7.0 to generate maximum thermal load and record GPU temperatures at high-power 3D mode. The ambient room temperature remained stable at 22C throughout testing. The ATI Radeon HD5770 video card recorded 31C in idle 2D mode, and increased to 51C after 20 minutes of stability testing in full 3D mode, at 1920x1200 resolution and the maximum MSAA setting of 8X. I don't think I need to tell you that 51C is an astonishingly low temperature for an overclocked, mid-range gaming card getting kicked around by FurMark. This cooling package can take everything you and Juniper can throw at it.
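Because the ambient temperature was held at 22C, the readings are easier to compare with other test rooms when expressed as a rise over ambient. The short Python sketch below does only that subtraction; the variable names are illustrative, and the numbers are the ones reported above.

```python
# Illustrative only: readings are the ones reported above, names are hypothetical.
AMBIENT_C = 22  # room temperature during testing

readings_c = {"idle (2D)": 31, "FurMark load (3D)": 51}

# Rise over ambient for each operating mode
rise_over_ambient = {mode: temp - AMBIENT_C for mode, temp in readings_c.items()}

for mode, rise in rise_over_ambient.items():
    print(f"{mode}: {rise} C above ambient")
```

With the fan forced to 100%, that works out to 9C over ambient at idle and 29C over ambient under FurMark.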

FurMark is an OpenGL benchmark that heavily stresses and overheats the graphics card with fur rendering. The benchmark offers several options allowing the user to tweak the rendering: fullscreen / windowed mode, MSAA selection, window size, duration. The benchmark also includes a GPU Burner mode (stability test). FurMark requires an OpenGL 2.0 compliant graphics card with a lot of GPU power! As an oZone3D.net partner, Benchmark Reviews offers a free download of FurMark to our visitors.

FurMark does two things extremely well: it drives the thermal output of any graphics processor higher than any other application or video game, and it does so consistently every time. While FurMark is not a true benchmark tool for comparing different video cards, it still works well for comparing one product against itself using different drivers or clock speeds, or for testing the stability of a GPU, since it raises temperatures higher than any other program. In the end, though, it's a rather limited tool.

In our next section, we discuss electrical power consumption and learn how well (or poorly) each video card will impact your utility bill...

VGA Power Consumption

Life is not as affordable as it used to be, and items such as gasoline, natural gas, and electricity all top the list of resources which have exploded in price over the past few years. Add to this the limit of non-renewable resources compared to current demands, and you can see that the prices are only going to get worse. Planet Earth needs our help, and needs it badly. With forests becoming barren of vegetation and snow capped poles quickly turning brown, the technology industry has suddenly adopted a new attitude towards becoming "green". I'll spare you the powerful marketing hype that I get from various manufacturers every day, and get right to the point: your computer hasn't been doing much to help save energy... at least up until now.

To measure isolated video card power consumption, Benchmark Reviews uses the Kill-A-Watt EZ (model P4460) power meter made by P3 International. A baseline test is taken without a video card installed inside our computer system, which is allowed to boot into Windows and rest idle at the login screen before power consumption is recorded. Once the baseline reading has been taken, the graphics card is installed and the system is again booted into Windows and left idle at the login screen. Our final loaded power consumption reading is taken with the video card running a stress test using FurMark. Below is a chart with the isolated video card power consumption (not system total) displayed in Watts for each specified test product:

| VGA Product Description (sorted by combined total power) | Idle Power | Loaded Power |
|---|---|---|
| NVIDIA GeForce GTX 480 SLI Set | 82 W | 655 W |
| NVIDIA GeForce GTX 590 Reference Design | 53 W | 396 W |
| ATI Radeon HD 4870 X2 Reference Design | 100 W | 320 W |
| AMD Radeon HD 6990 Reference Design | 46 W | 350 W |
| NVIDIA GeForce GTX 295 Reference Design | 74 W | 302 W |
| ASUS GeForce GTX 480 Reference Design | 39 W | 315 W |
| ATI Radeon HD 5970 Reference Design | 48 W | 299 W |
| NVIDIA GeForce GTX 690 Reference Design | 25 W | 321 W |
| ATI Radeon HD 4850 CrossFireX Set | 123 W | 210 W |
| ATI Radeon HD 4890 Reference Design | 65 W | 268 W |
| AMD Radeon HD 7970 Reference Design | 21 W | 311 W |
| NVIDIA GeForce GTX 470 Reference Design | 42 W | 278 W |
| NVIDIA GeForce GTX 580 Reference Design | 31 W | 246 W |
| NVIDIA GeForce GTX 570 Reference Design | 31 W | 241 W |
| ATI Radeon HD 5870 Reference Design | 25 W | 240 W |
| ATI Radeon HD 6970 Reference Design | 24 W | 233 W |
| NVIDIA GeForce GTX 465 Reference Design | 36 W | 219 W |
| NVIDIA GeForce GTX 680 Reference Design | 14 W | 243 W |
| Sapphire Radeon HD 4850 X2 11139-00-40R | 73 W | 180 W |
| NVIDIA GeForce 9800 GX2 Reference Design | 85 W | 186 W |
| NVIDIA GeForce GTX 780 Reference Design | 10 W | 275 W |
| NVIDIA GeForce GTX 770 Reference Design | 9 W | 256 W |
| NVIDIA GeForce GTX 280 Reference Design | 35 W | 225 W |
| NVIDIA GeForce GTX 260 (216) Reference Design | 42 W | 203 W |
| ATI Radeon HD 4870 Reference Design | 58 W | 166 W |
| NVIDIA GeForce GTX 560 Ti Reference Design | 17 W | 199 W |
| NVIDIA GeForce GTX 460 Reference Design | 18 W | 167 W |
| AMD Radeon HD 6870 Reference Design | 20 W | 162 W |
| NVIDIA GeForce GTX 670 Reference Design | 14 W | 167 W |
| ATI Radeon HD 5850 Reference Design | 24 W | 157 W |
| NVIDIA GeForce GTX 650 Ti BOOST Reference Design | 8 W | 164 W |
| AMD Radeon HD 6850 Reference Design | 20 W | 139 W |
| NVIDIA GeForce 8800 GT Reference Design | 31 W | 133 W |
| ATI Radeon HD 4770 RV740 GDDR5 Reference Design | 37 W | 120 W |
| ATI Radeon HD 5770 Reference Design | 16 W | 122 W |
| NVIDIA GeForce GTS 450 Reference Design | 22 W | 115 W |
| NVIDIA GeForce GTX 650 Ti Reference Design | 12 W | 112 W |
| ATI Radeon HD 4670 Reference Design | 9 W | 70 W |

The XFX Radeon HD5770 pulled 22 (119-97) watts at idle and 115 (212-97) watts when running full out, using the test method outlined above. These numbers are very close to the factory numbers of 18W at idle and 108W under load, and are the exact same results I got when I tested the reference card from ATI. This is one area where these new 40nm cards excel. If you keep your computer running most of the day and/or night, this card could easily save you 1 kWh per day in electricity.
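The subtraction behind those figures is simple enough to sketch in a few lines of Python. The function and variable names are illustrative only and are not part of any Benchmark Reviews tooling; the wattages are the ones quoted above. The last few lines show how large an idle-power gap has to be to add up to roughly 1 kWh per day. As an assumed point of comparison they use the 58 W idle figure for the Radeon HD 4870 and the 16 W idle figure for the reference HD 5770 from the chart; the review does not say which older card the estimate is based on.

```python
# Illustrative sketch: names are hypothetical, wattages are taken from the review.

BASELINE_W = 97  # wall reading with no video card installed, idle at the login screen


def isolated_power(system_reading_w: int, baseline_w: int = BASELINE_W) -> int:
    """Return the video card's share of a wall-socket reading, in watts."""
    return system_reading_w - baseline_w


idle_w = isolated_power(119)  # system idle with the XFX HD5770 installed
load_w = isolated_power(212)  # system running the FurMark stress test

print(f"Idle: {idle_w} W, load: {load_w} W")

# Assumption: compare the idle draw of the HD 4870 reference card (58 W in the
# chart) with the HD 5770 reference card (16 W), with the PC left on for 24 hours.
idle_gap_w = 58 - 16
kwh_per_day = idle_gap_w * 24 / 1000
print(f"Idle savings over 24 h: {kwh_per_day:.2f} kWh")
```

Under that assumption, a 42 W difference at idle is already enough to reach about 1 kWh per day before any load savings are counted.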

Radeon HD5770 Final Thoughts

The alternative title for this review could have been: "What Price DirectX 10?" or "Who Killed Crysis?". I know the big news is DirectX 11, and how it is a major advancement in both image quality and coding efficiency, but for the time being, we're stuck in a DirectX 10 world, for the most part. DX11 games won't be thick on the ground for at least a year, and some of us are going to continue playing our old favorites. So, with the switch to Windows 7, what's the impact on gaming performance? So far, it's a bit too random for my tastes.

We seem to be back to a situation where the software differences between games have a bigger influence on performance than hardware and raw pixel processing power. As the adoption rate for Windows 7 ramps up, more and more gamers are going to be wondering if DirectX 10 is a blessing or a curse. Crysis gets cut off at the knees, but Far Cry 2 gets a second wind with DX10. World In Conflict holds back its best game play for NVIDIA customers, but BattleForge swings the other way, with DX10 and DX11.

I have a feeling this is why gamers resolutely stuck with Windows XP, and never warmed up to Vista. It wasn't the operating system per se, as much as it was DirectX 10. And I want to clarify; there's probably nothing inherently wrong with DX10, it's just that so few games were designed to use it effectively. The other problem is that, unlike other image enhancing features, DirectX has no sliding scale. I can't select 2x or 4x or 8x, to optimize the experience, it's either all in, or all out.

The good news is that the adoption rate for Windows 7 will probably set records, if anyone is keeping score. Combine that with the real-world benefit to software coders that DirectX 11 brings, and there is a good probability that we won't be stuck in DX10 land for very long. New graphics hardware from both camps, a new operating system, a new graphics API, and maybe an economic recovery in the works? It's going to be an interesting holiday season, this year!

XFX Radeon HD5770 Conclusion

The performance of the XFX HD5770 is more than adequate for most game titles. In stock form it doesn't quite match an overclocked GTX260, but as soon as I leveled the playing field with a modest overclock in ATI Overdrive, it was more than competitive. Just keep reminding yourself that these are mid-range GPUs with only half the horsepower of the Cypress. I think people were expecting more from the Juniper; in fact right up until launch, rumors consistently called for 1120 shaders, which would have meant that it was 70% of a Cypress GPU, not the 50% that it turned out to be. There's a $100 gap between the HD5770 and the HD5850, which needs to be filled by ATI, so don't expect the HD5770 to compete with the HD4890 or the GTX275, at least on frame rates.
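The 70% and 50% figures follow directly from the stream processor counts. A quick check in Python, assuming the 1600 stream processors of the Cypress-based HD 5870 (a figure taken from ATI's published specifications, not from this review):

```python
# Assumption: Cypress (HD 5870) has 1600 stream processors, per ATI's published
# specifications. The other two counts come from this review.
CYPRESS_SP = 1600
JUNIPER_SP = 800    # HD5770 as shipped
RUMORED_SP = 1120   # pre-launch rumor


def share_of_cypress(stream_processors: int) -> float:
    """Fraction of Cypress's shader count that a given GPU provides."""
    return stream_processors / CYPRESS_SP


print(f"Rumored Juniper: {share_of_cypress(RUMORED_SP):.0%} of Cypress")
print(f"Actual Juniper:  {share_of_cypress(JUNIPER_SP):.0%} of Cypress")
```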

Performance is more than just frames-per-second, though; the ability to run 2-3 monitors with Full ATI EyeFinity Support counts, too. Plus, we've been measuring performance with Beta drivers. If you've read some of my recent video card reviews, you've got a better understanding of why driver performance on launch day is not a good measure of the final product. The raw performance numbers are plenty good enough for the target price point today, and I predict even better things to come for both price and performance.

The appearance of the product itself is top notch. The ATI reference cooler was transformed once XFX got their graphics artists to work up a product label; they improved the appearance by a large margin. The full cover provides plenty of space for almost unlimited choice in graphics and XFX took full advantage of it. The reference design has plenty of cooling capacity for the tiny Juniper GPU, even with the higher GPU overclock, once the fan is ramped up.

The build quality of the XFX Radeon 5770 is much better than the engineering sample I received before the launch date. The retail version XFX is putting out had no quality issues I could detect. The parts were all high quality, and the PC board was manufactured and assembled with care and precision.

The features of the HD5770 are amazing, having been carried over in full measure from the HD5800 series: DirectX 11, Full ATI Eyefinity Support, ATI Stream Technology Support, DirectCompute 11 and OpenCL Support, HDMI 1.3 with Dolby True HD and DTS Master Audio. We've barely scratched the surface in this review of all the capability on offer, by focusing almost exclusively on gaming performance, but the card has other uses as well. Speaking of gaming though, I was a little disappointed that XFX didn't include a coupon for DiRT 2, as some other ATI partners are doing. It's one of the DirectX 11 titles I'm most looking forward to playing.

As of late October, Newegg is selling the XFX Radeon HD5770 at $179.99, which is $15 higher than several other vendors. XFX has always commanded a premium for their cards, because of their enthusiast-based support model. They offer a double lifetime warranty, which is quite useful for enthusiasts who buy and sell the latest hardware on a regular basis. The second owner gets the second full lifetime warranty. That's a very nice benefit if you know the guy that owned it before you ran it 24/7, highly overclocked at full load, loading up on points in Folding@Home. I think this is less likely to be an issue with a card in this price range, but it does explain the price premium a bit.

The XFX Radeon HD5770 earns a Silver Tachometer Award, because it fills an important slot in the graphics card middle ground at a price that most casual users won't have to think too hard about. With the launch of Windows 7 and its DirectX 11 interface, anyone who wants to take advantage of the advanced features becoming more prevalent in the next 4-6 months needs new hardware. If you're shopping in this price range, the HD5770 is the only card to get; every other choice is going to cost more or do less.

Pros:

+ Unmatched feature set

+ Extremely low power consumption

+ 1GB of GDDR5 memory

+ Easy to overclock and CrossfireX

+ Lots of cooling headroom

+ Requires only one 6-pin power connector

+ HDMI and DisplayPort native interfaces

+ Good looks never hurt anybody

+ Most heat is exhausted outside the case

Cons:

- No voltage control in ATI Overdrive, no XFX tool available

- Premium pricing at launch

Ratings:

- Performance: 8.50

- Appearance: 9.00

- Construction: 9.00

- Functionality: 9.50

- Value: 8.75

Final Score: 8.95 out of 10.
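The review does not state how the final score is derived, but an unweighted mean of the five category ratings reproduces it. The sketch below is only that check, written under the assumption of equal weights; the dictionary is an illustrative structure, not an official scoring formula.

```python
# Assumption: the final score is an equal-weight average of the five ratings.
ratings = {
    "Performance": 8.50,
    "Appearance": 9.00,
    "Construction": 9.00,
    "Functionality": 9.50,
    "Value": 8.75,
}

final_score = round(sum(ratings.values()) / len(ratings), 2)
print(f"Final Score: {final_score:.2f} out of 10")
```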

Quality Recognition: Benchmark Reviews Silver Tachometer Award.

Questions? Comments? Benchmark Reviews really wants your feedback. We invite you to leave your remarks in our Discussion Forum.


Comments

better board good cooling with 2 x 5750's SLI 100W whew come on one card/HP/green top of the line!! great for us lower price than two cards and enough to go 4 D common ATI GO Green!!!off the hook you win the first and second round if you should need help please e mail me i will walk you through it but you must share a few with me as rewards please I really hope i will hear from you I will put you on the top of the mountain oh oh oh oh oh lol PLEASE !!!

kick it up please before the HD6730

I know that when you buy a new product you expect it to work, but even so there are always 'lemons'.

Anyhow, could you explain to me exactly what I need to hook up 3 monitors and I also want to hook up my Sony Bravia part time.

I keep hearing about a Display Port, do I need to buy this? What cables? Right now my main monitor (NEC22WMGX) is in the center and is using an HDMI cable. I have 2 other monitors which are on the left and right and these are Mid Range LG 22's and these are both using VGA cables right now but I can only hook up one or the other. All 3 will not display this way..

Thanks! Awesome article! I am all set to go out and buy a second hd5770!

Webdevoman

PS-I hope you don't mind me asking for support here.

The legacy outputs (VGA, DVI, HDMI) each require their own reference clock, while DisplayPort doesn't require one for each output. One graphics card has only 2 reference clocks. Therefore, if you want more than 2 displays, one has to use DisplayPort...

Also, converting from DisplayPort to VGA/HDMI/DVI isn't that simple either. Due to the above problem and other incompatibilities, you need either a passive or active converter... google to find out what you need for your outputs...

If your graphics card only has DVI, VGA, HDMI (or a mixture of the three), you CAN ONLY output to a maximum of 2 screens.

The video card I have is the same one in this review, HD5770 and I just checked, my Dell ultrasharp has a displayport. Does the fact that my monitor has a display port mean that I can just buy a displayport cable?

If I hook up a displayport cable to the UltraSharp can I hook 2 other monitors with HDMI or will I have to use one with hdmi and one with dvi or vga??

Thank you so much. I was ready to go out and spend $130 on a powered display port adapter and I don't think I need one now..

Great blog. I will be reading all of the reviews here from now on..

Thanks

Yes, you can buy a DisplayPort cable and it will work perfectly for hooking up your Dell monitor to this card. Dell has been in the forefront with DisplayPort, and their monitors are one of the few choices we have at the moment.

I think you can get a DVI to HDMI adapter if you need to add two more monitors that only have HDMI inputs. Otherwise just hook one up with HDMI and the other with DVI. The actual video signals are basically the same for these two.

Thanks so much for your help.

I ended up buying a Dell Active Display Port to DVI and I finally have three monitors hooked up. The problem I am having now is with resolution. When I put the powered displayport monitor (LG L227WTG) up to standard resolution the darn thing starts shutting off every 30 seconds or so.

I think eventually when I have the cash I will just purchase another 5770 and use the crossfire set up. This 3 monitor display has been a nightmare and after spending money on this adapter and cables I am still left with a subpar set up..

Any ideas on how to fix this res issue? My current set up is

1 HDMI

1 Display port using DVI

1 DVI

This site has been an awesome source of information for me on this and other hardware as well..

Thanks

BTW, I think you need to have the same VERTICAL resolution on all three displays. Is that the resolution you are trying to get on the Dell? Can you set them all the same?

Because someone has issues hooking up 3 monitors or someone has a stability issue it means "they are not ready for this card and should stick with 4###"? This is a really #y statement and perhaps you should keep your opinions to yourself if they are related to people and not tech.

In regards to the card, it works well with multiple monitors but with my powered display port I cannot get the res past 1440.

I am considering a crossfire set up which would allow me to get rid of the powered display port adapter. Prices are pretty decent on this card now too..

Thanks for the help and review,

Rich

Thanks for the reminder, Rich. Please smile next time though, OK? {8^)

In my defense though I will just say that I have been building pc's since the 90's and I researched this card but I did not anticipate the issues I would have using 3 monitors. I have been using dual monitors for as long as I can remember and I never had the need for a powered display port..

I had no issues whatsoever installing the card, tweaking or even overclocking. My only issue is the third monitor and I have read everything about it. Plugging it into a powered USB terminal doesn't work and nothing else I've tried works either.

I believe the powered display port adapter just doesn't have the power to run any res beyond 1440..

Here is a smile... :)

I've only gotten it up to 875/1250, so far...

Check it out....

##youtube.com/watch?v=aYorUpN4PQo

It makes no difference if I set up as a group so it is like one giant monitor or if I set up individually.. If the res is too high the dam thing will not work. I wasted over $100 on the stupid BizLink active display adapter..

It was a helpful video though for someone just setting up but it was an overstatement to say "all our questions would be answered".. You had my hopes up for a minute there..

Thanks,

Rich

Talk Internet Community Development

Using 2 Dell lcd's, an Intel i7 930 chip on an Asus P6T SE mobo I installed fresh last month. Using a 500W PS. I'm stuck. Any clues?

Good luck.

I was using a Geforce 9600 GSO till I tried to boot this morning and drew blank screens. Had an odd screen resolution self change last night but resetting that seemed to return it to normal. Without being a genius I figgered it was the Geforce. The PS was slightly borderline for it but it seemed to hold up ok. I also upgraded the PS today with a 500W Antec before I installed the Radeon.

I read that I should check my bios but it's kinda hard to do that when you can't see inside. hehe

Sure appreciate your quick response.

My guess would be bios or motherboard..

Thanks

Can anyone enlighten me with why this is? I figure it's an older card.

thanks

I do have another question, could you set up X-Fire using the older HD (2tab) models? Or setting up an upgraded Radeon HD (HD 8 or 9 series) to the card?

I doubt that you will be able to crossfire an HD8xxx with an HD5xxx series. Currently you cannot crossfire an HD5xxx with an HD4xxx card.

dave

2) update your blue ray drivers (from the website) not the CD.

That's all I did and it works.

If I were you I would look into a new Blue Ray program or return your Blue Ray Player.

Sorry

Thinking of using Nvidia instead because I am running an intel chipset.

Any thoughts?

I have had no problems at all with the latest drivers... I know a few others with the same card and drives and they are all ...

Maybe you will have better luck with a fresh install of the latest driver..

Please post your results, I too am waiting on a couple more 5770's and you are scaring me! lol

Thanks,

Rich

Talk Internet Community Development

I know for a fact that this video card is not bad. I have tried all visually intense games I own and they run flawlessly but, for example, I open firefox and go to one of those stupid facebook games everyone hates but won't admit it... it would kick to the BSOD.

Benchmarked the card to 105 Celsius and it held strong.

Definitely driver related. I will load the new driver when I receive the cards later in the week and post my results with those cards in xfire mode as well as the 3800 series in xfire mode.

Still having a few problems with the 3870's on the wife's computer but the VPN recovery can usually get it straight. Continuing to run 10.10 for those 2 cards and haven't had any BSOD problems.

I don't really understand much about this, so can anyone tell me if I got the right card? Will I need any extra hardware to install this? I'm really just interested in playing wow at good settings. It would be nice to play on max settings. Would that be possible with this card? Thanks ahead of time.

Jeremy

I was also wondering if you can tell me the ideal temperature range the card is supposed to be at?

Thanks so much again OLIN.

I am most gratefully yours,

Chris